The rise of micro-LLMs is proving that the future of artificial intelligence doesn’t rely solely on massive, cloud-driven models. Instead, small, efficient, and incredibly fast AI models, known as micro-LLMs, are becoming the leading technology trend heading into 2026. These compact models deliver impressive accuracy, run directly on personal devices, reduce costs, and protect privacy, making them one of the most transformative shifts in the AI landscape. In simple terms: the world is moving toward smaller, smarter AI, and 2026 is the breakthrough year.

Understanding Micro-LLMs in Simple Terms

Micro-LLMs (micro large language models, also widely called small language models or SLMs) are compact language models designed to:

- Use drastically fewer parameters

- Require minimal computational resources

- Operate efficiently on-device (local hardware)

- Deliver fast responses with low latency

- Maintain reasonable accuracy and usefulness

While frontier models like GPT-4 or Gemini Ultra are widely believed to have hundreds of billions of parameters or more (their developers have not published exact sizes), micro-LLMs typically range from:

- 500 million to 7 billion parameters

- Some ultra-light versions under 1 billion parameters

This makes them efficient enough to run on:

- Smartphones

- Laptops

- Edge AI devices

- Microservers

- Wearables

- Robotics systems

- Industrial IoT sensors
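Whether a model fits on one of these devices comes down largely to memory. A rough rule is parameter count × bytes per parameter. The minimal sketch below applies that rule, assuming the listed numeric precisions and counting the weights only (activations, the KV cache, and runtime overhead add more in practice). The function name `weight_memory_gb` is illustrative.

```python
# Bytes needed to store one weight at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (1 GB = 1e9 bytes) needed just to hold the weights.

    Ignores activations, the KV cache, and runtime overhead, so real usage is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9

if __name__ == "__main__":
    for params in (0.5e9, 3e9, 7e9, 70e9):
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{params / 1e9:g}B params -> {row}")
```

By this estimate, a 7-billion-parameter model needs about 14 GB for its weights at 16-bit precision but about 3.5 GB at 4-bit precision, which is why reduced-precision storage matters so much on phones and laptops.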

The combination of speed, privacy, and cost-efficiency is driving explosive adoption.

Why Micro-LLMs Are Gaining Massive Popularity in 2026

1. The Shift Toward On-Device AI

Users want faster responses without relying on the cloud. On-device AI addresses:

- Privacy concerns (data does not have to leave the device)

- Latency issues (no network round trip)

- Reliability (works without an internet connection)

- Cost (no cloud inference fees)

As smartphones and laptops ship with more powerful NPUs (Neural Processing Units), running small models locally is becoming practical and affordable.

2. Big Models Are Expensive

Large frontier LLMs can cost millions of dollars to train and require clusters of expensive GPUs to serve. Micro-LLMs flip the equation:

- No GPU cluster needed

- More accessible to startups

- Lower compute = lower environmental impact

- Affordable deployment at scale

For developers and businesses, the cost-cutting benefits are irresistible.

3. Efficiency Matters More Than Size

For many real-world tasks, you don’t need a trillion-parameter model. Micro-LLMs can handle:

- Text generation

- Summaries

- Customer support

- On-device search

- Device-level automation

- Translation

- Coding assistance

- Smart home instruction processing

- Offline chatbots

For these tasks their output is often good enough: fast, reasonably accurate, and affordable.

4. The Push Toward Personal AI Agents

2026 is shaping up to be the year of personal AI agents:

- AI secretaries

- Personal task organizers

- Offline copilots

- AI that runs inside apps

- AI embedded in appliances

Micro-LLMs fuel this revolution by making personal AI private, secure, and instant.

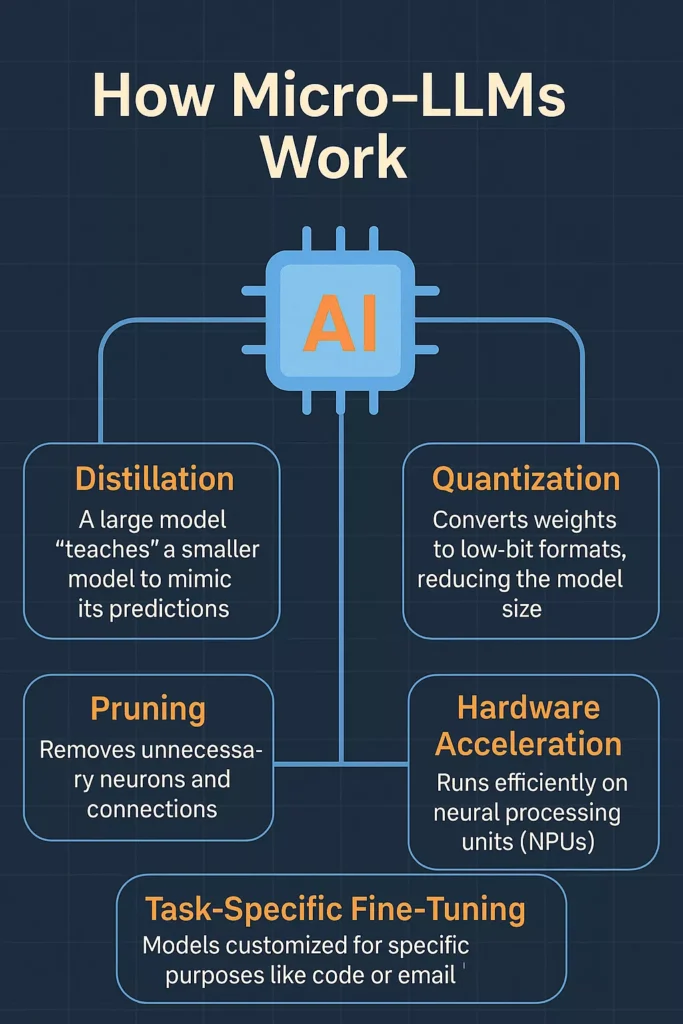

How Micro-LLMs Work: A Beginner-Friendly Explanation

Micro-LLMs rely on a combination of clever engineering techniques that make them lighter and faster without drastically compromising capability.

One of the most important techniques is knowledge distillation, in which a small “student” model is trained to imitate a large “teacher” model, typically by matching the teacher’s output probabilities rather than only the correct answers. Think of it like a master tutor teaching a student who becomes almost as capable but requires far fewer resources.
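A minimal sketch of a distillation objective, assuming the common soft-target formulation: the student is penalized by the KL divergence between the teacher’s and its own temperature-softened output distributions. Real training computes this over a full vocabulary for every token, usually mixes it with an ordinary loss on the correct answers, and runs in a framework such as PyTorch; this pure-Python version, with hypothetical function names and example scores, only shows the math.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a temperature above 1 flattens them."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    Multiplied by temperature**2, a common convention that keeps gradient
    magnitudes comparable when the temperature changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2

if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher scores for 3 candidate tokens
    close_student = [3.5, 1.2, 0.4]  # ranks the tokens the way the teacher does
    far_student = [0.5, 1.0, 4.0]    # prefers a token the teacher considers unlikely
    print(distillation_loss(close_student, teacher))  # small loss
    print(distillation_loss(far_student, teacher))    # much larger loss
```

Because the teacher’s full probability distribution is used, the student also learns which wrong answers are “almost right”, which carries more information than the correct label alone.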

Another key method is quantization. Here, the AI’s “weights” (the learned numbers that guide its decisions) are stored in lower-precision formats, for example 8-bit or 4-bit integers instead of 16- or 32-bit floating-point numbers. The slightly rounded values still produce similar results, while significantly reducing memory and storage demands and speeding up computation.
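The simplest form of the idea is symmetric 8-bit quantization with a single scale factor: each weight w is stored as an integer q in [-127, 127] and reconstructed as scale × q. This is a minimal sketch with illustrative names; production toolchains often use per-channel or per-group scales and 4-bit formats to keep the rounding error lower.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ≈ scale * q with integer q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() maps each scaled weight to the nearest integer; the clamp guards the range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize_int8(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [scale * v for v in q]

if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.01, -0.27]  # example float weights
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    for w, r in zip(weights, restored):
        print(f"{w:+.4f} -> {r:+.4f} (error {abs(w - r):.5f})")
```

Each 32-bit float (4 bytes) becomes a 1-byte integer plus one shared scale, and the reconstruction error per weight is at most half the scale.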

Similarly, pruning removes unnecessary neural connections (weights) from the model. Large models often contain redundant weights that contribute little to accuracy. Removing them makes the model lighter and, when the hardware and software can take advantage of the resulting sparsity, faster.
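Magnitude pruning, one common way to decide which connections are “unnecessary”, simply zeroes out the weights with the smallest absolute values. This minimal sketch works on a flat list with a hypothetical `sparsity` parameter (the fraction of weights to remove); real tools typically prune layer by layer and fine-tune afterwards to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights that have the smallest absolute values."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Rank weight indices from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]

if __name__ == "__main__":
    layer = [0.8, -0.02, 0.3, 0.001, -0.6, 0.05]  # example weights
    print(magnitude_prune(layer, sparsity=0.5))
    # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

Zeroed weights only save memory and time if the runtime stores them in a sparse format, or if pruning removes whole structures such as neurons or attention heads (structured pruning).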

Finally, modern devices are now equipped with advanced NPUs (Neural Processing Units) specifically designed to accelerate AI tasks. These dedicated chips allow micro-LLMs to run smoothly even on mobile hardware, making them a key enabler of on-device AI.
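NPUs speed up the arithmetic, but when a model generates text one token at a time, essentially every weight has to be read from memory for each new token, so memory bandwidth often sets the ceiling on speed. Under that assumption (and ignoring caching, batching, and compute limits), a back-of-the-envelope upper bound is bandwidth ÷ model size. The 60 GB/s figure below is a hypothetical value, not a measurement of any particular chip.

```python
def max_tokens_per_second(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Upper bound on decode speed when each token streams all weights from memory once."""
    if model_bytes <= 0:
        raise ValueError("model_bytes must be positive")
    return bandwidth_bytes_per_s / model_bytes

if __name__ == "__main__":
    bandwidth = 60e9  # hypothetical memory bandwidth: 60 GB/s
    params = 3e9      # a 3-billion-parameter micro-LLM
    for label, bytes_per_param in (("fp16", 2.0), ("int4", 0.5)):
        size = params * bytes_per_param
        print(f"{label}: {size / 1e9:.1f} GB of weights -> "
              f"at most {max_tokens_per_second(size, bandwidth):.0f} tokens/s")
```

With these assumed numbers, the 16-bit model tops out around 10 tokens per second and the 4-bit version around 40, showing how smaller and quantized models translate directly into faster responses on the same hardware.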

Real-World Applications of Micro-LLMs in 2026

Micro-LLMs are not just a technical innovation; they are transforming everyday life. Their real-world impact spans consumer electronics, businesses, healthcare, education, and beyond.

1. Smarter Smartphones and Personal Devices

Smartphones are becoming AI-first machines. Micro-LLMs allow them to perform tasks like generating replies, summarizing texts, translating languages, analyzing images, and managing schedules—without needing an internet connection. Many brands are already embedding micro-LLMs directly into their operating systems, enabling private and lightning-fast responses.

2. AI-Enhanced Laptops and Workstations

Laptops equipped with on-device AI are reshaping productivity. Writers, developers, designers, students, and remote professionals can now rely on fast offline AI models for tasks like coding assistance, research summaries, document analysis, and creative brainstorming. This removes dependence on cloud services and makes AI tools accessible anytime.

3. Smarter Homes Without Cloud Reliance

Micro-LLMs are empowering smart homes to operate more independently. Instead of sending voice commands to remote servers, devices like smart speakers, thermostats, and home assistants can process requests locally. This boosts security, reduces latency, and allows devices to continue functioning even without an internet connection.

4. Customer Support Reinvented

Businesses are adopting micro-LLM-driven support bots to provide instant, accurate customer responses without the heavy costs associated with cloud-based AI. Since micro-models can run on internal systems, companies gain more control over their data while delivering smoother user experiences.

5. Automotive Intelligence

In cars, micro-LLMs are powering voice assistants, predictive alerts, maintenance recommendations, and personalized driving experiences. Because driving often happens in areas with poor connectivity, local AI models ensure that intelligent features remain consistent and reliable.

6. Next-Generation Healthcare Tools

Wearables and medical devices are becoming smarter through embedded micro-LLMs. They can analyze health patterns on the device, generate recommendations, and monitor patient conditions without sending sensitive data to the cloud.

7. Robotics and Automation

In factories, warehouses, and service industries, micro-LLMs are making robots more responsive and capable of real-time decision-making. Since these models can run on-device, they allow machines to react instantly to environmental changes, improving both safety and efficiency.

Micro-LLMs and the Human Experience

What makes micro-LLMs truly exciting is how they make technology feel more personal. Because they can operate privately on your device, they’re capable of learning your preferences, writing styles, tone, and daily behavior patterns—all without storing data externally.

Imagine a writing assistant that instantly understands your tone. A fitness AI that shapes routines based on your habits. A personal planning assistant that learns your daily structure. On-device intelligence makes this level of personalization possible without handing personal data to a remote service. Micro-LLMs bring AI closer to the user, both physically and functionally.

The Future of Micro-LLMs: What Lies Beyond 2026

The momentum behind micro-LLMs shows no sign of slowing down. As hardware improves and models become more optimized, the next few years will bring even more advanced capabilities.

One clear development is the emergence of hyper-personalized offline AI. Users will have AI agents that adapt uniquely to their preferences, history, and habits—completely privately and without cloud involvement.

Another major evolution will be the rise of AI-first hardware, where phones, laptops, tablets, and wearables are designed around AI processing capabilities. We’re already seeing companies integrate larger NPUs and dedicated memory blocks meant for running micro-LLMs. This should make devices considerably more efficient at handling on-device intelligence.

Micro-LLMs are also moving rapidly toward becoming multimodal, enabling them to understand not just text but also audio, images, sensor data, and eventually video—all locally. This will significantly enhance their usefulness for applications like smart cameras, voice assistants, real-time editing tools, and autonomous robotics.

Another important change is in energy efficiency. Smaller models consume less power, which means longer battery life and a more eco-friendly AI ecosystem. As AI adoption grows, energy-efficient models will become increasingly important for sustainable technological growth.
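The energy argument can be made concrete with a widely used rule of thumb: a transformer’s forward pass costs roughly 2 floating-point operations (one multiply and one add) per parameter for each token processed. The sketch applies that rule and ignores the extra attention cost of long contexts; energy use does not scale exactly with operation count, so the ratio is only indicative. The 3B and 70B sizes are example values.

```python
def flops_per_token(num_params: float) -> float:
    """Rule-of-thumb forward-pass cost: ~2 FLOPs per parameter per token."""
    return 2.0 * num_params

def relative_cost(large_params: float, small_params: float) -> float:
    """How many times more compute the large model needs per token."""
    return flops_per_token(large_params) / flops_per_token(small_params)

if __name__ == "__main__":
    small, large = 3e9, 70e9  # example sizes: a 3B micro-LLM vs. a 70B model
    print(f"3B model:  {flops_per_token(small):.1e} FLOPs per token")
    print(f"70B model: {flops_per_token(large):.1e} FLOPs per token")
    print(f"ratio: about {relative_cost(large, small):.0f}x")
```

Under this estimate, a 70B model performs about 23 times more arithmetic per generated token than a 3B model.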

Micro-LLMs vs. Large LLMs: Choosing the Right Tool

| Category | Micro-LLMs (Small Models) | Large LLMs (Massive Models) |
|---|---|---|
| Model Size | 500M–7B parameters | 50B–1T+ parameters |
| Speed | Extremely fast, near-instant responses | Slower due to heavy computation |
| Deployment | Runs locally on devices | Requires cloud servers or GPU clusters |
| Cost | Very low—minimal hardware & no cloud fees | High training + high inference costs |
| Privacy | Strong, as data stays on-device | Data often sent to cloud servers |
| Internet Dependency | Works offline | Requires stable internet connection |
| Energy Consumption | Low power usage | High power + cooling requirement |
| Use Cases | Personal AI, mobile apps, IoT, robots, offline assistants | Advanced reasoning, research, creative generation |
| Training Data | Smaller, task-focused datasets | Massive, multi-domain datasets |
| Strengths | Speed, privacy, efficiency, personalization | Deep knowledge, rich reasoning, complex creativity |
| Limitations | Limited depth & context compared to large models | High cost, slower, less private, less accessible |
| Best For | Everyday automation, on-device tasks, edge AI | Complex problem solving, high-end creative tasks |
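In practice, many applications combine both columns of the table: a local model handles everyday requests and a large cloud model is called only when needed. The routing function below is a hypothetical, deliberately simple policy built from the table’s rows (internet dependency, privacy, use cases, context length). The task labels and the 4,096-token local context limit are assumptions for illustration, not properties of any specific product.

```python
from dataclasses import dataclass

# Hypothetical task labels that a small on-device model is assumed to handle well.
LOCAL_TASKS = {"summarize", "translate", "reply", "autocomplete", "device_command"}

@dataclass
class Request:
    task: str                    # hypothetical label assigned by the app
    prompt_tokens: int           # length of the input in tokens
    contains_private_data: bool  # e.g. health records or personal messages
    online: bool                 # whether a network connection is available

def choose_model(req: Request, local_context_limit: int = 4096) -> str:
    """Return "local" or "cloud" using a simple, illustrative routing policy."""
    if not req.online:
        return "local"  # offline: the on-device model is the only option
    if req.contains_private_data:
        return "local"  # keep sensitive inputs on the device
    if req.task in LOCAL_TASKS and req.prompt_tokens <= local_context_limit:
        return "local"  # everyday task that fits the small model's context
    return "cloud"      # long or complex requests go to the large model

if __name__ == "__main__":
    print(choose_model(Request("summarize", 800, False, True)))         # local
    print(choose_model(Request("research_report", 800, False, True)))   # cloud
    print(choose_model(Request("research_report", 800, False, False)))  # local
```

The ordering of the checks encodes priorities: availability first, then privacy, then capability. A real product would tune these rules (or learn them) from its own quality and cost measurements.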

Why 2026 Is the Breakthrough Year for Micro-LLMs

Several powerful trends are converging, making 2026 the perfect year for micro-LLMs to explode into mainstream usage. Consumer devices are more powerful than ever, equipped with next-generation NPUs. Businesses are searching for cost-efficient AI solutions. Privacy regulations are tightening across the globe, encouraging on-device processing. People want AI that is personal, fast, and secure.

Additionally, major tech players, including Apple, Google, Microsoft, Meta, and leading open-source communities, are actively developing and promoting small AI models; Google’s Gemma and Gemini Nano, Microsoft’s Phi family, and Meta’s Llama 3.2 1B and 3B models are examples. Their support accelerates research, provides tools for developers, and pushes micro-LLMs into every corner of the digital world.

With this momentum, micro-LLMs are not just a trend—they’re a major technological shift redefining how humans interact with AI.

Conclusion: The Big Impact of Small AI

The rise of micro-LLMs highlights a powerful truth: intelligence doesn’t have to be enormous. What matters is relevance, speed, personalization, privacy, and accessibility. Micro-LLMs embody all of these qualities, offering a new kind of AI that feels more human, more helpful, and more connected to everyday life.

As we move deeper into 2026 and beyond, small AI models will continue shaping the future of devices, industries, and personal experiences. They bring AI closer to the user, closer to the device, and closer to daily life—quietly powering the next generation of intelligent technology.

FAQs

1. What are Micro-LLMs and how are they different from regular LLMs?

Micro-LLMs are compact, lightweight versions of large language models designed for fast, efficient, and on-device performance. Unlike traditional LLMs that rely on massive cloud resources, micro-LLMs can run locally on personal devices such as smartphones, laptops, or IoT systems. They are smaller in size but optimized to handle everyday tasks quickly and privately.

2. Are Micro-LLMs accurate enough for real-world applications?

For many tasks, yes. While they may not match the deep reasoning abilities of trillion-parameter models, micro-LLMs are capable enough for most everyday tasks. They perform well in areas like writing assistance, summarization, translation, customer service, and device automation. Their accuracy is continually improving through distillation and fine-tuning techniques.

3. Why are Micro-LLMs becoming popular in 2026?

Micro-LLMs are gaining momentum because they solve three major problems: cost, privacy, and speed. Because devices now ship with powerful NPUs, these small models respond quickly without relying on the cloud. They also reduce cloud costs for businesses and keep user data private, making them ideal for personal AI agents and smart devices.

4. Can Micro-LLMs run without the internet?

Absolutely. One of their biggest advantages is offline capability. Because micro-LLMs run locally on-device, they do not need a constant internet connection. This makes them reliable in areas with limited connectivity and ideal for users who value privacy and independence from cloud services.

5. Are Micro-LLMs safer than cloud-based AI?

In many ways, yes. Since they process data directly on the user’s device, Micro-LLMs keep information private and prevent sensitive data from being sent to remote servers. This reduces exposure to data leaks and helps meet stricter privacy regulations emerging across the world.