Retrieval-Augmented Generation (RAG) 2.0 is a next-generation approach to AI search that pairs a retrieval layer with a context-aware language model, so answers are grounded in retrieved sources and are clearer, more precise, and more reliable.

It improves on the original RAG approach by using deeper context linking, upgraded retrieval intelligence, dynamic grounding, and better real-time relevance scoring—making AI responses significantly more accurate and trustworthy.

RAG 2.0 is built for a world where users expect AI to not only generate text but understand, reference, and verify information before answering. It bridges the gap between traditional search engines and generative AI by delivering the best of both: trustworthy data and natural language clarity.

What Is RAG 2.0 and Why It Matters

Retrieval-Augmented Generation (RAG) was introduced to address a major weakness of early LLMs: hallucination. Traditional AI models relied only on their training data, which could be outdated or incomplete. RAG added retrieval, allowing models to fetch relevant documents at query time before generating a response.

RAG 2.0 is the upgraded version that goes beyond simple retrieval.

It uses smarter ranking, deeper context understanding, dynamic filtering, and multi-step reasoning to ensure the information is not only retrieved but also interpreted correctly.

Why It Matters Today

- Information changes rapidly

- AI must stay up-to-date

- Enterprises need accuracy, not guesswork

- Search experiences require personalization

- Users want reliability, transparency, and speed

RAG 2.0 is specifically designed to meet these expectations by connecting search with intelligent generation.

Latest RAG 2.0 Insights: Data-Backed Trends You Shouldn’t Miss

- A 2024 report from Contextual AI stated that its Contextual Language Models (CLMs), built on RAG 2.0, significantly outperformed older RAG systems and even strong baselines based on GPT‑4 on benchmarks such as Natural Questions, HotpotQA and TriviaQA.

- According to research published in 2025, citation accuracy for generative AI systems was measured at roughly 74%, with RAG-style post-processing methods improving that figure by about 15%, which highlights the reliability gains RAG architectures can deliver.

- An industry article in April 2025 described how real-time RAG workflows (web scraping + streaming data) are being used for tasks such as market monitoring and breaking-news summaries—demonstrating that RAG 2.0 is no longer just experimental but deployed in live, time-sensitive scenarios.

How RAG 2.0 Works: The Clear Breakdown

RAG 2.0 works by combining smart retrieval with intelligent generation so that each answer is contextual and grounded in real data. The five steps below walk through the pipeline in order.

A. Understanding the User’s Query

RAG 2.0 starts by deeply analyzing the user’s question. It doesn’t just look at keywords—it interprets intent, context, and the type of information required.

Key actions include:

- Detecting the purpose behind the query

- Understanding tone and depth needed

- Identifying whether the user wants facts, explanations, or insights
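
To make the idea of intent detection concrete, here is a deliberately rough Python sketch. It is only a keyword heuristic, not how any production RAG 2.0 system is known to work; the function name `classify_intent`, the three labels, and the trigger words are all assumptions chosen for illustration. A real system would more likely use a trained classifier or the language model itself.

```python
def classify_intent(query):
    """Very rough heuristic: map a query to 'fact', 'explanation', or 'insight'."""
    q = query.lower().strip()
    # Questions that ask "why" or "how" usually want an explanation.
    if q.startswith(("why", "how")) or "explain" in q:
        return "explanation"
    # Requests for a judgment or comparison are treated as wanting insight.
    if any(word in q for word in ("compare", "recommend", "should", "best")):
        return "insight"
    # Everything else is treated as a plain factual lookup.
    return "fact"


print(classify_intent("How does vector search work?"))
print(classify_intent("Which plan should we pick?"))
print(classify_intent("When was the policy updated?"))
```

The detected label could then steer later steps, for example by retrieving more documents for an explanation than for a simple fact.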

B. Retrieving the Most Relevant Information

Once the model understands the query, it searches through databases, documents, or external sources to collect the most useful information.

It retrieves using:

- Vector search for meaning-based matches

- Semantic matching to find contextually relevant data

- Multi-hop retrieval to connect related pieces of information
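
The meaning-based matching described above can be sketched with a toy vector search. This is a minimal Python example that assumes the documents have already been converted to embeddings; the three-dimensional vectors, the document IDs, and the `top_k` helper are hypothetical. A real deployment would use an embedding model and a dedicated vector index rather than a dictionary.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k=2):
    """Return the k document IDs whose vectors are most similar to the query."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical pre-computed embeddings (toy 3-dimensional vectors).
INDEX = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-faq": [0.8, 0.2, 0.1],
}

results = top_k([1.0, 0.0, 0.0], INDEX, k=2)
print(results)
```

Documents are matched by direction in vector space rather than by shared keywords, which is why two differently worded texts about the same topic can both rank highly.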

C. Filtering and Ranking Retrieved Data

Not all retrieved information is equally meaningful. RAG 2.0 filters out noise and keeps only the highest-quality evidence.

The system filters by:

- Removing outdated or irrelevant content

- Ranking documents by trust and freshness

- Prioritizing sources aligned with the query’s intent
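
One way to picture this filtering step is a weighted score over relevance, trust, and freshness. The Python sketch below is an illustration under stated assumptions: the `Doc` fields, the 365-day cutoff, and the 0.6/0.3/0.1 weights are invented for the example and are not taken from any specific RAG 2.0 implementation.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    relevance: float  # similarity to the query, 0..1
    trust: float      # hypothetical source-reliability score, 0..1
    age_days: int     # days since the document was last updated


def rank(docs, max_age_days=365, weights=(0.6, 0.3, 0.1)):
    """Drop stale documents, then sort the rest by a weighted score."""
    w_rel, w_trust, w_fresh = weights
    fresh = [d for d in docs if d.age_days <= max_age_days]

    def score(d):
        freshness = 1.0 - d.age_days / max_age_days  # 1.0 = updated today
        return w_rel * d.relevance + w_trust * d.trust + w_fresh * freshness

    return sorted(fresh, key=score, reverse=True)


docs = [
    Doc("old-blog", relevance=0.95, trust=0.4, age_days=900),
    Doc("official-guide", relevance=0.80, trust=0.9, age_days=30),
    Doc("forum-post", relevance=0.85, trust=0.3, age_days=10),
]
ranked = [d.doc_id for d in rank(docs)]
print(ranked)
```

Note that the most relevant document here is dropped because it is too old, and the trusted guide outranks the slightly more relevant forum post. How the weights are set is a design decision that depends on the domain.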

D. Generating Evidence-Grounded Answers

After selecting the best information, the AI generates a response based directly on the retrieved evidence, ensuring factual accuracy.

Benefits of this step:

- Greatly reduced hallucinations

- Clear, concise explanations

- Answers supported by real-world data
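
In practice, grounding often comes down to how the prompt is assembled. The following Python sketch shows one common pattern: number the retrieved passages and instruct the model to answer only from them. The function name `build_grounded_prompt` and the exact instruction wording are assumptions for illustration, and the call to an actual language model is left out.

```python
def build_grounded_prompt(question, passages):
    """Number each passage and instruct the model to cite by number."""
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "How long do refunds take?",
    [
        "Refunds are processed within 5 business days.",
        "Shipping takes 2 to 4 days.",
    ],
)
print(prompt)
```

Telling the model to admit when the sources are silent is what discourages it from falling back on guesswork.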

E. Adapting Output in Real Time

Finally, RAG 2.0 adjusts the tone, depth, and structure of the answer based on user needs and the conversation flow.

This includes:

- Tailoring responses to user preferences

- Offering summaries, detailed explanations, or step-by-step guides

- Updating answers instantly when new information becomes available

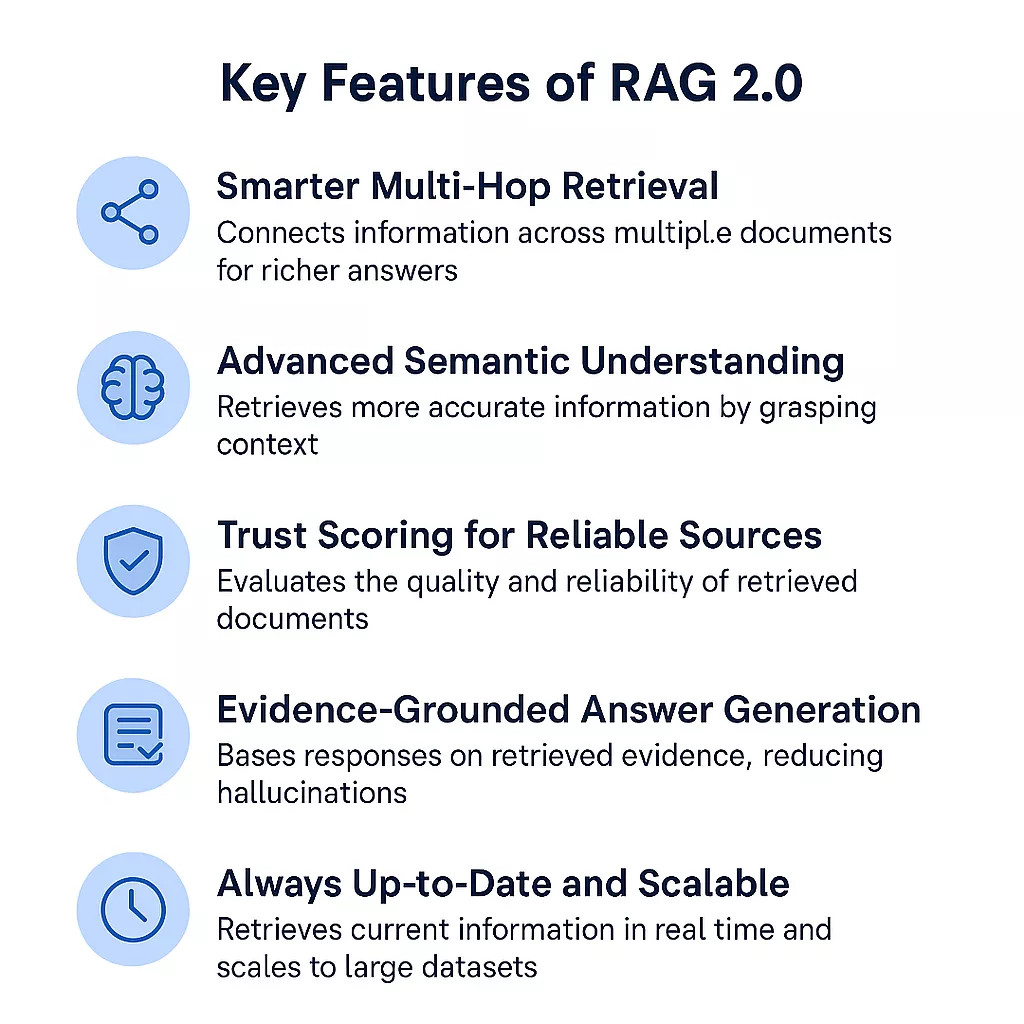

Key Features of RAG 2.0

RAG 2.0 introduces a smarter and more reliable approach to AI search by combining intelligent retrieval, deep context understanding, and evidence-based answer generation. Unlike traditional AI models that depend only on training data, RAG 2.0 actively pulls relevant information in real time and uses it to produce accurate, grounded responses. The key features are outlined below.

1. Smarter Multi-Hop Retrieval

RAG 2.0 can connect information from multiple documents instead of relying on a single source. This gives it a deeper and more complete understanding of complex topics.

Highlights:

- Links related documents

- Builds multi-step reasoning

- Delivers richer, clearer answers
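
Multi-hop retrieval is easier to grasp with a small example. The Python sketch below uses a toy three-document corpus and plain word overlap instead of embeddings. The corpus, the `search` and `multi_hop` helpers, and the idea of reusing the retrieved text as the next query are simplifying assumptions; a real system would typically let the language model write the follow-up query.

```python
CORPUS = {
    "doc-a": "Project Falcon is owned by the Platform team.",
    "doc-b": "The Platform team is led by Dana Reyes.",
    "doc-c": "The cafeteria opens at 8 am.",
}


def words(text):
    """Lowercase the text, strip basic punctuation, and split into a word set."""
    return set(text.lower().replace(".", "").replace("?", "").split())


def search(query, exclude=()):
    """Return the ID of the document sharing the most words with the query."""
    best_id, best_overlap = None, 0
    for doc_id, text in CORPUS.items():
        if doc_id in exclude:
            continue
        overlap = len(words(query) & words(text))
        if overlap > best_overlap:
            best_id, best_overlap = doc_id, overlap
    return best_id


def multi_hop(question, hops=2):
    """Retrieve a document, then reuse its text as the next query."""
    chain, query = [], question
    for _ in range(hops):
        doc_id = search(query, exclude=chain)
        if doc_id is None:
            break
        chain.append(doc_id)
        query = CORPUS[doc_id]  # follow-up query built from the evidence found
    return chain


chain = multi_hop("Who leads the team that owns Project Falcon?")
print(chain)
```

Neither document answers the question alone. The first hop finds which team owns the project, and the second hop uses that evidence to find who leads the team.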

2. Advanced Semantic Understanding

It understands the real meaning behind your query — not just keywords. This helps RAG 2.0 retrieve more accurate and contextually relevant information.

Benefits:

- Better intent detection

- Accurate search results

- Handles ambiguous questions

3. Trust Scoring for Reliable Sources

RAG 2.0 checks the reliability, recency, and quality of every document before using it. This ensures answers are built on strong, trustworthy data.

Improves:

- Accuracy

- Credibility

- Misinformation reduction

4. Evidence-Grounded Answer Generation

Unlike traditional AI, RAG 2.0 bases every response on retrieved evidence rather than assumptions. This dramatically reduces hallucinations.

Why it matters:

- Verifiable answers

- Higher factual correctness

- Safe for critical domains

5. Always Up-to-Date

RAG 2.0 retrieves information at query time, so responses reflect the latest content available in its sources without retraining the model.

Strengths:

- Fresh information

- No retraining needed for new content

- Document updates are reflected once they are indexed

6. Highly Scalable for Enterprises

RAG 2.0 easily handles millions of documents, making it ideal for enterprises with extensive knowledge bases.

Strengths:

- Fast search across large datasets

- Works smoothly with internal company documents

- Supports multi-team or multi-department workflows

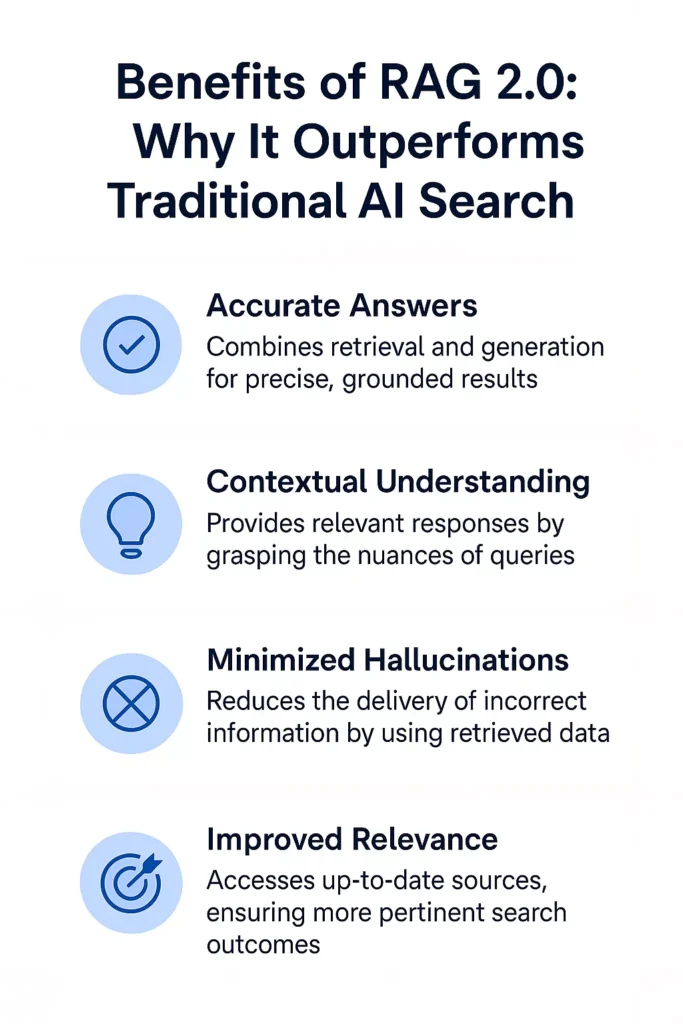

Benefits of RAG 2.0: Why It Outperforms Traditional AI Search

RAG 2.0 stands out because it merges the power of retrieval-based search with the intelligence of advanced language models, creating a hybrid system that is far more reliable and precise than traditional AI search methods. Traditional LLMs generate answers based only on pre-trained data, which can be outdated or incomplete. Search engines, on the other hand, return lists of links without offering clear synthesized answers. RAG 2.0 solves both issues by grounding every response in real, retrieved, and contextually relevant information. Below are the key benefits, explained in depth.

1. Highly Accurate, Evidence-Based Responses

One of the most powerful advantages of RAG 2.0 is its ability to produce factual, grounded answers.

Traditional AI models may generate plausible but incorrect information because they rely only on patterns learned during training. RAG 2.0 mitigates this weakness by retrieving real documents before generating output.

Why this is better:

- It reduces hallucinations dramatically.

- Answers are backed by verifiable sources.

- The system uses up-to-date information rather than outdated model memory.

For users, this means cleaner, more reliable insights, especially in domains like healthcare, finance, legal, or research.

2. Deep Personalization and Context Awareness

Unlike classic search tools that treat every user the same, RAG 2.0 can adapt to the specific needs and context of each query.

It understands nuances, intent, and user history, making answers more precise and tailored.

How personalization works:

- The system remembers previous interactions.

- It adjusts retrieval depth based on query difficulty.

- It prioritizes sources based on what the user finds most useful.

This creates an AI search experience that feels more like talking to a smart expert who knows your style and needs.

3. Always Up-to-Date Information Retrieval

Traditional LLMs can become outdated because they are trained on fixed datasets. Search engines may surface old content.

RAG 2.0 solves both issues by retrieving the latest available data right at the moment of the query.

Benefits:

- Well suited to domains where information changes daily (tech updates, regulations, stock data).

- Ensures freshness of information without retraining the model.

- Lets organizations integrate their current internal documents directly.

This ability to stay updated makes RAG 2.0 far more trustworthy and practical for real-world usage.
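
The point about freshness without retraining can be shown with a tiny in-memory index. This Python sketch is only illustrative: the `LiveIndex` class, its `upsert` and `search` methods, and the substring matching are hypothetical stand-ins for a real search or vector index.

```python
class LiveIndex:
    """Toy keyword index: documents become searchable as soon as they are added."""

    def __init__(self):
        self._docs = {}

    def upsert(self, doc_id, text):
        # Insert a new document or overwrite an old version; no model retraining.
        self._docs[doc_id] = text

    def search(self, term):
        term = term.lower()
        return sorted(
            doc_id for doc_id, text in self._docs.items() if term in text.lower()
        )


index = LiveIndex()
index.upsert("pricing", "The Pro plan costs $20 per month.")
before = index.search("enterprise")

# The document is updated; the very next query sees the new content.
index.upsert("pricing", "The Pro plan costs $25 per month. Enterprise pricing is custom.")
after = index.search("enterprise")

print(before, after)
```

The language model itself never changes here. Only the index does, which is why keeping the index current matters as much as the model.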

4. Faster and More Informed Decision-Making

RAG 2.0 doesn’t just show information—it synthesizes it into clear, actionable insights.

Users don’t need to scroll through pages of links or manually compare documents. The system does it automatically.

Why this matters:

- Reduces time spent on research.

- Helps executives, analysts, and researchers get instant clarity.

- Turns complex information into understandable summaries.

This makes RAG 2.0 a powerful productivity booster for professional and enterprise environments.

5. Transparent and Trustworthy Answers

A major problem in traditional AI search is the lack of clarity about where information comes from.

RAG 2.0 fixes this by linking its answers to the underlying sources.

Trust improvements:

- Users can verify claims by checking referenced documents.

- Enterprises gain confidence that internal policies and data are reflected accurately.

- AI output becomes auditable, making it safer for compliance-heavy industries.

Transparency builds trust—not just in the system, but in the decisions made with its help.
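
Auditable output usually depends on being able to trace each citation back to a document. The Python sketch below assumes answers cite sources with bracketed numbers such as `[1]`; that convention, the `check_citations` helper, and the file names are assumptions made for the example.

```python
import re


def check_citations(answer, sources):
    """Map each [n] marker in the answer to its source, flagging unknown markers."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    resolved = {n: sources[n] for n in cited if n in sources}
    missing = [n for n in cited if n not in sources]
    return resolved, missing


# Hypothetical sources that were passed to the model for this answer.
sources = {1: "hr/leave-policy.pdf", 2: "hr/holiday-calendar.pdf"}
answer = "Employees get 20 days of leave [1]. Unused days expire in March [3]."

resolved, missing = check_citations(answer, sources)
print(resolved)
print(missing)
```

A marker that does not resolve to a retrieved source, like `[3]` here, is a signal that the claim should be reviewed or the answer regenerated.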

6. Significantly Reduced Hallucinations

Hallucinations occur when AI generates confident but false information.

This is one of the biggest weaknesses of traditional LLMs.

RAG 2.0 reduces hallucinations because every answer is grounded in retrieved evidence, not assumptions or guesses.

Impact:

- More consistent accuracy across different topics.

- Less risk in high-stakes decision-making.

- Improved overall reliability of AI systems.

By grounding generation in real data, RAG 2.0 becomes far safer and more dependable.

7. Domain Flexibility and High Adaptability

RAG 2.0 can adapt to any industry or specialized field by simply adjusting the retrieval sources.

Examples:

- Legal databases for legal research

- Medical studies for healthcare

- Technical documentation for software developers

- Academic journals for researchers

- eCommerce catalogs for product search

Unlike traditional AI—which needs retraining—RAG 2.0 can switch contexts instantly by pulling from different data sources.
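
Switching domains by swapping data sources can be sketched as a simple registry of retrievers. In this Python example the domain names, the two stand-in retriever functions, and the `retrieve` router are all hypothetical; each stand-in would be replaced by a real database or search client.

```python
from typing import Callable, Dict, List

Retriever = Callable[[str], List[str]]


def legal_retriever(query: str) -> List[str]:
    # Stand-in for a lookup against a legal database.
    return [f"legal-db result for: {query}"]


def medical_retriever(query: str) -> List[str]:
    # Stand-in for a search over medical studies.
    return [f"medical-studies result for: {query}"]


RETRIEVERS: Dict[str, Retriever] = {
    "legal": legal_retriever,
    "healthcare": medical_retriever,
}


def retrieve(domain: str, query: str) -> List[str]:
    """Route the query to the retriever registered for the domain."""
    if domain not in RETRIEVERS:
        raise ValueError(f"no retriever registered for domain {domain!r}")
    return RETRIEVERS[domain](query)


print(retrieve("legal", "statute of limitations"))
print(retrieve("healthcare", "statin side effects"))
```

Supporting a new field then means registering one more retriever, while the generation step stays the same.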

8. Scalable for Enterprises and Large Knowledge Systems

RAG 2.0 is designed for wide-scale use across organizations with massive data repositories.

Enterprise advantages:

- Seamless integration with knowledge bases.

- Can handle millions of documents and files.

- Supports role-based access for secure information retrieval.

This makes it ideal for companies that need accurate AI assistance across teams, departments, and use cases.
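
Role-based access is typically enforced before retrieval, so restricted documents never reach the model. The Python sketch below shows the idea with a hypothetical document list; the `allowed_roles` field, the role names, and the `visible_docs` helper are assumptions for illustration.

```python
# Hypothetical documents, each tagged with the roles allowed to read it.
DOCS = [
    {"id": "salary-bands", "allowed_roles": {"hr"}},
    {"id": "vpn-setup", "allowed_roles": {"hr", "engineering", "sales"}},
    {"id": "incident-runbook", "allowed_roles": {"engineering"}},
]


def visible_docs(user_roles, docs=DOCS):
    """Keep only documents that share at least one role with the user."""
    return [d["id"] for d in docs if d["allowed_roles"] & set(user_roles)]


engineer_view = visible_docs(["engineering"])
sales_view = visible_docs(["sales"])
print(engineer_view)
print(sales_view)
```

Filtering at this stage means a generated answer cannot quote a document the user was never allowed to see.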

9. Combines Search + AI: A Powerful Hybrid Solution

Traditional AI search either:

- Relies only on generation (leading to hallucination), or

- Acts like a normal search engine (showing links instead of answers).

RAG 2.0 merges the two.

Outcome:

- Search becomes conversational, not mechanical.

- Generative AI becomes trustworthy, not imaginative.

- Users get the best of both precision and clarity.

This hybrid approach is what makes RAG 2.0 uniquely powerful compared to any previous search model.

10. Delivers Human-Like Readability With Factual Accuracy

Older AI models generated robotic or generic responses.

Search engines delivered raw data that required interpretation.

RAG 2.0 elevates the experience by combining:

- Natural, human-like language

- Concise summaries

- Grounded facts

- Contextual insights

Users get answers that are not only correct but also easy to read, understand, and act on.

RAG 2.0 vs. RAG 1.0: What’s New and Improved

| Feature | RAG 1.0 | RAG 2.0 |
|---|---|---|
| Retrieval Type | Basic Vector Search | Multi-hop, context-aware retrieval |
| Accuracy | Moderate | High and evidence-grounded |
| Context Handling | Limited | Deep contextual linking |
| Source Filtering | Minimal | Intelligent trust scoring |
| Output Quality | Decent | Clear, coherent, and highly reliable |
| Hallucinations | Still present | Significantly reduced |

RAG 2.0 is not just an upgrade—it’s a redesigned framework that merges search and AI generation in a smarter, more efficient way.

Real-World Applications of RAG 2.0

RAG 2.0 is already transforming how businesses, researchers, and users interact with information. Its ability to retrieve accurate data and generate clear, grounded responses makes it extremely valuable across industries.

a. Enterprise Knowledge Management

RAG 2.0 helps companies instantly search through internal documents, policies, reports, and knowledge bases. Employees no longer waste hours digging through files — the system pulls the most relevant information and summarizes it clearly.

Use cases:

- Internal document search

- Policy lookup

- Employee onboarding assistants

b. Customer Support Automation

AI support bots powered by RAG 2.0 can answer user questions using real company data, manuals, and FAQs — ensuring accurate, consistent responses.

Use cases:

- Chatbots that provide correct information

- Automated troubleshooting guides

- Faster ticket resolution

c. Healthcare, Legal, and Research Assistance

In specialized fields, accuracy matters. RAG 2.0 pulls verified information from medical studies, legal documents, and research papers to deliver reliable insights.

Use cases:

- Medical research assistants

- Legal case summarization

- Scientific literature reviews

d. Developer and Technical Support Tools

Developers use RAG 2.0 to search codebases, logs, and documentation with high precision. It can explain errors, suggest fixes, and summarize complex technical info.

Use cases:

- Code search assistants

- DevOps troubleshooting

- Documentation Q&A tools

Why RAG 2.0 Is the Future of AI Search

RAG 2.0 is more than a model—it’s a system that brings together the real world and generative intelligence. As AI continues to evolve, users will demand:

- Transparency

- Accuracy

- Evidence-backed answers

- Personalization

- Real-time updates

RAG 2.0 is perfectly built to meet these demands.

The shift from traditional search engines to AI-centric search experiences is accelerating, and RAG 2.0 is positioned at the core of this transformation.

How RAG 2.0 Enhances User Experience

1. Natural, Human-Like Responses

RAG 2.0 transforms the search experience by giving answers that feel conversational and human, rather than mechanical or overloaded with unnecessary details. Instead of overwhelming users with a long list of links, documents, or irrelevant text, it crafts a clean, direct explanation based on real retrieved information. This makes interactions smoother and more intuitive, especially for beginners who want straightforward guidance. The tone is natural, the flow is easy to follow, and the response feels like it’s coming from a knowledgeable assistant who understands what you’re looking for and explains it in the simplest, most helpful way.

2. Clarity Over Complexity

One of RAG 2.0’s biggest strengths is its ability to turn complicated topics into clear, understandable explanations. It retrieves accurate information, filters out the noise, and then delivers a simplified explanation without losing the core meaning. Whether a user is asking about technical concepts, scientific ideas, or business processes, RAG 2.0 focuses on giving clarity rather than overwhelming them with jargon. This makes learning new topics easier, reduces confusion, and ensures that even complex subjects feel more approachable. Users get the exact information they need—well organized, easy to digest, and written in a way that makes sense instantly.

3. Actionable Insights

RAG 2.0 goes beyond basic information by offering insights users can actually apply. If someone asks for a product comparison, it highlights the meaningful differences. If it’s a technical question, it summarizes solutions or steps. For business queries, it extracts patterns, opportunities, or recommendations. Instead of just presenting raw data, RAG 2.0 interprets it and turns it into practical, useful takeaways. This makes decision-making faster and more confident because users receive not just knowledge, but guidance. The model connects the dots and presents information in a way that empowers users to act, solve problems, or move forward with clarity.

4. Personalized Context

RAG 2.0 becomes more helpful over time by learning from user behavior, preferences, and previous queries. It tailors answers based on what a user has asked before, what style of explanation they prefer, and what type of information is most useful to them. This personalization makes the experience feel more intuitive and relevant. Instead of giving generic responses, it adapts its retrieval and tone to match each user’s needs—whether they want quick summaries, detailed breakdowns, or beginner-friendly explanations. The result is a smarter, more connected, and more meaningful interaction that feels uniquely suited to every user.

Challenges and Future Improvements

Even though RAG 2.0 is a major leap forward, it still faces a few challenges that need refinement as the technology evolves.

• Retrieval Bias

RAG 2.0 is only as strong as the information it pulls from. If the sources are outdated, biased, or incomplete, the final answer can be affected. Ensuring high-quality, diverse data remains a key challenge.

• Handling Massive Databases

Searching millions of documents at high speed requires powerful indexing and computing resources. As datasets grow, maintaining fast and accurate retrieval becomes more demanding.

• Domain-Specific Adaptation

Fields like healthcare, law, and scientific research require specialized knowledge. RAG 2.0 sometimes needs custom tuning to fully understand terminology, rules, and context unique to each industry.

• Real-Time Verification

In fast-changing areas—such as tech updates, market trends, or medical guidelines—keeping information fresh and factually correct is still a work in progress. Real-time validation remains an evolving capability.

What the Future Looks Like: RAG 3.0 and Beyond

Future versions of RAG—often called RAG 3.0 or Unified Retrieval Models—aim to expand capabilities even further by introducing:

- Multi-modal retrieval (support for images, PDFs, charts, audio, and video)

- Autonomous context expansion, where AI automatically finds related information

- Stronger built-in fact-checking to reduce errors even more

- Domain-tuned reasoning, offering expert-level insights for specialized industries

These advancements will make RAG systems smarter, more accurate, and even more aligned with real-world needs.

Conclusion

RAG 2.0 represents the next phase of AI search—one that is smarter, more accurate, context-aware, and aligned with real-world information needs.

By grounding answers in verified data while maintaining the natural fluency of modern language models, it delivers a powerful hybrid approach that benefits individuals, enterprises, and entire industries.

From improving customer support to assisting researchers and powering smarter search engines, RAG 2.0 is shaping the future of trustworthy, transparent AI-driven information access.

If you’re building any AI system today, RAG 2.0 is no longer optional; it’s the new standard.

FAQs

1. What makes RAG 2.0 different from traditional AI models?

RAG 2.0 doesn’t rely only on pre-trained knowledge. It retrieves real-time, relevant information from trusted sources and then generates answers based on that evidence. This results in more accurate, reliable, and context-aware responses compared to standard AI models.

2. Does RAG 2.0 completely eliminate hallucinations?

While no AI system can eliminate hallucinations entirely, RAG 2.0 drastically reduces them by grounding outputs in verified data. By using retrieval-based evidence, it minimizes guesswork and increases factual accuracy.

3. Can RAG 2.0 be used in enterprise environments?

Yes. RAG 2.0 is designed for large-scale enterprise use. It can search millions of internal documents, knowledge bases, and reports while delivering grounded answers suited for business operations, customer support, analytics, and compliance-heavy industries.

4. How does RAG 2.0 stay up-to-date with new information?

RAG 2.0 retrieves information at the time of the query, so it draws on the latest sources available in its index. Unlike traditional models that require retraining, RAG 2.0 stays current through dynamic retrieval, provided the underlying sources are kept up to date.

5. Where is RAG 2.0 most useful in real-world applications?

RAG 2.0 is widely used in customer support, enterprise search, healthcare research, legal document analysis, education tools, and technical troubleshooting. Any domain that relies on accurate, up-to-date information benefits from RAG 2.0’s grounded approach.