Neuro-Symbolic AI is a hybrid approach to artificial intelligence that combines the learning capabilities of neural networks (Deep Learning) with the reasoning power of symbolic logic. While neural networks excel at recognizing patterns in unstructured data like images and text, they often operate as “black boxes” that cannot explain their decisions. Symbolic AI, conversely, uses clear, human-readable rules but struggles with messy real-world data. By fusing these two paradigms, Neuro-Symbolic AI aims to build systems that are both accurate and transparent, offering the “best of both worlds”: the ability to learn from data and the capacity to reason, explain, and adhere to logical constraints. This explainability is widely seen as a missing link for trusting AI in high-stakes fields like healthcare, finance, and autonomous driving.

The “Black Box” Problem: Why Deep Learning Isn’t Enough

For the past decade, Deep Learning (DL) has been the undisputed king of AI. It powers the facial recognition on your phone, the recommendations on your streaming service, and the generative text of chatbots. However, despite its brilliance, Deep Learning suffers from a critical flaw: opacity.

Imagine a Deep Learning model as a brilliant but silent savant. If you show it an X-ray, it might instantly detect a rare disease with 99% accuracy. But if a doctor asks, “Why do you think it’s cancer?”, the model cannot answer. It doesn’t “know” medical rules; it only knows that a specific arrangement of pixels mathematically correlates with a label in its training data.

This “Black Box” nature creates three massive hurdles for widespread AI adoption:

- Lack of Trust: In law or medicine, a decision must come with a justification. “The model said so” is not an acceptable answer to a patient, a judge, or a regulator.

- Data Hunger: DL models require millions of examples to learn simple concepts that a human child learns in seconds.

- Fragility: Neural networks can be easily fooled. A few altered pixels can make a DL model mistake a stop sign for a speed limit sign—a potentially fatal error in autonomous driving.

We don’t just need AI that works; we need AI that understands. That is where Neuro-Symbolic AI enters the frame.

What Is Neuro-Symbolic AI? The Hybrid Engine

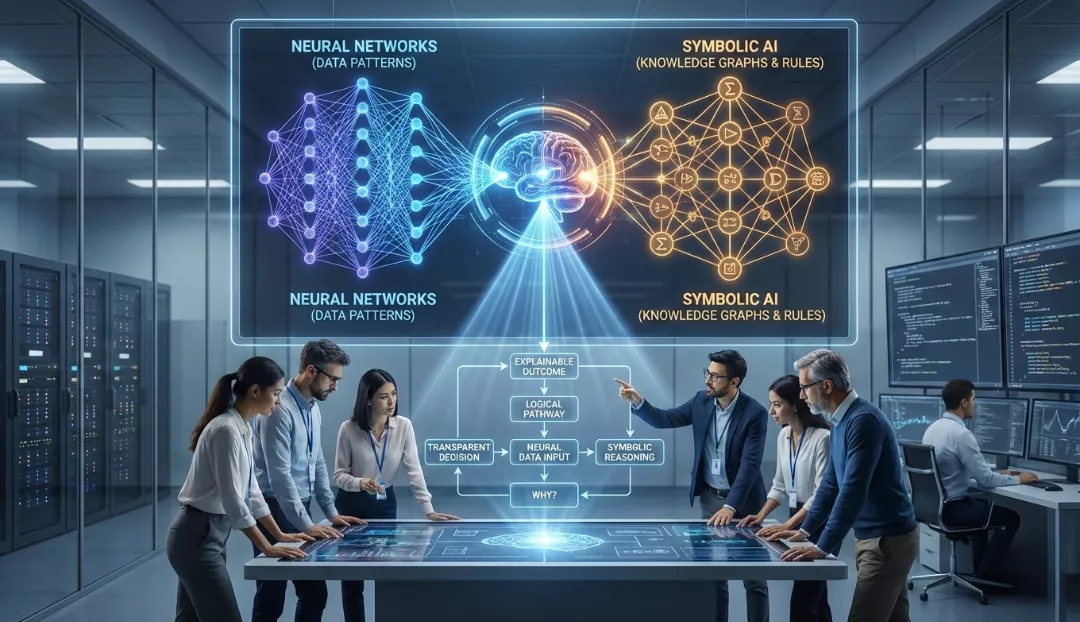

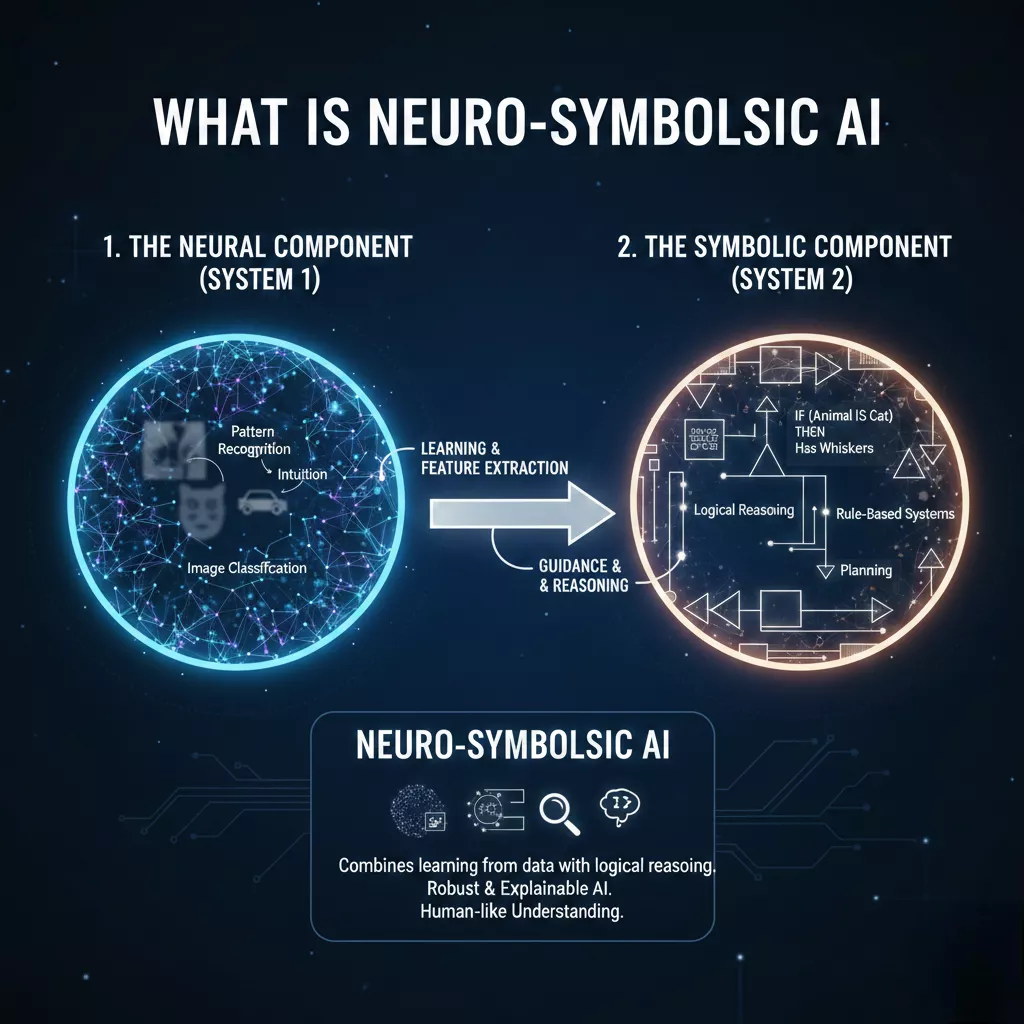

Neuro-Symbolic AI (often abbreviated as NeSy) is not a single algorithm, but an architectural philosophy. It integrates two distinct forms of intelligence:

1. The Neural Component (System 1)

Think of this as the AI’s “eyes and ears.” It uses deep neural networks to perceive the world. It handles noisy, unstructured data—pixels in a video, sound waves in audio, or syntax in raw text—and converts them into symbolic representations. It is fast, intuitive, and pattern-based.

2. The Symbolic Component (System 2)

Think of this as the AI’s “brain.” It uses logic, knowledge graphs, and rules to reason about the symbols provided by the neural network. It performs deduction, planning, and verification. It is slow, deliberate, and logic-based.

How They Work Together

In a Neuro-Symbolic system, the neural network might look at a picture and identify objects: “There is a Red Cube and a Blue Cylinder.” It passes these symbols to the logic component, which can then answer complex questions like, “Is the Red Cube to the left of the Blue Cylinder?” based on spatial reasoning rules, rather than just guessing.
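The hand-off described above can be sketched in a few lines of Python. This is a minimal illustration, not a real perception stack: `perceive` is a hypothetical stub standing in for an object detector, and the coordinates it returns are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectedObject:
    color: str
    shape: str
    x: float  # horizontal centre in image coordinates, 0.0 = left edge


def perceive(image) -> list[DetectedObject]:
    """Stand-in for the neural component (hypothetical stub).

    A real system would run an object detector on `image`; here we
    return fixed symbols so the reasoning step can be shown in isolation.
    """
    return [
        DetectedObject("red", "cube", x=0.2),
        DetectedObject("blue", "cylinder", x=0.7),
    ]


def find(objects, color, shape):
    """Look up exactly one object by its symbolic attributes."""
    matches = [o for o in objects if o.color == color and o.shape == shape]
    if len(matches) != 1:
        raise ValueError(f"expected one {color} {shape}, found {len(matches)}")
    return matches[0]


def left_of(a: DetectedObject, b: DetectedObject) -> bool:
    """Symbolic spatial rule: a is left of b if its centre has a smaller x."""
    return a.x < b.x


scene = perceive(image=None)
answer = left_of(find(scene, "red", "cube"), find(scene, "blue", "cylinder"))
print(answer)  # True
```

The point of the split is that the question is answered by an explicit rule over symbols, so the same `left_of` rule works for any pair of objects the detector can name.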

This architecture mimics human cognition. As popularized by psychologist Daniel Kahneman in Thinking, Fast and Slow, humans have System 1 (fast, instinctive thinking) and System 2 (slow, logical reasoning). Neuro-Symbolic AI attempts to replicate this duality in silicon.

Key Benefits: Why It Is the Future

The shift toward Neuro-Symbolic AI is driven by necessity. Pure connectionism (Deep Learning) is hitting a ceiling in terms of return on compute and data. Here is why NeSy is the next evolutionary step:

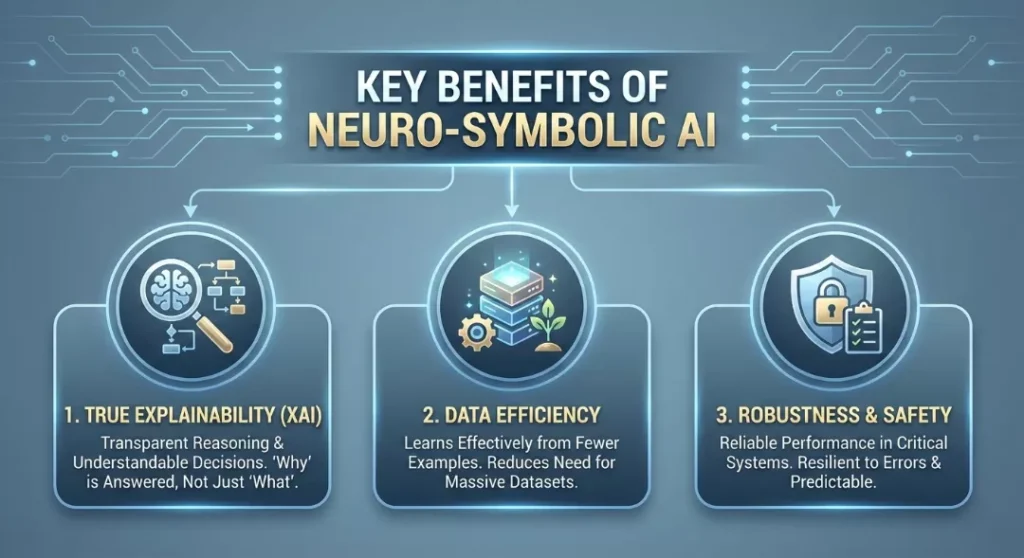

1. True Explainability (XAI)

Unlike “post-hoc” explainability methods (which try to guess why a neural net made a decision after the fact), Neuro-Symbolic AI is interpretable by design. The reasoning process is explicit. If a NeSy system denies a loan, it can point to the exact logical rule that was violated (e.g., “Applicant debt-to-income ratio > 40%”), even if the risk assessment of the applicant’s spending habits came from a neural network.
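To make the loan example concrete, here is a small sketch of a decision function that returns the rules it violated along with the verdict. The `Applicant` fields, the `spending_risk` score (assumed to come from a neural model), and the 0.80 risk threshold are all illustrative assumptions; only the 40% debt-to-income rule comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    monthly_debt: float
    monthly_income: float
    spending_risk: float  # assumed output of a neural model, in [0, 1]


# Each rule pairs a human-readable description with a predicate that
# returns True when the rule is violated. The 0.80 threshold is hypothetical.
RULES = [
    ("Applicant debt-to-income ratio > 40%",
     lambda a: a.monthly_debt / a.monthly_income > 0.40),
    ("Neural spending-risk score > 0.80",
     lambda a: a.spending_risk > 0.80),
]


def decide(applicant: Applicant):
    """Return the decision together with every rule that was violated."""
    violated = [desc for desc, is_violated in RULES if is_violated(applicant)]
    return ("denied" if violated else "approved", violated)


decision, reasons = decide(
    Applicant(monthly_debt=2250, monthly_income=5000, spending_risk=0.35)
)
print(decision, reasons)
# denied ['Applicant debt-to-income ratio > 40%']
```

Because the explanation is the list of violated rules itself, it cannot drift away from what the system actually did, which is the difference from a post-hoc explanation.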

2. Data Efficiency

Deep Learning models are notoriously data-inefficient. They need to see thousands of cats to recognize a cat. A Neuro-Symbolic system can be “taught” a concept via a rule. If you tell the system “A zebra is like a horse but with stripes,” it doesn’t need 10,000 images of zebras to understand. It combines its visual representation of a “horse” with the logical concept of “stripes.”
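One simple way to picture the zebra rule is as a logical AND over the outputs of two existing detectors. The sketch below assumes a horse-likeness score and a stripes score are already available (the numbers are invented) and combines them with a product, one common choice of fuzzy conjunction. It is an illustration of the idea, not a claim about how any particular system implements it.

```python
def fuzzy_and(a: float, b: float) -> float:
    """Product t-norm: a soft version of logical AND for scores in [0, 1]."""
    return a * b


def zebra_score(horse_like: float, striped: float) -> float:
    """Rule: zebra(x) := horse_like(x) AND striped(x)."""
    return fuzzy_and(horse_like, striped)


# Hypothetical detector outputs: (horse_like, striped) for three photos.
detections = {
    "photo_a": (0.90, 0.95),  # horse-shaped and striped
    "photo_b": (0.92, 0.05),  # an ordinary horse
    "photo_c": (0.10, 0.90),  # a striped shirt
}

scores = {name: zebra_score(h, s) for name, (h, s) in detections.items()}
best = max(scores, key=scores.get)
print(best)  # photo_a
```

No zebra images were needed to define `zebra_score`; the new concept is built entirely from concepts the system already recognizes.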

3. Robustness and Safety

You can hard-code safety constraints into a Neuro-Symbolic system. For a self-driving car, you can embed a symbolic rule: “Never cross a double yellow line if an oncoming vehicle is detected.” A pure neural network might learn this probabilistically (and might fail, say, 0.1% of the time), but a symbolic module enforces it as a hard constraint every time its condition is detected, which can substantially increase safety.
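A common way to wire this up is a “shield” that sits between the learned policy and the actuators. The sketch below is a simplified assumption of that pattern: `neural_policy` is a hypothetical stub, and the observation keys and action names are invented for the example.

```python
def neural_policy(observation: dict) -> str:
    """Stand-in for a learned driving policy (hypothetical stub).

    Here it always proposes to overtake, to show the override in action.
    """
    return "overtake"


def violates_safety_rule(action: str, observation: dict) -> bool:
    """Never cross a double yellow line if an oncoming vehicle is detected."""
    return (
        action == "overtake"
        and observation["double_yellow_line"]
        and observation["oncoming_vehicle"]
    )


def safe_action(observation: dict, fallback: str = "stay_in_lane") -> str:
    """Let the policy propose, but let the symbolic rule have the final say."""
    proposed = neural_policy(observation)
    if violates_safety_rule(proposed, observation):
        return fallback
    return proposed


print(safe_action({"double_yellow_line": True, "oncoming_vehicle": True}))
# stay_in_lane
print(safe_action({"double_yellow_line": True, "oncoming_vehicle": False}))
# overtake
```

Note the limit of the guarantee: the rule is only as reliable as the perception that fills in `oncoming_vehicle`. The symbolic layer never breaks the rule on the facts it is given, but it cannot fix a missed detection.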

Comparative Analysis: Deep Learning vs. Symbolic vs. Neuro-Symbolic

To truly understand the leap forward, we must compare the three approaches side-by-side.

| Feature | Deep Learning (Neural) | Symbolic AI (GOFAI) | Neuro-Symbolic AI |
| --- | --- | --- | --- |
| Core Mechanism | Pattern recognition, statistical correlation. | Logic, rules, knowledge graphs. | Hybrid: Neural perception + Logical reasoning. |
| Data Requirement | Massive (Big Data). | Low (requires manual knowledge entry). | Moderate (learns with less data). |
| Explainability | Low (Black Box). | High (Clear decision trees). | High (Traceable reasoning). |
| Generalization | Poor (struggles outside training distribution). | Poor (cannot handle noise/unseen data). | Strong (logic applies universally). |
| Reasoning | Intuitive/Probabilistic. | Deductive/Deterministic. | Combined (Probabilistic Logic). |
| Best Use Case | Image recognition, NLP, Gaming. | Math solvers, Tax software. | Healthcare, Robotics, Legal Tech. |

Real-World Applications and Market Impact

The Neuro-Symbolic AI market is poised for explosive growth. According to 2025/2026 market analysis, the sector is projected to reach over $6.3 billion by 2030, growing at a CAGR of roughly 31%.

1. Healthcare: The High-Stakes Frontier

In 2025, we saw the first major deployments of NeSy systems in clinical settings. Pure DL models often hallucinate in medical reports. Neuro-Symbolic systems use a neural network to scan medical imagery (CT scans, MRIs) and a symbolic reasoning engine to verify the findings against medical ontologies (like SNOMED CT).

- Example: A system detects a “mass” in a lung scan (Neural). The symbolic layer checks the patient’s history and medical rules: “If the patient is a non-smoker under 30 with no family history, probability of malignancy is lower; check for infection markers first.”

- Impact: Early pilots have shown a reduction in diagnostic errors by up to 35% compared to pure DL models.

2. Finance: Compliance and Fraud Detection

Financial institutions are under heavy regulatory pressure to explain their algorithms. “The computer said no” is not acceptable to regulators investigating bias.

- Application: NeSy systems are used for Anti-Money Laundering (AML). Neural networks spot complex, non-linear patterns of transaction fraud that humans miss. The symbolic layer then filters these flags through regulatory rules to ensure the “fraud” isn’t just a wealthy client buying a house (a false positive).

- Result: This reduces the manual workload for compliance officers and provides an audit trail for every flagged transaction.

3. Robotics and Autonomous Systems

Robots need to interact with the physical world (Neural) but follow strict safety protocols (Symbolic).

- Example: A warehouse robot uses vision to navigate (Neural). However, it operates under symbolic constraints: “Do not enter Zone B if humans are present.” Even if the neural network “thinks” the path is clear, the symbolic override prevents the robot from entering if it detects a human signal, preventing accidents.

The Technical “How”: Recent Breakthroughs (2024-2026)

Several technical frameworks have emerged that make this integration possible. It is no longer just theory; it is code.

Logic Tensor Networks (LTN)

LTNs allow logic to be part of the loss function in a neural network. During training, the network is penalized not just for predicting the wrong label, but also for violating a logical rule. This pushes the network toward representations that are consistent with known logic.
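The core idea, a loss with a logic term, can be shown without any framework. The sketch below is not the API of an LTN library. It is a simplified, plain-Python assumption of the principle: the rule “cat implies animal” is scored with the Reichenbach fuzzy implication, and the degree to which it is violated is added to an ordinary cross-entropy loss. The function names and the `rule_weight` parameter are hypothetical.

```python
import math


def binary_cross_entropy(p: float, label: int) -> float:
    """Standard data loss for one binary prediction."""
    eps = 1e-12  # avoids log(0)
    return -(label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps))


def implies(a: float, b: float) -> float:
    """Reichenbach fuzzy implication: truth degree of (a -> b) in [0, 1]."""
    return 1.0 - a + a * b


def total_loss(p_cat: float, p_animal: float, cat_label: int,
               rule_weight: float = 1.0) -> float:
    """Data loss plus a penalty for violating the rule cat(x) -> animal(x)."""
    data_loss = binary_cross_entropy(p_cat, cat_label)
    rule_violation = 1.0 - implies(p_cat, p_animal)
    return data_loss + rule_weight * rule_violation


# Same label accuracy, but the second prediction says "cat, yet not an animal".
consistent = total_loss(p_cat=0.9, p_animal=0.95, cat_label=1)
inconsistent = total_loss(p_cat=0.9, p_animal=0.10, cat_label=1)
print(inconsistent > consistent)  # True
```

In a real training loop the same expression would be written with differentiable tensors so that gradients flow through the logic term, but the shape of the loss is the same.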

Neural Theorem Provers

These are systems designed to prove mathematical or logical theorems using neural guidance. They use the intuition of a neural net to select which proof path to explore, but the rigor of a symbolic system to verify the proof. This approach is making inroads in the formal verification of software.
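The division of labor can be illustrated with a toy prover over simple if-then rules. Everything here is an assumption made for the example: the rule set, the `GUIDANCE` scores (which stand in for a trained network's preferences), and the greedy search strategy. What it shows is the pattern: the scores decide what to try next, while an independent checker decides whether the result counts as a proof.

```python
# Each rule is (premises, conclusion): if all premises are known, derive the conclusion.
RULES = [
    (frozenset({"A"}), "B"),
    (frozenset({"A"}), "X"),
    (frozenset({"B"}), "C"),
    (frozenset({"X"}), "Y"),
    (frozenset({"B", "C"}), "GOAL"),
]

# Hypothetical scores a trained network might assign to each conclusion.
GUIDANCE = {"B": 0.9, "C": 0.8, "GOAL": 1.0, "X": 0.2, "Y": 0.1}


def search(facts, goal):
    """Greedy forward chaining: always apply the highest-scored usable rule."""
    known, proof = set(facts), []
    while goal not in known:
        usable = [r for r in RULES if r[0] <= known and r[1] not in known]
        if not usable:
            return None  # goal is not derivable from these facts
        rule = max(usable, key=lambda r: GUIDANCE[r[1]])
        known.add(rule[1])
        proof.append(rule)
    return proof


def verify(facts, goal, proof) -> bool:
    """Symbolic check: every step must be a real rule with satisfied premises."""
    known = set(facts)
    for premises, conclusion in proof:
        if (premises, conclusion) not in RULES or not premises <= known:
            return False
        known.add(conclusion)
    return goal in known


proof = search({"A"}, "GOAL")
print(len(proof), verify({"A"}, "GOAL", proof))  # 3 True
```

Bad guidance would only make the search slower here, since the dead-end rules for `X` and `Y` would be tried first. It could never make `verify` accept an invalid proof, which is why the neural part can be trusted with the guessing.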

Concept Bottleneck Models

This architecture forces the neural network to predict high-level concepts (e.g., “Wing,” “Beak,” “Red”) before making a final prediction (“Cardinal”). The final decision is computed only from these concepts, typically by a simple, interpretable layer such as a linear combination. If the model makes a mistake, a human can intervene and say, “No, that’s not a beak,” and the final prediction is recomputed from the corrected concepts without retraining, something a standard end-to-end network does not support.
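Here is a minimal sketch of that intervention step. The concept scores stand in for the output of a neural concept predictor, and the class names and weights of the final linear layer are invented for illustration.

```python
CONCEPTS = ["wing", "beak", "red"]

# Hypothetical weights of the final linear layer, one row per class.
WEIGHTS = {
    "cardinal":   {"wing": 1.0,  "beak": 1.0,  "red": 1.0},
    "fire_truck": {"wing": -2.0, "beak": -2.0, "red": 2.0},
}


def predict_label(concepts: dict) -> str:
    """Final prediction: a linear score per class over the concept values."""
    scores = {
        label: sum(w[c] * concepts[c] for c in CONCEPTS)
        for label, w in WEIGHTS.items()
    }
    return max(scores, key=scores.get)


# Stand-in for the neural concept predictor's output on one image.
predicted_concepts = {"wing": 0.8, "beak": 0.9, "red": 0.95}
before = predict_label(predicted_concepts)

# A human inspects the concepts and corrects two of them.
corrected_concepts = {**predicted_concepts, "wing": 0.0, "beak": 0.0}
after = predict_label(corrected_concepts)

print(before, "->", after)  # cardinal -> fire_truck
```

The correction changes the output immediately because the label depends on nothing except the concept values. No weights are updated.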

Challenges to Adoption

Despite the promise, Neuro-Symbolic AI is not a magic wand. It faces significant hurdles:

- The “Grounding” Problem: How do you map a fuzzy pixel representation of a “chair” to the distinct logical symbol of a “chair”? Bridging the continuous world of vectors and the discrete world of symbols is mathematically difficult.

- Computational Cost: Running two systems (neural and symbolic) simultaneously can be computationally expensive. Symbolic search spaces can grow exponentially (combinatorial explosion), making them slow without optimization.

- Talent Gap: The industry has spent 10 years training data scientists in Deep Learning. Few possess the dual expertise in both neural networks and formal logic/knowledge representation needed to build NeSy systems.
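To see why grounding is awkward, consider the simplest possible bridge from vectors to symbols: take the classifier's most probable class and emit it as a symbol only if the model is confident enough. The sketch below assumes raw class scores (logits) from some vision model. The symbol list and the 0.7 threshold are arbitrary choices for the example.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def ground(logits, symbols, threshold=0.7):
    """Map a continuous score vector to one discrete symbol, or None."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return symbols[best] if probs[best] >= threshold else None


SYMBOLS = ["chair", "stool", "table"]

print(ground([4.0, 1.0, 0.5], SYMBOLS))  # chair
print(ground([1.2, 1.0, 0.9], SYMBOLS))  # None
```

The hard cut-off is exactly where the difficulty lives: it throws away uncertainty the reasoner might need, and it is not differentiable, so errors in the logic layer cannot easily be used to improve the perception layer.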

Future Outlook: The Road to AGI?

Many experts, including AI pioneers like Gary Marcus and researchers at IBM and DeepMind, believe Neuro-Symbolic AI is the necessary path toward Artificial General Intelligence (AGI).

Current Large Language Models (LLMs), such as the latest GPT-class models, are impressive, but they still hallucinate. Critics describe them as “stochastic parrots”: statistical pattern matchers rather than logical thinkers. To achieve an AI that can reason, plan, and understand cause-and-effect like a human, we cannot rely on bigger datasets alone. We need the structure of logic.

Trends to Watch in 2026:

- Neuro-Symbolic Agents: We are moving from chatbots to “Agents”: AI that can take action on your behalf (e.g., “Plan my travel and book the tickets”). These require the planning capabilities of symbolic AI to ensure actions are executed in the correct sequence.

- Small Data Revolution: As the internet runs out of high-quality training data, the ability of NeSy systems to learn from fewer examples will become their killer feature.
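The sequencing requirement for agents is a classic symbolic problem. As a small sketch, the travel task can be written as actions with preconditions and ordered with a topological sort from Python's standard library (`graphlib`, available from Python 3.9). The action names and dependencies are invented for the example.

```python
from graphlib import TopologicalSorter

# Each action maps to the set of actions that must finish before it can run.
PRECONDITIONS = {
    "search_flights": set(),
    "book_flight": {"search_flights"},
    "book_hotel": {"book_flight"},
    "send_itinerary": {"book_flight", "book_hotel"},
}

# static_order() yields every action after all of its preconditions.
plan = list(TopologicalSorter(PRECONDITIONS).static_order())
print(plan)
# ['search_flights', 'book_flight', 'book_hotel', 'send_itinerary']
```

If the preconditions contradict each other (for example, two actions that each require the other), `static_order()` raises `graphlib.CycleError` instead of producing a plan, so an impossible task is rejected before any ticket is booked.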

Conclusion

Neuro-Symbolic AI represents the maturation of Artificial Intelligence. We are moving past the “wild west” era of black-box Deep Learning, where we accepted high accuracy at the cost of understanding. The future belongs to systems that are accountable, transparent, and logical.

By marrying the learning speed of neural networks with the reasoning power of logic, Neuro-Symbolic AI is not just making machines smarter; it is making them trustworthy. For a doctor relying on an AI diagnosis, a bank manager approving a loan, or a passenger in a self-driving car, that difference changes everything.