Artificial Intelligence is becoming a part of almost everything we do, from how we shop to how we receive healthcare. But as AI systems grow more powerful, the biggest question we must answer is simple: Can we trust AI to be fair, safe, and aligned with human values? Ethics in AI—covering bias, privacy, and responsible development—is essential because it ensures that technology supports people rather than harming them. Without ethical foundations, even the smartest AI can produce dangerous or unfair outcomes. This article explains why ethical AI matters and how these principles shape a safer digital future.

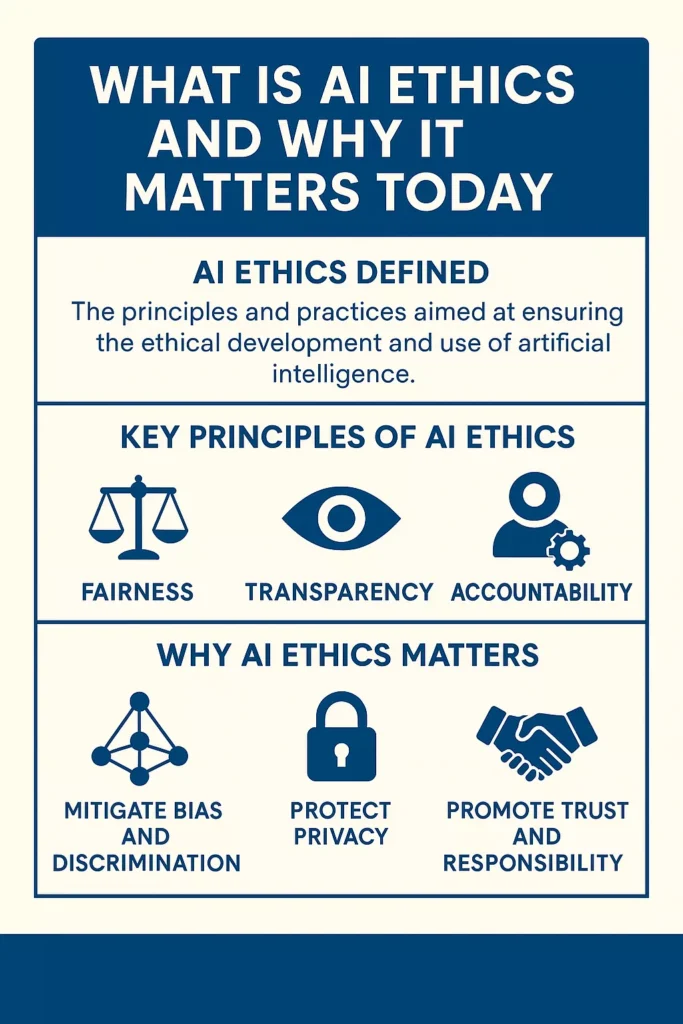

What Is AI Ethics and Why It Matters Today

Artificial Intelligence ethics refers to the principles and guidelines that govern how AI is designed, trained, deployed, and monitored. These principles ensure AI systems remain:

- Fair

- Transparent

- Privacy-preserving

- Safe

- Accountable

- Beneficial to society

As AI integrates into hiring, policing, healthcare, education, finance, and everyday online experiences, ethical gaps can translate into real-world harm. AI that recommends who gets a loan, who may receive medical care, or who police should investigate must work fairly and responsibly.

Why AI Ethics Is No Longer Optional

- AI influences decisions about millions of people daily.

- Bad data or flawed design can reinforce stereotypes.

- User data is becoming more sensitive and abundant.

- Governments are introducing strict AI regulations.

- Companies risk losing trust, reputation, and revenue without ethical practices.

Ethics is now a necessity—not a luxury—for sustainable AI innovation.

AI and Privacy: Why Data Protection Is at the Heart of Ethical AI

AI systems rely on data to function—and often, that data comes from real people. Ethical AI development must address how this data is collected, processed, stored, shared, and deleted.

Why Privacy in AI Is a Growing Concern

Today’s AI models may access:

- Personal information

- Browsing history

- Medical data

- Facial images

- Location data

- Conversations from apps or chatbots

Misuse, over-collection, or weak security can lead to serious privacy risks.

Top Privacy Risks in AI

Artificial Intelligence has transformed how we interact with technology, but with this power comes a serious challenge: protecting user privacy. AI models learn from data, some of it extremely sensitive, which introduces multiple risks if handled irresponsibly. The major privacy threats AI poses today are described below.

1. Excessive Data Collection (The “More Than Needed” Problem)

Many AI systems collect far more data than required to perform their tasks. This often happens because developers believe “more data = better performance,” leading to overcollection.

Why This Is a Problem

- It increases the chances of sensitive information being exposed.

- Companies can misuse or repurpose the data later without consent.

- It becomes difficult for users to control what is stored about them.

Real-World Example

A fitness app collecting location data every second—even when you’re not using it—just to improve its AI recommendations.

User Impact

You lose control over what companies know about your life, habits, and daily movements.
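The opposite of over-collection is data minimization. As a minimal sketch, the code below assumes a hypothetical fitness app whose recommendations only need coarse location at a low sampling rate. The `LocationSample` fields, the 60-second interval, and the two-decimal rounding are illustrative assumptions, not values taken from any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocationSample:
    timestamp: int  # seconds since the workout started (hypothetical field)
    lat: float
    lon: float


def minimize(samples, min_interval_s=60, decimals=2):
    """Keep at most one sample per `min_interval_s` and coarsen coordinates.

    Rounding to 2 decimal places keeps roughly kilometre-level precision,
    which is an assumed requirement for this illustration.
    """
    kept = []
    last_kept = None
    for s in sorted(samples, key=lambda x: x.timestamp):
        if last_kept is not None and s.timestamp - last_kept < min_interval_s:
            continue  # too soon after the last kept sample: drop it
        kept.append(
            LocationSample(s.timestamp, round(s.lat, decimals), round(s.lon, decimals))
        )
        last_kept = s.timestamp
    return kept


# One reading per second for five minutes (300 samples).
raw = [LocationSample(t, 12.971598 + t * 1e-6, 77.594566) for t in range(300)]
reduced = minimize(raw)
print(len(raw), "->", len(reduced))  # 300 -> 5
```

Dropping and coarsening data before it is stored means there is simply less to leak, repurpose, or hand over later.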

2. Lack of Transparency About Data Usage

AI systems often operate like black boxes. Users upload data, but they rarely know:

- What data is collected

- Who has access

- How long it is stored

- Whether it is shared with third parties

- If AI models continue using it after you delete your account

Why It’s Dangerous

Without clarity, users cannot make informed decisions about their privacy.

User Impact

You may unknowingly consent to your photos, voice, or personal messages being used to train large-scale AI models.

3. Data Breaches & Cybersecurity Weaknesses

AI systems often depend on very large datasets, and those datasets are attractive targets for attackers. The larger the dataset, the bigger the target.

Risks Include:

- Identity theft

- Exposure of medical or financial data

- Blackmail using personal content

- Selling of personal information on dark markets

Why AI Increases Breach Risk

AI models may require centralized data storage, which becomes a “single point of failure.”

User Impact

Your personal information could end up in the hands of criminals even though you only ever shared it with a service you trusted.

4. Facial Recognition & AI Surveillance

One of the most controversial uses of AI is facial recognition. When combined with public cameras, drones, and social media images, AI can track individuals without consent.

Privacy Threats

- Mass surveillance

- Tracking movements, habits, and relationships

- Identifying people in private locations

- Government misuse

- Unauthorized cross-matching (e.g., using your Instagram photo to identify you on the street)

User Impact

You lose the right to move freely without being watched, analyzed, or recorded.

5. Data Retention Without Consent (AI Never “Forgets”)

Some AI systems continue holding user data indefinitely—even when you delete your account or request removal.

Why It Happens

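Once data is used to train a model, its influence is spread across the model’s parameters rather than stored as a separate record that can be deleted. Removing it may require retraining the model or applying specialized unlearning techniques.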

Risks

- Old or deleted data may resurface

- Companies may still profit from your information

- Sensitive content remains permanently stored inside AI models

User Impact

You lose control over your digital footprint forever.

6. Model Inversion Attacks (Reverse Engineering Your Data)

This is one of the more technically sophisticated privacy risks.

Attackers can sometimes reverse-engineer an AI model, for example by querying it repeatedly, to extract:

- Training images

- Personal text

- Voice samples

- Medical records

- Identifiable patterns

Why It’s Dangerous

Even if the original dataset is secure, the AI model itself may leak information.

User Impact

Your private data can be reconstructed from the model – even without hacking the database.

7. AI Predicting Personal Information You Never Provided

AI can infer things about you that you never explicitly shared, such as:

- Your age

- Political views

- Relationships

- Health conditions

- Socioeconomic status

- Emotions

- Lifestyle habits

How This Happens

AI detects patterns across your behavior, language, browsing habits, and interactions.

Risks

- Unwanted profiling

- Targeted advertising

- Manipulation

- Psychological exploitation

User Impact

AI may infer more about you than you have knowingly shared with anyone, and those inferences can be acted on even when they are wrong.

8. Data Sharing With Third Parties (Without Clear Permission)

Companies often share or sell data to:

- Advertising agencies

- Business partners

- Data brokers

- Analytics companies

- AI training labs

Why It’s Risky

Once your data leaves the first company, you lose control completely.

User Impact

Your personal information circulates across multiple organizations without your knowledge.

9. Shadow Data (You Don’t Know It Exists)

Shadow data refers to data collected without your awareness or stored in systems you never interacted with.

Examples include:

- AI training datasets scraped from the internet

- Deleted data stored in backups

- Metadata from your device

- Behavioral analytics

Why It Matters

You can’t control or delete what you don’t know exists.

User Impact

Unknown data trails increase the risk of misuse or exposure.

Responsible AI: Building Technology That Puts People First

As Artificial Intelligence becomes more integrated into everyday life—from banking and healthcare to hiring and education—the need for Responsible AI becomes more urgent. Responsible AI means building, training, and deploying systems that align with human values, respect ethical boundaries, and prioritize fairness, safety, and transparency.

Core Principles of Responsible AI

Below are the seven foundational principles that guide Responsible AI. Each principle ensures that AI systems operate ethically, safely, and inclusively.

A. Fairness (AI Should Treat Everyone Equally)

AI must make decisions without discrimination, regardless of gender, age, race, income group, disability, religion, or geographic region.

Why Fairness Matters

Without fairness checks, AI can unintentionally:

- Reject qualified job applicants

- Misdiagnose certain patient groups

- Deny loans to those from minority backgrounds

- Misidentify individuals in facial recognition

How Fairness Is Ensured

- Using diverse, representative datasets

- Identifying and mitigating historical biases in data

- Testing models for discriminatory patterns

- Involving people from various communities during development
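Testing a model for discriminatory patterns can start with something as simple as comparing outcome rates across groups. The sketch below computes two common summary metrics, the demographic parity difference and the disparate impact ratio, on toy data. The group labels and decisions are invented for illustration, and a real audit would look at more metrics than these two.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity
    return min(rates.values()) / highest


# Toy data: 1 = approved, 0 = rejected; groups "A" and "B" are hypothetical.
decisions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
print(rates)  # {'A': 0.8, 'B': 0.2}
print(round(demographic_parity_difference(rates), 2))  # 0.6
print(round(disparate_impact_ratio(rates), 2))  # 0.25
```

A ratio below 0.8 is sometimes treated as a warning sign (the "four-fifths" rule of thumb from U.S. employment guidance), but an appropriate threshold depends on the domain and the applicable law.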

Fair AI is not just ethical—it’s essential for building public trust.

B. Transparency (Make AI Understandable, Not a Black Box)

Transparency means users should be able to understand:

- What data AI uses

- How it processes information

- Why it made a decision

Why Transparency Matters

When users can’t understand how an AI system reaches its decisions, trust disappears. Lack of clarity can lead to:

- Confusion

- Distrust

- Legal challenges

- Damaged reputation

Ways to Build Transparency

- Explainable AI (XAI) tools

- Clear documentation and model cards

- Easy-to-understand user summaries

- Disclosure when AI—not humans—is making a decision
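For a simple linear scoring model, one basic form of explanation is to report how much each input pushed the score up or down. The sketch below assumes a hypothetical loan-scoring model with made-up weights and feature names. More complex models usually need dedicated explainability tooling, which this example does not attempt to reproduce.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and per-feature contributions, largest impact first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and applicant data, chosen only for illustration.
weights = {"income_k": 0.02, "late_payments": -0.5, "years_employed": 0.1}
bias = -1.0
applicant = {"income_k": 60, "late_payments": 3, "years_employed": 4}

score, ranked = explain_linear_score(weights, bias, applicant)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    direction = "raised" if contribution > 0 else "lowered"
    print(f"{name} {direction} the score by {abs(contribution):.2f}")
```

Here the late payments have the largest effect on the outcome, which is the kind of plain statement a user-facing summary could surface.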

Transparent systems empower users instead of confusing them.

C. Accountability (Who Is Responsible When Something Goes Wrong?)

For AI to be truly responsible, someone must be answerable for its decisions. Accountability ensures:

- Developers build carefully

- Companies don’t hide behind algorithms

- Regulators enforce safe AI practices

Forms of Accountability

- Legal responsibility for harmful outcomes

- Ethical guidelines within organizations

- External audits

- Clear ownership of AI decisions

Why Accountability Matters

Without accountability, harmful AI decisions can go unchallenged—and victims may have no path to justice.

D. Privacy Protection (Guarding User Data Like a Treasure)

AI systems often learn from personal data—your face, voice, behavior, location, conversations, and more. Responsible AI must protect this data at every stage.

Privacy Protection Includes:

- Collecting only necessary information

- Using secure storage and encryption

- Allowing users to control, access, or delete their data

- Ensuring data isn’t misused or sold

- Following privacy laws (GDPR, DPDP Act, CCPA, etc.)

Why It Matters

Without proper safeguards, AI can turn into a tool of surveillance or data exploitation.

Responsible AI respects user privacy as a core human right.

E. Safety & Reliability (AI Should Work Predictably and Safely)

AI must perform accurately and consistently, especially in high-risk areas like:

- Healthcare

- Transportation (self-driving cars)

- Banking

- Public safety

- Legal decisions

What Safety & Reliability Mean

- AI systems shouldn’t behave unpredictably

- They must handle real-world challenges

- They should have fail-safes to prevent harm

- They must be rigorously tested before deployment
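One common fail-safe is to stop the system from acting on its own when it is unsure. The sketch below routes low-confidence predictions to a human reviewer. The 0.9 threshold, the class labels, and the `Decision` structure are assumptions made for illustration, and a real system would calibrate the threshold against measured error rates.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_human_review: bool


def decide_with_failsafe(probabilities, threshold=0.9):
    """Pick the most likely label, flagging it for review if confidence is low."""
    if not probabilities:
        raise ValueError("probabilities must not be empty")
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    return Decision(label, confidence, needs_human_review=confidence < threshold)


# Hypothetical model outputs for two cases.
confident = decide_with_failsafe({"benign": 0.97, "malignant": 0.03})
uncertain = decide_with_failsafe({"benign": 0.60, "malignant": 0.40})
print(confident.needs_human_review)  # False
print(uncertain.needs_human_review)  # True
```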

Why It’s Critical

A small error in a medical AI system or automotive AI system could cost lives. Reliability ensures AI enhances human capabilities instead of endangering them.

F. Inclusiveness (AI for Everyone, Not Just a Few)

AI must work fairly for all types of users, including:

- Diverse ethnic groups

- People with disabilities

- Different age groups

- Non-native language speakers

- Underrepresented communities

What Inclusiveness Looks Like

- Designing accessible interfaces

- Training AI on global, multicultural data

- Avoiding stereotypes in datasets

- Testing AI on diverse user groups

Inclusiveness ensures no one is left behind in the AI-driven future.

G. Sustainability (AI Should Help the Planet, Not Harm It)

Training and running large AI systems can require substantial computing power, and with it substantial energy. Responsible AI includes designing systems that are environmentally conscious.

Sustainability Includes:

- Using efficient algorithms

- Reducing computational waste

- Creating low-power AI models

- Optimizing data centers

- Supporting long-term societal wellbeing

Why It Matters

AI should improve human life without harming the planet or draining global resources.

Why Responsible AI Matters More Than Ever

1. AI Now Drives High-Impact Decisions

Artificial intelligence is increasingly shaping critical decisions across sectors such as banking, policing, healthcare, education, government services, and hiring. Because these areas directly affect people’s lives, ensuring fairness, accuracy, and neutrality is essential.

2. Public Trust Depends on Ethical AI

If people believe AI is biased or unsafe, they simply won’t use it. Responsible AI practices build trust, making individuals and organizations confident in adopting AI-driven solutions.

3. Regulations Are Rapidly Expanding

Governments worldwide are introducing AI and data-protection rules, including the EU AI Act, the U.S. Blueprint for an AI Bill of Rights (non-binding guidance), India’s Digital Personal Data Protection (DPDP) Act, and emerging global AI safety standards. Businesses must comply with the binding frameworks that apply to them to avoid fines, legal issues, and operational disruptions.

4. Long-Term Reputation Is at Stake

A single biased, unsafe, or poorly designed AI system can permanently damage an organization’s brand. Responsible AI ensures credibility and protects long-term public perception.

5. Ethical Principles Drive Sustainable Innovation

Innovation flourishes when built on strong, ethical foundations. Responsible AI helps organizations grow by promoting safe experimentation, reducing risks, and avoiding shortcuts that could lead to harm.

Examples of Ethical Challenges in AI

I. Autonomous Vehicles

Self-driving cars raise serious ethical concerns. Who should be held accountable during accidents—the manufacturer, the software developer, or the vehicle owner? Another dilemma is how the AI should prioritize lives in unavoidable crash scenarios.

II. Deepfakes

AI-generated fake videos and audio can spread misinformation, enable fraud, damage reputations, and even threaten political stability.

III. Generative AI Assistants

AI assistants may unintentionally leak sensitive data, generate harmful or misleading responses, or be manipulated into producing inappropriate content.

IV. AI in Healthcare

Biased training data or inaccurate predictions can lead to misdiagnosis, unequal treatment, or life-threatening errors for patients.

V. Social Media Algorithms

Recommendation algorithms can amplify misinformation, reinforce social and political polarization, or influence public opinion in harmful ways.

Practical Strategies for Implementing Ethical AI

Organizations can strengthen AI ethics by applying the following actionable strategies:

- Implement Fairness Checks in Model Training

Use auditing tools to detect disparities related to gender, race, age, or other sensitive attributes.

- Adopt Strong Privacy Frameworks

  - Encryption of sensitive data

  - Data minimization practices

  - Secure and compliant storage

  - Clear, user-friendly consent policies

- Establish an AI Ethics Board

Bring together cross-functional experts who can evaluate risks, ensure accountability, and review systems before deployment.

- Create Explainable AI Systems

Design models that provide clear reasoning behind their decisions, enabling transparency for users and regulators.

- Monitor AI Continuously

Track real-world performance since AI behavior can drift or evolve over time, ensuring ongoing reliability and safety.

- Promote Human–AI Collaboration

Allow humans to validate critical decisions instead of relying entirely on automated outputs.

- Engage Users and Communities

Involve the people who will be impacted by the technology to gather feedback, address concerns, and build trust.
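The continuous-monitoring strategy above can be made concrete with a drift metric. One widely used option is the Population Stability Index (PSI), which compares how a value (such as a model score) is distributed at training time versus in production. The sketch below is a minimal standard-library version. The bin edges, the sample scores, and the small `eps` used to avoid division by zero are all illustrative assumptions.

```python
import bisect
import math


def bin_shares(values, edges):
    """Return the fraction of values falling in each bin defined by `edges`."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        if v < edges[0] or v > edges[-1]:
            continue  # out-of-range values are ignored in this sketch
        i = min(bisect.bisect_right(edges, v) - 1, len(counts) - 1)
        counts[i] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("no values fall inside the bin edges")
    return [c / total for c in counts]


def population_stability_index(expected, actual, edges, eps=1e-6):
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    psi = 0.0
    for e, a in zip(bin_shares(expected, edges), bin_shares(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
train_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
live_scores = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9, 0.95]

print(population_stability_index(train_scores, train_scores, edges))  # 0.0
print(population_stability_index(train_scores, live_scores, edges) > 0.25)  # True
```

Values around 0.1 and 0.25 are often quoted as rule-of-thumb thresholds for moderate and significant shift, but they are conventions rather than guarantees, and a drift alert should trigger investigation rather than an automatic conclusion.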

The Future of Ethical AI: What’s Coming Next?

The future of ethical AI will be shaped by greater transparency, stronger regulations, and privacy-preserving technologies. Explainability tools will become standard, helping users understand how AI makes decisions, while global laws will enforce fairness, safety, and responsible data practices. At the same time, methods like Differential Privacy and Federated Learning will protect sensitive information without limiting innovation.
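To give a sense of how Differential Privacy works in practice, the sketch below applies the Laplace mechanism to a simple counting query: it adds random noise, scaled by a privacy parameter `epsilon`, so that the published count reveals little about any single person. The data, the `epsilon` value, and the function names are assumptions for illustration. Production systems generally rely on vetted libraries rather than hand-written noise code, and Federated Learning is not covered here.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching `predicate`.

    Adding or removing one person changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical dataset: ages of five patients.
ages = [34, 71, 68, 25, 80]
noisy = private_count(ages, lambda age: age >= 65, epsilon=1.0)
print(f"noisy count of patients aged 65+: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy, while a larger one gives more accurate answers with weaker protection. Choosing that trade-off is a policy decision as much as a technical one.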

Organizations will also face mandatory ethical audits to ensure accountability and reduce risks. Human-centered design will guide how systems are built, focusing on enhancing—not replacing—people. As AI grows more advanced, global investment in safety research will increase to address long-term challenges responsibly.

Conclusion: Ethical AI Is the Path to a Safer Digital Future

AI has incredible potential—but only if it is developed with clear ethical foundations. Bias, privacy risks, and irresponsible use can harm individuals and society. By implementing fairness, transparency, privacy protection, and accountability, we can build AI systems that truly enhance human life.

Ethical AI is not just about technology – it’s about trust, responsibility, and protecting the future of humanity. Responsible AI ensures that innovation benefits everyone, equally and safely.