AI Regulation 2.0 is the next wave of laws and standards designed to make AI safer, more transparent, and accountable across borders. It aligns governments and industry around practical rules—like risk-based oversight, impact assessments, and clear documentation—so AI can be innovative without causing harm. In short: the world is moving from loose principles to enforceable policy with real obligations, audits, and penalties, all aimed at safe, trustworthy AI.

What “AI regulation 2.0” really means

The first era of AI governance focused on ethics guidelines and voluntary frameworks. AI Regulation 2.0 shifts from “soft law” to binding rules—introducing penalties, independent oversight, and specific compliance steps organizations must follow. This helps close the gap between what companies say about responsible AI and what they actually do.

- Risk-based rules: Models and applications are judged by the risk they pose—general productivity tools face lighter rules; AI used in critical services (healthcare, hiring, public safety) faces strict controls.

- Transparency requirements: Organizations must document training data, model capabilities, limitations, and known risks, and disclose when users are interacting with AI.

- Accountability mechanisms: Clear lines of responsibility, independent audits, and incident reporting to regulators when something goes wrong.

- Safety and robustness: Testing for bias, reliability, security, and misuse—before deployment and continuously afterward.

- Human oversight: Ensuring critical decisions include meaningful human review where appropriate.

- International coordination: Common standards and benchmarks to reduce “regulatory friction” when operating across countries.

This second wave is more pragmatic: it aims to preserve innovation while addressing real-world harms and systemic risks.

Global policy landscape: where things stand

Different jurisdictions are moving at different speeds, but the direction is converging. Here’s how the major players are shaping AI Regulation 2.0.

European Union: A comprehensive, risk-based AI Act

- Scope: Covers providers, deployers, importers, and distributors of AI systems placed on or used in the EU market.

- Risk tiers: Prohibits some uses (like social scoring and manipulative techniques that exploit people's vulnerabilities), sets strict rules for high-risk systems (e.g., in employment, education, critical infrastructure), and creates lighter obligations for limited-risk applications.

- Foundation models: Extra obligations for general-purpose models around transparency, safety, and risk mitigation.

- Enforcement: Conformity assessments, post-market monitoring, and significant fines (up to €35 million or 7% of global annual turnover for prohibited practices).

United States: Sector-first with executive and agency guidance

- Approach: A mix of executive orders, NIST frameworks, and sector-specific rules (e.g., healthcare, finance, consumer protection).

- Focus: Safety testing, secure development, model evaluations, watermarking for synthetic content, and accountability in high-impact uses.

- Trend: Strong emphasis on voluntary standards that can become de facto requirements through procurement and enforcement actions.

United Kingdom: Principles-led, regulator-coordinated

- Strategy: Empower existing regulators (like ICO for data protection, FCA for financial services) instead of a single AI law.

- Emphasis: Innovation-friendly guardrails, sandboxing, and targeted guidance for high-risk applications.

- Practicality: Strong push for risk management, transparency, and safe deployment without stifling startups.

China: Rapid, iterative rulemaking

- Generative AI rules: Content responsibility, watermarking, and security reviews for large models.

- Algorithm regulation: Registrations and audits for recommendation systems.

- Compliance culture: Extensive documentation and monitoring, especially for public-facing applications.

International bodies: Principles aligning into standards

- OECD & G7: Shared principles on safety, accountability, and human rights; growing work on benchmarks and evaluations.

- UNESCO: Ethical AI recommendations influencing national adoption.

- ISO/IEC standards: Technical baselines for risk management, quality, and security emerging as practical compliance tools.

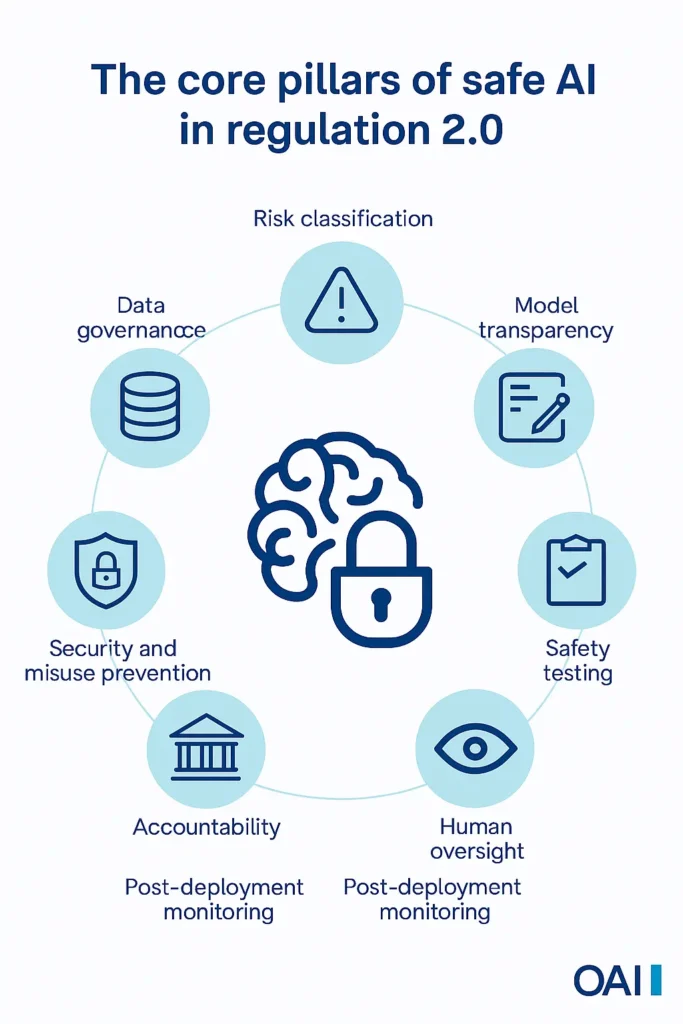

The big picture: while the legal texts differ, they’re converging on similar pillars—risk classification, transparency, testing, and accountability.

The core pillars of safe AI in regulation 2.0

Safe AI isn’t a slogan—it’s a set of concrete practices that make systems predictable, fair, and accountable. Regulation 2.0 centers on nine pillars that translate ethical intentions into operational requirements. Below, each pillar includes a clear overview and actionable bullets to help you implement it without slowing innovation.

Risk classification

Not all AI carries the same risk. Classifying systems by their potential impact determines how deep your controls must go.

- Define risk tiers: Minimal, limited, high, and unacceptable—based on potential harm to safety, rights, and critical services.

- Map use contexts: Evaluate where and how the AI is used (e.g., hiring vs. content tagging) to capture real-world exposure.

- Set obligations by tier: High-risk needs rigorous testing, documentation, and human oversight; limited-risk gets lighter guardrails.

- Document rationale: Record why a system was assigned its tier to support audits and future reclassification.
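The tiering steps above can be sketched in a few lines of code. This is a minimal illustration, not a legal classification tool: the context names, the tier assigned to each context, and the obligations per tier are all assumptions made for the example, and a real mapping would come from legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3
    UNACCEPTABLE = 4


# Hypothetical mapping from use context to tier (assumption for illustration).
CONTEXT_TIERS = {
    "content_tagging": RiskTier.MINIMAL,
    "customer_chatbot": RiskTier.LIMITED,
    "hiring_screen": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "social_scoring": RiskTier.UNACCEPTABLE,
}

# Illustrative obligations per tier, loosely following the bullets above.
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["user_disclosure"],
    RiskTier.HIGH: ["rigorous_testing", "documentation", "human_oversight"],
    RiskTier.UNACCEPTABLE: ["do_not_deploy"],
}


@dataclass(frozen=True)
class Classification:
    system: str
    tier: RiskTier
    obligations: tuple
    rationale: str  # kept so an auditor can see why the tier was chosen


def classify(system: str, contexts: list, rationale: str) -> Classification:
    """Assign the highest tier among all contexts the system is used in."""
    if not contexts:
        raise ValueError("at least one use context is required")
    # Unknown contexts default to HIGH so they get reviewed, not waved through.
    tiers = [CONTEXT_TIERS.get(c, RiskTier.HIGH) for c in contexts]
    tier = max(tiers, key=lambda t: t.value)
    return Classification(system, tier, tuple(OBLIGATIONS[tier]), rationale)


result = classify(
    "resume-ranker",  # hypothetical system name
    ["content_tagging", "hiring_screen"],
    "Ranks job applicants, so the employment context sets the tier.",
)
print(result.tier.name, result.obligations)
```

Taking the highest tier across contexts reflects the point that the same model can be low risk in one setting and high risk in another.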

Data governance

Data shapes outcomes. Strong governance reduces bias, drift, and legal exposure while improving reliability.

- Provenance tracking: Maintain sources, licenses, consent bases, and transformations from raw to clean data.

- Quality management: De-duplicate, balance classes, and monitor coverage for underrepresented groups.

- Privacy controls: Minimize personal data, enforce retention limits, and apply anonymization where feasible.

- Bias mitigation: Use bias detection, counterfactual testing, and targeted augmentation to reduce disparities.

- Secure pipelines: Protect data at rest and in transit; restrict access with role-based controls and audit logs.
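Provenance tracking is easier to picture with a concrete record. The sketch below is one possible shape, assuming a simple in-memory record and SHA-256 fingerprints; the field names, the `crm_export.csv` source, and the `dedupe` step are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ProvenanceRecord:
    source: str          # where the raw data came from
    license: str         # licence or contractual basis
    consent_basis: str   # e.g. "consent", "contract", "not_personal"
    content_sha256: str  # fingerprint of the data at the current stage
    transformations: list = field(default_factory=list)


def fingerprint(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def apply_step(record: ProvenanceRecord, data: bytes, name: str, fn) -> bytes:
    """Run one transformation and log it with before/after fingerprints."""
    new_data = fn(data)
    record.transformations.append(
        {
            "step": name,
            "input_sha256": record.content_sha256,
            "output_sha256": fingerprint(new_data),
        }
    )
    record.content_sha256 = fingerprint(new_data)
    return new_data


def dedupe(data: bytes) -> bytes:
    """Drop repeated rows while keeping the original order."""
    seen, out = set(), []
    for line in data.splitlines():
        if line not in seen:
            seen.add(line)
            out.append(line)
    return b"\n".join(out) + b"\n"


raw = b"alice,42\nbob,37\nalice,42\n"
record = ProvenanceRecord(
    source="crm_export.csv",  # hypothetical source
    license="internal",
    consent_basis="contract",
    content_sha256=fingerprint(raw),
)
clean = apply_step(record, raw, "deduplicate_rows", dedupe)
print(json.dumps(asdict(record), indent=2))
```

Because each step stores the input and output fingerprints, a reviewer can later check that the cleaned dataset really descends from the documented source.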

Model transparency

Transparency builds trust and enables oversight without exposing sensitive IP. Make capabilities and limits legible.

- Model cards: Summarize intended use, out-of-scope cases, known limitations, and benchmark results.

- Explainability: Provide local and global explanations, feature importance, and decision rationales for critical outcomes.

- Evaluation reporting: Share test methods, datasets, metrics, and caveats—especially for safety, robustness, and fairness.

- Update histories: Keep changelogs of retraining, parameter changes, and their measured effects.

- User-facing disclosures: Inform users when AI is involved and what confidence or uncertainty applies.
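A model card and its update history can live in version control as structured data. The following is a rough sketch of that idea; the `ModelCard` fields are an assumption based on the bullets above, not a standard schema, and the metric values are made up.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    out_of_scope: list
    limitations: list
    benchmarks: dict  # metric name -> value
    changelog: list = field(default_factory=list)

    def record_update(self, version: str, note: str, benchmarks: dict) -> None:
        """Log what changed and its measured effect, then adopt the new state."""
        self.changelog.append(
            {
                "from": self.version,
                "to": version,
                "note": note,
                "before": dict(self.benchmarks),
                "after": dict(benchmarks),
            }
        )
        self.version = version
        self.benchmarks = dict(benchmarks)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)


card = ModelCard(
    name="resume-ranker",  # hypothetical system
    version="1.0.0",
    intended_use="Rank applications for recruiter review.",
    out_of_scope=["Automatic rejection without human review"],
    limitations=["Lower accuracy on non-English resumes"],
    benchmarks={"accuracy": 0.91, "selection_rate_ratio": 0.84},  # made-up values
)
card.record_update(
    "1.1.0",
    "Retrained with more multilingual data.",
    {"accuracy": 0.92, "selection_rate_ratio": 0.88},
)
print(card.to_json())
```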

Safety testing

Testing turns assumptions into evidence. It must be continuous, adversarial, and aligned to the risk tier.

- Pre-deployment validation: Run accuracy, robustness, calibration, and fairness tests against representative scenarios.

- Adversarial red-teaming: Probe for jailbreaks, prompt injection, data poisoning, and misuse pathways.

- Stress and edge cases: Evaluate performance on rare or high-stakes inputs; measure worst-case behavior.

- Safety thresholds: Define acceptable ranges for error, bias, and security events; block release if thresholds aren’t met.

- Re-test on change: Re-run evaluations after updates or context shifts to prevent unnoticed regressions.
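The "block release if thresholds aren't met" rule translates naturally into a release gate. This is a minimal sketch: the metric names and limits are placeholders chosen for illustration, and appropriate values depend on the system and its risk tier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    higher_is_better: bool

    def passes(self, value: float) -> bool:
        if self.higher_is_better:
            return value >= self.limit
        return value <= self.limit


# Placeholder limits (assumptions); set real ones per system and risk tier.
THRESHOLDS = [
    Threshold("accuracy", 0.90, higher_is_better=True),
    Threshold("expected_calibration_error", 0.05, higher_is_better=False),
    Threshold("demographic_parity_gap", 0.10, higher_is_better=False),
    Threshold("jailbreak_success_rate", 0.01, higher_is_better=False),
]


def release_gate(results: dict) -> list:
    """Return a list of failures; an empty list means the release may proceed."""
    failures = []
    for t in THRESHOLDS:
        if t.metric not in results:
            # A missing evaluation counts as a failure, not a pass.
            failures.append(f"{t.metric}: missing result")
        elif not t.passes(results[t.metric]):
            failures.append(f"{t.metric}: {results[t.metric]} violates limit {t.limit}")
    return failures


candidate = {
    "accuracy": 0.93,
    "expected_calibration_error": 0.04,
    "demographic_parity_gap": 0.14,
}
failures = release_gate(candidate)
print("BLOCKED" if failures else "OK", failures)
```

Running the same gate after every retraining or context change is one way to implement the re-test bullet.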

Human oversight

Humans remain accountable for consequential decisions. Oversight should be meaningful, not ceremonial.

- Decision gates: Require human review for high-risk outputs (e.g., credit denials, medical suggestions, hiring filters).

- Escalation paths: Route uncertain or low-confidence cases to qualified reviewers with domain expertise.

- Override mechanisms: Allow humans to correct, pause, or reverse automated actions and record the justification.

- Training and guidance: Equip reviewers with clear criteria, bias awareness, and tooling to interpret model explanations.

- User appeals: Provide channels for people to contest outcomes and trigger human re-evaluation.
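Decision gates, escalation, and overrides can be expressed as a small routing rule. The sketch below assumes a confidence score is available for each decision and that "deny" is the adverse outcome; the 0.85 cut-off and all names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    case_id: str
    outcome: str       # e.g. "approve" or "deny"
    confidence: float  # model confidence in [0, 1]
    high_risk: bool    # taken from the system's risk classification


def route(decision: Decision, min_confidence: float = 0.85,
          adverse_outcomes: tuple = ("deny",)) -> Route:
    """Send adverse high-risk outcomes and low-confidence cases to a person."""
    if decision.high_risk and decision.outcome in adverse_outcomes:
        return Route.HUMAN_REVIEW
    if decision.confidence < min_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO


@dataclass
class Override:
    case_id: str
    reviewer: str
    new_outcome: str
    justification: str

    def __post_init__(self):
        # Overrides without a recorded reason are rejected outright.
        if not self.justification.strip():
            raise ValueError("an override must record a justification")


credit_denial = Decision("case-001", "deny", 0.99, high_risk=True)
print(route(credit_denial).value)
override = Override("case-001", "reviewer-7", "approve", "Income evidence was misread.")
```

Note that the credit denial goes to review even at 0.99 confidence: for adverse high-risk outcomes, the gate depends on the stakes, not on how sure the model is.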

Accountability

Clear ownership turns safety from “everyone’s job” into “somebody’s responsibility.”

- Named owners: Assign accountable leads for model risk, data governance, security, and compliance.

- Policies and SLAs: Define standards, performance targets, and response timelines for safety incidents.

- Audit readiness: Maintain evidence—docs, tests, logs—to demonstrate compliance at any time.

- Incident reporting: Specify detection thresholds, playbooks, and communication plans for internal and external stakeholders.

- Vendor accountability: Extend obligations to third-party providers; contract for transparency and remediation.
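Audit evidence is more convincing when it is hard to alter after the fact. One common technique is a hash-chained log, sketched below under the assumption of a simple in-memory store; the event fields are hypothetical, and a production system would also need durable storage and access controls.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


class AuditLog:
    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> dict:
        """Add an event whose hash covers the previous entry's hash."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        entry = {"event": event, "prev": prev, "hash": _digest(prev, event)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"type": "evaluation", "model": "resume-ranker", "owner": "model-risk-lead"})
log.append({"type": "incident", "severity": "high", "status": "reported"})
intact = log.verify()

log.entries[0]["event"]["owner"] = "someone-else"  # simulate tampering
tampered_detected = not log.verify()
print(intact, tampered_detected)
```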

Security and misuse prevention

AI systems widen the attack surface. Security must cover models, data, prompts, and endpoints.

- Access controls: Use strong authentication, least privilege, and isolation for model endpoints and datasets.

- Prompt security: Guard against injection and context poisoning; sanitize inputs and compartmentalize system prompts.

- Abuse detection: Monitor for scraping, exfiltration, automated exploitation, and suspicious usage patterns.

- Rate limiting and quotas: Throttle high-risk operations to reduce automated abuse and cascading failures.

- Supply-chain hardening: Vet training data sources, libraries, and model artifacts; verify integrity and provenance.
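Rate limiting is one of the more mechanical controls in this list. A token bucket is a common way to do it; the sketch below is a single-process illustration with assumed capacity and refill values, and it takes an injectable clock so the behavior can be demonstrated without waiting.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then `refill_per_second` on average."""

    def __init__(self, capacity: float, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        elapsed = now - self.updated
        # Refill for the time that has passed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# A fake clock makes the demo deterministic.
fake_now = [0.0]
bucket = TokenBucket(capacity=2, refill_per_second=1.0, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(3)]  # third call exceeds the burst size
fake_now[0] += 1.0                          # one second later, one token is back
after_wait = bucket.allow()
print(burst, after_wait)
```

Giving high-risk operations a higher `cost` than ordinary ones is a simple way to throttle them harder with the same bucket.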

User disclosure

People should know when AI shapes their experience and how to seek human help.

- Clear notices: Indicate AI involvement at decision points, not buried in terms.

- Role transparency: Explain whether AI is advisory, assistive, or determinative in the workflow.

- Limitations and risks: Communicate common failure modes, uncertainty, and appropriate user actions.

- Appeal options: Offer straightforward paths to human review, corrections, or opt-outs where applicable.

- Accessible language: Avoid jargon; write disclosures for the actual user, not compliance teams.

Post-deployment monitoring

Real-world performance drifts. Monitoring ensures safety doesn’t decay after launch.

- Operational metrics: Track accuracy, calibration, fairness, robustness, latency, and safety events over time.

- Drift detection: Compare live distributions to training data; trigger reviews when thresholds are crossed.

- Feedback loops: Capture user reports and expert audits; convert signals into prioritized fixes.

- Safe updates: Use staged rollouts, canaries, and automatic rollbacks for new model versions.

- Lifecycle governance: Define deprecation, archival, and retraining schedules with ongoing compliance checks.
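Drift detection needs a number to compare against a threshold. One widely used option is the Population Stability Index (PSI), sketched here for a single numeric feature. The bin count and the 0.2 review threshold are assumptions for the example; teams usually tune both.

```python
import math


def psi(expected: list, actual: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant baselines

    def proportions(values: list) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


REVIEW_THRESHOLD = 0.2  # assumed cut-off for triggering a review

baseline = [i / 100 for i in range(100)]       # training-time feature values
shifted = [0.5 + i / 200 for i in range(100)]  # live values concentrated higher

no_drift = psi(baseline, baseline)
drift = psi(baseline, shifted)
print(no_drift, drift > REVIEW_THRESHOLD)
```

In practice the same check would run on a schedule for each monitored feature and model output, with a crossed threshold opening a review.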

What this means for businesses today

AI Regulation 2.0 is not just for legal teams—it changes how product, engineering, and operations work together. Here’s the practical impact.

- Product roadmaps: High-risk features may need more time for testing and documentation. Plan for gated releases and audit readiness.

- Procurement: Buyers will demand proof of safety, transparency, and compliance from vendors—expect questionnaires and model documentation.

- Liability and contracts: Vendors and deployers will need to split responsibility; expect clauses on risk treatment, incident response, and data/IP warranties.

- Talent: New roles emerge—AI safety engineers, model risk leads, compliance architects, evaluators, and prompt security specialists.

- Budgeting: Allocate resources for evaluations, red-teaming, documentation, and tooling (governance platforms, monitoring).

- Market advantage: Trust becomes a differentiator. Teams that can demonstrate safe, reliable AI will win contracts and consumer confidence.

A practical compliance roadmap (step-by-step)

Use this phased approach to align with the new policies without slowing innovation.

Phase 1: Discovery and classification

- Inventory AI systems: Catalog models, datasets, use cases, and user touchpoints.

- Classify risk: Tag systems as minimal, limited, high, or unacceptable risk, and document the rationale; retire or redesign anything that falls in the unacceptable tier.

- Define intended use: Write a clear purpose statement and prohibited uses.

Phase 2: Foundations and documentation

- Data provenance logs: Track sources, licenses, and consent; document cleaning and augmentation techniques.

- Model cards: Publish capabilities, limitations, benchmarks, and safety mitigations.

- Use and impact assessments: Evaluate fairness, privacy, security, and societal impacts before launch.

Phase 3: Safety and security controls

- Red-teaming: Simulate attacks, misuse, and adversarial prompts; record findings and fixes.

- Guardrails: Add content filters, policy alignment layers, and rate limits for public endpoints.

- Human-in-the-loop: Gate decisions where harm is possible; add review workflows and override paths.

Phase 4: Oversight and accountability

- Governance committee: Assign accountable owners across legal, security, product, and research.

- Incident management: Define thresholds, escalation, user communications, and regulator notifications.

- Vendor risk: Evaluate third-party models and APIs with the same rigor as in-house systems.

Phase 5: Monitoring and continuous improvement

- Operational metrics: Track fairness, accuracy, robustness, latency, and safety indicators over time.

- Feedback pipelines: Capture user reports and domain expert reviews; prioritize fixes.

- Model updates: Re-run evaluations after retraining or parameter changes; maintain changelogs.

Tools and techniques that help you comply

While policies are high-level, real compliance is built with practical techniques.

- Model and data documentation: Make knowledge portable. Use model cards, data sheets, and evaluation reports; keep them versioned.

- Evaluation and red-teaming: Prove reliability. Run bias testing, adversarial evaluation, jailbreak resistance checks, and safety alignment measurements.

- Risk controls: Reduce harm. Minimize sensitive attributes for decisioning, apply thresholding, and set guardrails for high-risk flows.

- Prompt and output moderation: Keep conversations safe. Use policy filters, safety classifiers, and context-aware moderation with human escalation for edge cases.

- Security hygiene: Defend the stack. Protect model endpoints, use secrets management, add anomaly detection, and test for injection and exfiltration risks.

- Observability: See what the model does in the wild. Log prompts and outputs (privacy-aware), track drift, monitor failure modes, and watch for abuse patterns.
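Privacy-aware logging of prompts and outputs usually means redacting obvious identifiers before anything is written. The sketch below is a simplified illustration: the two regular expressions catch only common email and phone formats, and the salted hash for user IDs is an assumed pseudonymization scheme, so treat it as a starting point and not as complete PII detection.

```python
import hashlib
import re

# Illustrative patterns only; real PII detection needs broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def log_entry(user_id: str, prompt: str, output: str, salt: str) -> dict:
    """Build a log record with a pseudonymous user key and redacted text."""
    pseudonym = hashlib.sha256((salt + user_id).encode()).hexdigest()[:16]
    return {"user": pseudonym, "prompt": redact(prompt), "output": redact(output)}


entry = log_entry(
    user_id="user-123",  # hypothetical identifier
    prompt="Mail jane.doe@example.com or call +1 415-555-0100 today",
    output="Sure, I will draft that message.",
    salt="rotate-me",    # hypothetical secret; keep it out of source control
)
print(entry)
```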

Debates and open questions

AI Regulation 2.0 is evolving. Expect discussion and refinement around these topics.

a) Foundation model obligations: How far should transparency go without exposing IP or sensitive architecture details?

b) Open-source vs. closed models: Balancing security and accountability with the value of openness and community audits.

c) Global interoperability: Reducing compliance burden for multi-country deployments while respecting local norms and laws.

d) Safety vs. speed: Avoiding “checkbox compliance” that slows innovation; building lightweight, effective safety processes.

e) Measuring harm and fairness: Agreeing on metrics that are context-aware and genuinely predictive of real-world outcomes.

f) Synthetic media and provenance: Scaling watermarking and content provenance in a way that’s robust yet privacy-preserving.

What “safe AI” looks like in practice

Concrete examples clarify how to apply the rules day to day.

- Hiring and HR: Minimize bias and explain decisions. Use diverse, representative training data; test for disparate impact; provide clear candidate explanations and appeal pathways.

- Healthcare support: Keep humans in control. Have clinicians review AI suggestions, apply strong data governance, and run comprehensive post-deployment monitoring.

- Financial services: Document and audit models. Explain credit decisions; track model drift; ensure data lineage; adhere to fairness and anti-discrimination standards.

- Customer-facing chatbots: Disclose AI use. Provide clear notices, safety filters for sensitive topics, and escalation to human agents for complex or risky queries.

- Content generation: Respect IP and privacy. Document training sources, apply watermarking where required, and provide provenance where feasible.
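For the hiring example, "test for disparate impact" often starts with a selection-rate comparison. The sketch below computes the ratio of the lowest to the highest group selection rate and compares it with 0.8, the "four-fifths" rule of thumb from US employment guidance. The data is synthetic, and the ratio is a screening heuristic, not a legal determination.

```python
from collections import defaultdict


def selection_rates(records: list) -> dict:
    """records: iterable of (group, was_selected) pairs."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(records: list) -> float:
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Synthetic outcomes: group A selected 50 of 100, group B selected 30 of 100.
records = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = disparate_impact_ratio(records)
needs_review = ratio < 0.8  # four-fifths rule of thumb
print(round(ratio, 2), needs_review)
```

A ratio below the cut-off is a prompt to investigate the features and data behind the gap, with the explanation and appeal pathways still in place for individual candidates.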

Preparing for the next 3–5 years

The trajectory is clear: more structure, more accountability, more collaboration.

- Convergence on standards: Technical standards (evaluation, transparency, safety) will become the lingua franca of compliance.

- Regulatory capacity building: Expect better-equipped regulators, faster guidance updates, and more consistent enforcement.

- Model evaluation ecosystems: Independent testing labs and certification programs will emerge and become important to buyers.

- Trust as a market differentiator: Organizations that can demonstrate safe, reliable AI will gain customers, partners, and regulatory goodwill.

- Responsible scaling: As models grow more capable, guardrails, provenance, and human oversight will scale in tandem.

The smartest move now is to build safety and compliance into your product development lifecycle—so you’re ready as rules tighten.

Conclusion

AI Regulation 2.0 is the global pivot from good intentions to enforceable action. It centers on risk, transparency, accountability, and safety—so AI can help people without harming them. Whether you’re building models or deploying them, the steps are clear: document what you’re doing, evaluate the risks, put guardrails in place, and keep humans involved where it matters. The organizations that embrace these principles now won’t just avoid penalties—they’ll earn trust, move faster with confidence, and shape the future of safe AI.

FAQs

1. What is AI Regulation 2.0 in simple terms?

AI Regulation 2.0 is the shift from voluntary ethics to enforceable rules. It requires risk-based oversight, transparency about how models work, documented testing, and clear accountability so AI is safe, fair, and trustworthy.

2. Who needs to comply with AI Regulation 2.0?

Compliance applies to the full chain: model providers, businesses deploying AI, vendors integrating AI features, and organizations offering AI-enabled services, especially in high-impact areas like hiring, healthcare, finance, and public services.

3. What are the first steps to become compliant?

- Inventory: List your AI systems, data sources, and use cases.

- Classify risk: Tag systems by risk level with rationale.

- Document: Create model cards and data sheets.

- Test: Run bias, robustness, and security evaluations.

- Oversight: Add human-in-the-loop for high-risk decisions and define incident response.

4. How does AI Regulation 2.0 affect innovation speed?

It adds structure, and it need not add roadblocks. Teams that build evaluation, documentation, and guardrails into development tend to ship with fewer surprises, win trust sooner, and avoid costly redesigns triggered by unsafe behavior.

5. What are the penalties for non-compliance?

Penalties depend on jurisdiction but commonly include fines, enforced remediation, product restrictions, and reputational damage. Even where fines are low, losing buyer trust and market access can be a bigger business risk than the penalty itself.