AI-driven cybersecurity models use machine learning, deep learning, and statistical analytics to detect, classify, and predict cyber threats as they emerge, enabling organizations to respond faster and reduce damage. These systems continuously learn from network telemetry, logs, endpoint signals, and external threat intelligence to spot anomalous patterns, prioritize incidents, and forecast attacker behavior in real time. The result is a shift from slow, signature-based defenses to adaptive, predictive protection that scales with modern cloud and hybrid environments.

What Are AI-Driven Cybersecurity Models?

AI-driven cybersecurity models are machine learning and deep learning systems trained on security data (logs, network traffic, endpoint telemetry, emails, identities, and more) to identify patterns that indicate attacks.

They can:

- Learn what “normal” looks like for your systems and users

- Spot anomalies that may signal an intrusion or compromise

- Correlate thousands of weak signals into one high-confidence alert

- Continuously improve as they see new threats

Why Real-Time Detection and Prediction Matter

The stakes are high and rising:

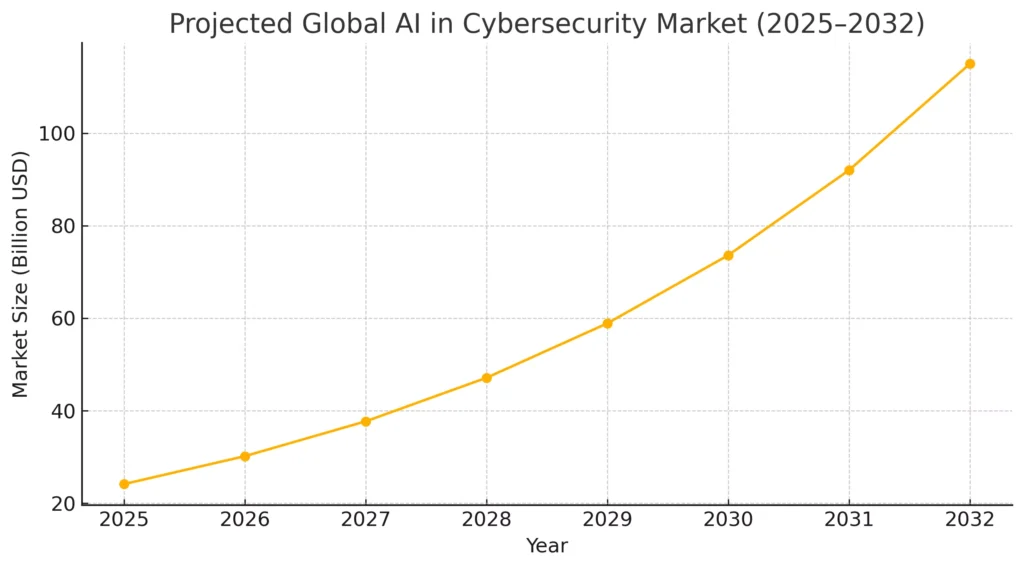

- The global AI in cybersecurity market is projected to reach around USD 115 billion by 2032, growing at ~20–30% CAGR, driven by the need for faster and smarter defenses.

- The average cost of a data breach hit about USD 4.88 million in 2024, the biggest annual spike since the pandemic.

- Around 61% of enterprises say they can’t perform intrusion detection without AI/ML, and 69% consider AI security urgent.

- By 2025, roughly 67% of organizations are using AI in cybersecurity, with about 31% using it extensively.

At the same time, attackers are also using AI:

- Reports show AI-generated phishing, deepfakes, and automated ransomware campaigns rising sharply, with some studies warning that 93% of businesses expect daily AI-driven attacks in the near term.

Real-time detection and prediction are no longer “nice to have.” They’re the only way to keep up with machine-speed attackers.

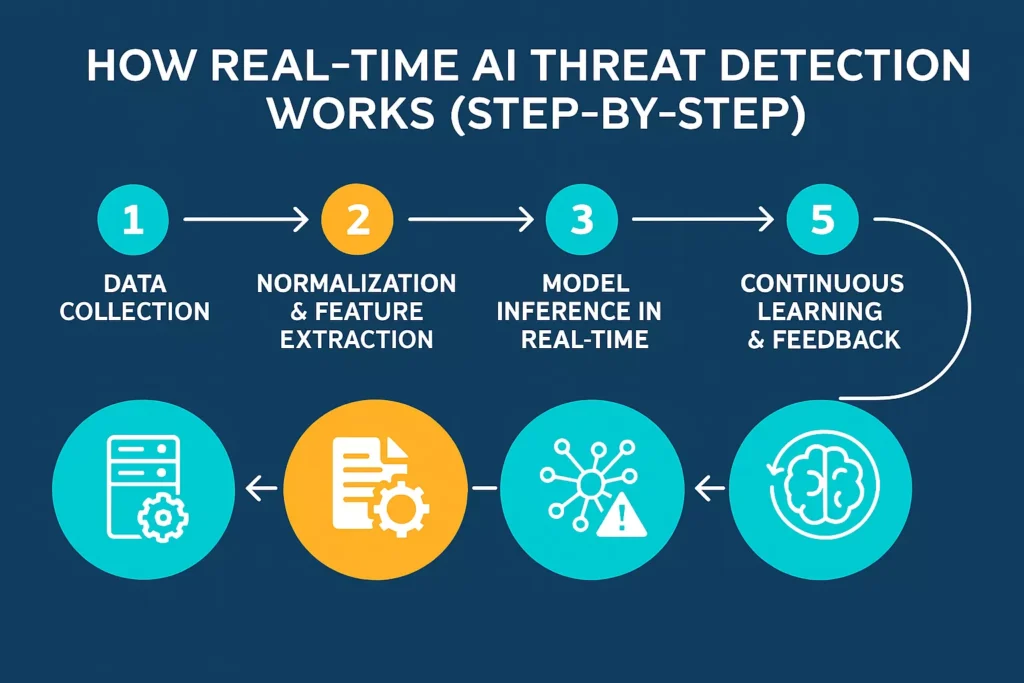

How Real-Time AI Threat Detection Works (Step-by-Step)

AI-driven cybersecurity operates through a structured pipeline that transforms raw data into actionable security decisions within seconds. Each stage strengthens detection accuracy, reduces false positives, and equips security teams with deeper context. The five steps below walk through that pipeline.

Step 1: Data Collection

Real-time AI detection begins by gathering enormous amounts of security data from every layer of the digital ecosystem. This ensures full visibility across networks, devices, identities, and cloud environments. The more diverse and comprehensive the data, the more accurate the AI model becomes.

AI-driven security platforms continuously ingest information from:

- Firewalls, proxies, and VPN logs – to understand traffic flows, blocked connections, and suspicious access attempts.

- Endpoint agents (EDR/XDR) – which provide rich telemetry about processes, applications, file changes, and device activity.

- Identity systems (SSO, Active Directory, IAM) – to monitor logins, privilege escalations, and potential account misuse.

- Cloud platforms (AWS, Azure, GCP logs) – capturing API calls, storage access, configuration changes, and cloud workload behavior.

- Email gateways & collaboration tools – detecting phishing, malicious attachments, unusual sender patterns, or compromised accounts.

- OT/ICS devices in industrial environments – critical for spotting anomalies in manufacturing, energy, or transport systems.

This massive and continuous data collection forms the foundation of real-time AI detection.

Step 2: Normalization & Feature Extraction

Raw logs gathered from different systems are inconsistent, noisy, and difficult for a model to interpret directly. The AI engine first cleans and standardizes this data, then extracts meaningful signals that help detect suspicious behavior.

During this stage, the system derives structured features such as:

- Number of failed login attempts within a specific time window

- Volume of outbound data transferred per user or device

- Frequency of new process executions or unusual system commands

- Geolocation and device fingerprints associated with login sessions

- Patterns based on time-of-day or day-of-week usage

By converting chaotic logs into precise metrics, AI models gain the clarity needed to identify subtle indicators of compromise that human analysts often miss.
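As a rough illustration of this step, the sketch below derives two of the features listed above (failed logins within a time window, and outbound volume per user) from already-normalized events. The event schema (`type`, `user`, `ts`, `success`, `bytes_out`) and the five-minute window are assumptions made for the example, not a standard log format.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


def failed_logins_in_window(events, window=timedelta(minutes=5)):
    """Return, per user, the peak count of failed logins inside any sliding window."""
    recent = defaultdict(deque)  # user -> timestamps of recent failures
    peak = defaultdict(int)
    for ev in sorted(events, key=lambda e: e["ts"]):
        if ev["type"] != "login" or ev["success"]:
            continue
        q = recent[ev["user"]]
        q.append(ev["ts"])
        # Drop failures that are older than the window relative to this event.
        while ev["ts"] - q[0] > window:
            q.popleft()
        peak[ev["user"]] = max(peak[ev["user"]], len(q))
    return dict(peak)


def outbound_bytes_per_user(events):
    """Sum outbound bytes per user across network-flow events."""
    totals = defaultdict(int)
    for ev in events:
        if ev["type"] == "netflow":
            totals[ev["user"]] += ev["bytes_out"]
    return dict(totals)


# Hypothetical sample data for illustration only.
t0 = datetime(2024, 1, 1, 9, 0, 0)
sample_events = [
    {"type": "login", "user": "alice", "ts": t0, "success": False},
    {"type": "login", "user": "alice", "ts": t0 + timedelta(minutes=1), "success": False},
    {"type": "login", "user": "alice", "ts": t0 + timedelta(minutes=2), "success": False},
    {"type": "login", "user": "alice", "ts": t0 + timedelta(minutes=30), "success": False},
    {"type": "login", "user": "bob", "ts": t0, "success": True},
    {"type": "netflow", "user": "bob", "ts": t0, "bytes_out": 1200},
    {"type": "netflow", "user": "bob", "ts": t0 + timedelta(minutes=3), "bytes_out": 800},
]

print(failed_logins_in_window(sample_events))  # {'alice': 3}
print(outbound_bytes_per_user(sample_events))  # {'bob': 2000}
```

In a real deployment these counters would typically be maintained incrementally by a stream processor rather than recomputed over a list.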

Step 3: Model Inference in Real Time

Once features are extracted, the AI model analyzes live events or batches of events to determine whether activity is normal or potentially malicious. This is where real-time intelligence truly comes into play.

In this step, the model performs several tasks:

- Assigns a probability score indicating how likely the behavior is malicious.

- Generates a risk rating (e.g., 0–100) to help analysts prioritize serious threats.

- Correlates data across multiple sources, such as linking a suspicious login to an abnormal process start or data transfer.

- Groups related alerts into a single incident, reducing noise and preventing alert fatigue.

This correlation is critical: an isolated event might appear harmless, but when combined with dozens of small signals, AI can reveal the early stages of an attack.
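To make the scoring and correlation ideas concrete, here is a minimal sketch. It maps a model probability to a 0–100 rating and groups alerts for the same entity that arrive close together in time. The alert fields, the ten-minute gap, and the "noisy-OR" way of combining probabilities (which assumes the alerts are independent) are illustrative assumptions, not how any particular product works.

```python
from collections import defaultdict


def risk_rating(probability):
    """Map a model probability in [0, 1] to an integer 0-100 risk rating."""
    clipped = min(max(probability, 0.0), 1.0)
    return round(clipped * 100)


def _summarize(entity, alerts):
    # Noisy-OR: probability that at least one alert is a real threat,
    # under the strong assumption that the alerts are independent.
    p_none = 1.0
    for a in alerts:
        p_none *= 1.0 - a["prob"]
    return {"entity": entity, "alerts": len(alerts), "risk": risk_rating(1.0 - p_none)}


def group_into_incidents(alerts, window_seconds=600):
    """Group alerts sharing an entity when each follows the previous within the window."""
    by_entity = defaultdict(list)
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        by_entity[alert["entity"]].append(alert)
    incidents = []
    for entity, items in by_entity.items():
        current = [items[0]]
        for alert in items[1:]:
            if alert["ts"] - current[-1]["ts"] <= window_seconds:
                current.append(alert)
            else:
                incidents.append(_summarize(entity, current))
                current = [alert]
        incidents.append(_summarize(entity, current))
    return incidents


# Hypothetical alerts: ts is seconds since an arbitrary start.
sample_alerts = [
    {"entity": "host-7", "ts": 0, "prob": 0.3, "kind": "suspicious_login"},
    {"entity": "host-7", "ts": 120, "prob": 0.4, "kind": "abnormal_process"},
    {"entity": "host-7", "ts": 300, "prob": 0.5, "kind": "large_transfer"},
    {"entity": "host-9", "ts": 50, "prob": 0.2, "kind": "suspicious_login"},
]

for incident in group_into_incidents(sample_alerts):
    print(incident)
```

Three individually weak signals on `host-7` (0.3, 0.4, 0.5) combine into a single incident rated 79, while the lone alert on `host-9` stays at 20.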

Step 4: Automated Response & SOAR

If the system identifies high-risk behavior, the AI engine can take immediate action—often within seconds. Security Orchestration, Automation, and Response (SOAR) tools work alongside AI to execute predefined playbooks automatically.

Based on severity thresholds, the system can initiate actions such as:

- Account lockout or multi-factor authentication challenge to stop account takeover.

- Quarantining an endpoint to isolate a compromised device from the network.

- Blocking a suspicious process, IP address, or malicious file before damage spreads.

- Automatically generating a detailed incident ticket with full context for SOC analysts.

This rapid response drastically reduces Mean Time to Respond (MTTR) and prevents attackers from escalating their access.
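The threshold-to-action mapping can be sketched as a small lookup table. The thresholds, action names, and the `detection_only` switch below are hypothetical; a real SOAR platform defines playbooks in its own format, and the cut-offs would be tuned per environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Playbook:
    min_risk: int    # lowest risk rating (0-100) that triggers this playbook
    action: str
    automated: bool  # False means a human must act on the ticket


# Hypothetical thresholds, ordered from most to least severe.
PLAYBOOKS = [
    Playbook(90, "quarantine_endpoint", True),
    Playbook(70, "lock_account", True),
    Playbook(50, "require_mfa_challenge", True),
    Playbook(0, "open_ticket_only", False),
]


def select_playbook(risk, detection_only=False):
    """Pick the most severe playbook whose threshold the risk rating meets."""
    if risk < 0:
        raise ValueError("risk must be >= 0")
    for pb in PLAYBOOKS:
        if risk >= pb.min_risk:
            if detection_only and pb.automated:
                # In detection-only mode, downgrade automated actions to a ticket.
                return Playbook(pb.min_risk, "open_ticket_only", False)
            return pb


print(select_playbook(95).action)                       # quarantine_endpoint
print(select_playbook(72).action)                       # lock_account
print(select_playbook(95, detection_only=True).action)  # open_ticket_only
```

Keeping a detection-only switch makes it possible to observe what the automation would have done before letting it act.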

Step 5: Continuous Learning & Feedback

AI-driven cybersecurity becomes smarter over time. Human feedback, new data, and updated threat intelligence continuously enhance model performance.

As analysts review alerts and mark them as true or false positives, the system learns to:

- Improve detection accuracy by understanding the difference between benign anomalies and real threats.

- Reduce false positives, ensuring security teams are not overwhelmed by noise.

- Refine prioritization, highlighting only the incidents with genuine impact or urgency.

With every cycle of learning, the AI models become more aligned with your organization’s behavior patterns, infrastructure, and evolving threat landscape.
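One simple form this feedback can take is re-tuning the alert threshold from analyst verdicts. The sketch below assumes each reviewed alert is recorded as a `(score, is_true_positive)` pair and picks the lowest threshold that meets a target precision. The data and the 0.8 target are made up for illustration, and full model retraining would go well beyond this.

```python
def precision_at(threshold, labeled):
    """Precision of alerts scoring >= threshold; None if nothing is flagged."""
    flagged = [is_tp for score, is_tp in labeled if score >= threshold]
    if not flagged:
        return None
    return sum(flagged) / len(flagged)


def retune_threshold(labeled, target_precision=0.8):
    """Return the lowest observed score usable as a threshold that meets the target."""
    for threshold in sorted({score for score, _ in labeled}):
        p = precision_at(threshold, labeled)
        if p is not None and p >= target_precision:
            return threshold
    return None  # no threshold reaches the target on this feedback


# Hypothetical analyst verdicts: (model score, confirmed as a real threat?)
verdicts = [
    (0.95, True), (0.90, True), (0.80, False),
    (0.75, True), (0.60, False), (0.55, False),
]

print(retune_threshold(verdicts))        # 0.9
print(retune_threshold(verdicts, 0.75))  # 0.75
```

Raising the threshold this way trades recall for precision, so missed detections should be tracked alongside it.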

How AI models fit into a modern cybersecurity stack

- Data collection layer: network flows, DNS queries, authentication logs, endpoint telemetry, cloud API calls, and external threat feeds.

- Feature extraction and enrichment: parsing raw telemetry into meaningful features (e.g., unusual process parent-child relationships, spikes in outbound traffic, or atypical login locations).

- Model inference layer: real-time scoring using ML/DL models to flag anomalies, classify threat types, and estimate risk.

- Orchestration and response: automated playbooks, SOAR integrations, and human analyst workflows for triage and remediation.

This layered integration allows AI models to augment existing SIEM, EDR, and NDR platforms rather than replace them, making adoption smoother and less disruptive to operations.

Core AI techniques used for real-time detection and prediction

a) Supervised learning for known-threat classification

Supervised models (logistic regression, random forests, gradient-boosted trees, and deep neural networks) are trained on labeled attack and benign examples to classify events quickly. These excel when you have high-quality labeled data for common threats and are commonly used for phishing detection, malware classification, and malicious URL detection.

b) Unsupervised and semi-supervised models for anomaly detection

Because many new attacks are unseen, unsupervised methods — clustering, autoencoders, and one-class models — detect deviations from learned “normal” baselines. Semi-supervised approaches combine limited labeled samples with large unlabeled datasets to improve sensitivity without requiring exhaustive annotation.
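The idea of scoring deviation from a learned baseline can be shown with a deliberately simple statistical stand-in for the clustering, autoencoder, and one-class models named above: a robust z-score built from the median and the median absolute deviation (MAD). The sample history and the commonly used 3.5 cut-off are assumptions for the example.

```python
import statistics


def fit_baseline(values):
    """Learn a robust 'normal' baseline: the median and median absolute deviation."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    return median, mad


def anomaly_score(value, baseline):
    """Modified z-score; values above roughly 3.5 are often treated as outliers."""
    median, mad = baseline
    if mad == 0:
        return 0.0 if value == median else float("inf")
    return 0.6745 * abs(value - median) / mad


# Hypothetical history: one user's daily outbound traffic in MB.
history = [100, 120, 95, 110, 105, 98, 115]
baseline = fit_baseline(history)

print(baseline)                          # (105, 7)
print(anomaly_score(108, baseline) > 3.5)  # False: within normal variation
print(anomaly_score(900, baseline) > 3.5)  # True: far outside the baseline
```

No labels are needed: the model only knows what "normal" looked like, which is also why such detectors tend to need tuning to keep false positives manageable.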

c) Deep learning for sequence and behavior modeling

Recurrent neural networks (RNNs), transformer-based models, and temporal convolutional networks capture sequences (e.g., command sequences, API call chains, authentication events) to identify suspicious behavior that single-event models miss.

d) Graph-based models for relationship analysis

Attackers leave relational traces (e.g., lateral movement across hosts, shared infrastructure). Graph neural networks (GNNs) and other graph analytics reveal hidden connections across entities — users, devices, processes, and IPs — improving detection of coordinated campaigns and supply-chain compromises.

e) Hybrid architectures and ensembles

Production systems often combine multiple models — supervised classifiers, anomaly detectors, and rule-based filters — into ensembles to balance precision and recall, reduce false positives, and surface higher-confidence alerts for analysts.

Real-time constraints and engineering trade-offs

Real-time detection introduces strict performance and latency requirements. Models must balance:

- Latency vs complexity: Deep models can be accurate but slower; lightweight models or distilled versions may be used at the network edge for instant scoring, while heavier models run asynchronously for deeper analysis.

- Precision vs recall: Aggressive detection catches more attacks but increases false positives, overloading analysts. Tunable thresholds, risk-scoring, and dynamic baselines help maintain operational balance.

- Explainability vs performance: Security teams need understandable alerts. Techniques like SHAP, LIME, or model simplification are used to provide human-readable rationales without sacrificing detection quality.

- Data privacy and compliance: Sensitive telemetry must be processed in privacy-preserving ways (on-prem inference, anonymization, or federated learning) to meet regulations and internal policies.
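The latency-versus-complexity trade-off is often handled with a cascade: a cheap model decides the clear cases, and only uncertain events are passed to a heavier model. The sketch below uses two toy functions as stand-ins for real models; their logic, the event fields, and the 0.2/0.8 band are all hypothetical. For brevity the heavy model is called synchronously here, whereas the text above describes running it asynchronously.

```python
def fast_model(event):
    # Stand-in for a lightweight edge model: scales failed logins into [0, 1].
    return min(1.0, event["failed_logins"] / 10)


def heavy_model(event):
    # Stand-in for a slower, richer model that also uses location context.
    return 0.9 if event["new_country"] and event["failed_logins"] >= 3 else 0.1


def cascade_score(event, low=0.2, high=0.8):
    """Decide clear cases with the fast model; escalate the uncertain band."""
    fast = fast_model(event)
    if fast < low:
        return {"score": fast, "tier": "fast", "verdict": "benign"}
    if fast >= high:
        return {"score": fast, "tier": "fast", "verdict": "malicious"}
    deep = heavy_model(event)
    verdict = "malicious" if deep >= 0.5 else "benign"
    return {"score": deep, "tier": "heavy", "verdict": verdict}


print(cascade_score({"failed_logins": 1, "new_country": False}))
print(cascade_score({"failed_logins": 9, "new_country": False}))
print(cascade_score({"failed_logins": 5, "new_country": True}))
```

Widening the uncertain band sends more traffic to the heavy model, which improves accuracy at the cost of latency and compute.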

Data: the fuel of accurate prediction

High-quality, diverse, and timely data is the single most important factor in AI-driven cybersecurity success. Important data practices include:

- Normalizing and enriching telemetry with context (asset criticality, user roles, geolocation).

- Labeling with consistent taxonomies and investing in expert review to improve supervised learning.

- Continuous feedback loops from analysts to retrain models and correct drift.

- Incorporating external threat intelligence (IOC feeds, vulnerability disclosures) to surface indicators that models alone might miss.

Organizations that treat data pipelines as first-class systems (with monitoring, versioning, and retraining schedules) achieve sustained performance improvements.

Use cases: detection to prediction

1. Real-time intrusion and lateral movement detection

By combining endpoint telemetry and network flows, AI models detect early anomalies (credential misuse, odd process creation patterns) and predict possible lateral movement paths, allowing containment before sensitive assets are reached.
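A very reduced version of lateral-movement path prediction is a shortest-path search over a "can authenticate to" graph. This is plain breadth-first search rather than a learned model, and the host names and edges below are invented for the example.

```python
from collections import deque


def shortest_path_to_critical(graph, start, critical):
    """Breadth-first search from a compromised host to the nearest critical asset."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node in critical:
            return path
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no reachable critical asset


# Hypothetical access graph: host -> hosts it can authenticate to.
access_graph = {
    "laptop-12": ["file-srv", "print-srv"],
    "file-srv": ["db-prod"],
    "print-srv": [],
    "db-prod": [],
}

print(shortest_path_to_critical(access_graph, "laptop-12", {"db-prod"}))
# ['laptop-12', 'file-srv', 'db-prod']
```

If `laptop-12` shows signs of credential misuse, the path suggests `file-srv` as the place to contain first.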

2. Automated phishing and malicious content filtering

Natural language models and URL classifiers evaluate email content, attachments, and linked pages in real time to block phishing attempts and train users through simulated campaigns.

3. Zero-day and polymorphic malware detection

Behavioral models that focus on runtime behavior (API calls, memory usage patterns) can catch malware variants that signatures miss by recognizing malicious execution patterns rather than static signatures.

4. Threat hunting and campaign attribution

Graph and sequence models help threat hunters link discrete incidents into campaigns and attribute tactics, techniques, and procedures (TTPs) to likely adversary groups based on historical behavior.

5. Predictive vulnerability exploitation forecasting

By combining vulnerability databases, exploit release timings, and observed attacker behavior, organizations can forecast which vulnerabilities are likely to be weaponized next and prioritize patches accordingly.

Interpreting alerts: reducing analyst fatigue

AI systems must present actionable, context-rich alerts to be useful. Good alerts include:

- A clear risk score and why the event was flagged (top contributing features).

- Related entities and historical context (previous alerts, recent changes on the host).

- Suggested remediation steps and automated playbooks for common, low-risk actions.

- Integration into analyst tools with quick investigative queries and kill-chain visualizations.

This reduces mean time to detect (MTTD) and mean time to respond (MTTR) by focusing human attention on the highest-value investigations.

Model lifecycle: deployment, monitoring, and retraining

A production-ready AI model follows a lifecycle:

- Development: feature engineering, training with cross-validation, and performance evaluation on realistic test sets.

- Validation: red-team testing, adversarial robustness checks, and measuring performance across diverse environments.

- Deployment: staged rollout with canary hosts and throttled alerting to gauge impact.

- Monitoring: continuous metrics for model drift, false positive rates, and latency.

- Retraining and governance: scheduled retraining with fresh labels, version control, and audit trails for reproducibility.

Governance and explainability requirements often demand an audit-ready trail showing when models changed, why, and how they performed over time.
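For the monitoring stage, one widely used drift signal is the Population Stability Index (PSI), which compares the distribution of model scores at training time with the distribution seen in production. The sketch below assumes scores lie in [0, 1] and uses ten equal-width bins. The sample data is invented, and the rule of thumb that values above about 0.25 indicate significant drift is a convention, not a guarantee.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    def proportions(sample):
        counts = [0] * bins
        for s in sample:
            idx = min(int(s * bins), bins - 1)  # a score of 1.0 goes in the last bin
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below is defined.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical score samples: training-time versus recent production traffic.
training_scores = [0.05, 0.15, 0.15, 0.25, 0.25, 0.35, 0.45, 0.55, 0.65, 0.75]
live_scores = [0.65, 0.75, 0.75, 0.85, 0.85, 0.95, 0.95, 0.95, 0.55, 0.45]

print(psi(training_scores, training_scores))     # 0.0
print(psi(training_scores, live_scores) > 0.25)  # True: scores have shifted upward
```

A rising PSI does not say why the inputs changed, only that the model is now scoring traffic unlike what it was trained on, which is a reasonable trigger for review or retraining.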

Threats to AI models and hardening strategies

AI models themselves are attack surfaces. Common adversarial risks and mitigations:

- Poisoning attacks: attackers inject malicious data into training pipelines. Mitigation: data provenance, robust validation, and outlier detection during training.

- Evasion attacks: input crafted to bypass detection. Mitigation: adversarial training, ensemble defenses, and input preprocessing.

- Model theft and privacy leakage: sensitive training data can be exfiltrated via model queries. Mitigation: rate limiting, differential privacy, and restricting access to model endpoints.

- Overfitting to lab conditions: models that perform well in controlled tests fail in the wild. Mitigation: diverse training datasets, realistic simulation of attacker behavior, and ongoing field testing.

A threat model for the ML pipeline should be part of security architecture planning.

Human + AI collaboration: the best outcomes

AI is most effective as an augmentation to human expertise. Practical collaboration patterns include:

- Analyst-in-the-loop workflows where AI prioritizes and suggests hypotheses while humans validate and adapt rules.

- Active learning loops where analysts label edge cases and feed them back into the training dataset.

- Playbook automation for repetitive low-risk tasks (quarantine host, block IP) while reserving complex decision-making for humans.

This collaborative approach reduces alert fatigue and ensures that models learn from expert judgement.

Real-world results and evidence

Organizations deploying AI-driven detection commonly report faster detection times, improved prioritization, and reduced workload for security teams. Academic and industry reviews show AI methods enhancing detection coverage and adapting to evolving threats, though success depends heavily on data maturity and operational integration.

Practical roadmap to adopt AI-driven detection

Adopting AI-driven cybersecurity requires a strategic and structured approach. Jumping in without a roadmap can lead to wasted investments, poor detection quality, and operational chaos. The six-step guide below helps organizations implement AI security smoothly and effectively.

Process 1: Define Clear Use Cases

The biggest mistake organizations make is trying to apply AI everywhere at once. Instead, begin with a focused, high-impact problem that AI can solve immediately. Start small, learn fast, and expand gradually.

Common high-value use cases include:

- Intrusion detection enhancement – improving real-time detection of unusual network activity.

- Phishing and email security – catching advanced phishing, BEC attacks, and malicious attachments.

- UEBA (User and Entity Behavior Analytics) – identifying insider threats or compromised accounts based on unusual behavior.

- Ransomware prevention – detecting early-stage indicators like rapid encryption changes or suspicious process activity.

Choosing 1–2 use cases ensures tighter control, cleaner testing, and faster measurable results.

Process 2: Assess Your Data Foundation

AI models are only as strong as the data feeding them. Before implementing any AI-based system, you must ensure the security data infrastructure is robust, well-organized, and comprehensive.

Key questions to evaluate your data readiness:

- Are critical logs centralized? Platforms like SIEM and XDR should collect logs from endpoints, firewalls, identities, and cloud systems in one place.

- Are logs structured, normalized, and retained long enough? Short retention periods or poor formatting reduce the effectiveness of AI analysis.

- Do we have visibility across endpoints, identity, network, and cloud? Missing visibility creates blind spots that no AI system can compensate for.

If these fundamentals are weak, enhance logging, monitoring, and data hygiene first. AI won’t deliver meaningful results without clean, complete data.

Process 3: Choose the Right Tools

Once your data foundation is ready, selecting the appropriate AI tools is crucial. The right choice depends on your environment, budget, and maturity level.

You can explore three main options:

- Native AI features within existing platforms (e.g., SIEM, XDR, email gateways, cloud security tools): ideal for quick wins and minimal disruption.

- Specialized AI-driven solutions (e.g., UEBA tools, OT/ICS anomaly detection, fraud prevention platforms): these provide deeper intelligence for specific risks.

- Custom-built AI models developed by in-house data science teams using organizational data: this offers maximum flexibility but requires high expertise.

For most organizations, the fastest success comes from using off-the-shelf AI security tools and enhancing them with proper tuning and integrations.

Process 4: Pilot, Then Scale

A pilot phase helps validate AI effectiveness while minimizing operational risk. It allows you to test detection accuracy, understand workflows, and fine-tune configurations before broader deployment.

During the pilot, focus on:

- Starting in detection-only mode: observe how the system identifies threats without triggering automated actions.

- Measuring false positives and missed alerts: this helps refine thresholds and ensure practical value.

- Tuning rules and model parameters: each environment is unique, and fine-tuning reduces noise and improves accuracy.

- Gradually enabling automation for low-risk actions, such as isolating suspicious email attachments or flagging unusual logins.

Once the pilot shows consistent value, expand to more systems, use cases, and automated playbooks.

Process 5: Integrate With SOC and SOAR

AI achieves its full potential only when it is tightly integrated into your security operations workflow. The goal is not to replace human analysts but to empower them with faster, deeper insights.

Ensure that AI security outputs:

- Flow directly into your SIEM/XDR dashboards to maintain unified visibility.

- Trigger automated responses via SOAR playbooks, allowing fast containment of routine threats.

- Include rich contextual information such as user history, asset criticality, and threat intelligence.

A seamless integration ensures analysts spend less time on repetitive tasks and more time on proactive threat hunting.

Process 6: Track Metrics

To measure success and guide long-term improvement, organizations must continuously monitor critical performance indicators. These metrics prove the value of AI and justify further investment.

Track metrics such as:

- Mean Time to Detect (MTTD) – how quickly threats are identified.

- Mean Time to Respond (MTTR) – how quickly they are contained.

- False positive rate – a key indicator of model quality and analyst workload.

- Incidents detected exclusively by AI – showcasing the system’s unique value.

- Overall business impact, including breaches prevented, reduced downtime, or financial losses avoided.

Analyzing these metrics helps refine detection models, improve automation workflows, and strengthen your overall security posture.
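The first three metrics can be computed directly from incident records. The sketch below assumes each record carries `started`, `detected`, and `contained` timestamps plus an analyst verdict. The field names and sample data are hypothetical, and the "false positive share" here is the fraction of alerts that analysts rejected, which is how SOC teams often use the term even though it differs from the statistical false positive rate.

```python
from datetime import datetime
from statistics import mean


def soc_metrics(incidents):
    """Compute MTTD, MTTR (in minutes) and the share of alerts that were false positives."""
    confirmed = [i for i in incidents if i["true_positive"]]
    # mean() raises StatisticsError if there are no confirmed incidents.
    detect_minutes = [(i["detected"] - i["started"]).total_seconds() / 60 for i in confirmed]
    respond_minutes = [(i["contained"] - i["detected"]).total_seconds() / 60 for i in confirmed]
    return {
        "mttd_minutes": mean(detect_minutes),
        "mttr_minutes": mean(respond_minutes),
        "false_positive_share": (len(incidents) - len(confirmed)) / len(incidents),
    }


def at(hour, minute):
    # Helper for readable sample timestamps on one arbitrary day.
    return datetime(2024, 1, 1, hour, minute)


# Hypothetical incident records for illustration only.
sample_incidents = [
    {"started": at(9, 0), "detected": at(9, 10), "contained": at(9, 40), "true_positive": True},
    {"started": at(10, 0), "detected": at(10, 30), "contained": at(11, 30), "true_positive": True},
    {"started": at(12, 0), "detected": at(12, 20), "contained": at(13, 5), "true_positive": True},
    {"started": at(11, 0), "detected": at(11, 5), "contained": at(11, 6), "true_positive": False},
]

print(soc_metrics(sample_incidents))
# {'mttd_minutes': 20.0, 'mttr_minutes': 45.0, 'false_positive_share': 0.25}
```

Tracking these values per week or per use case makes it easier to see whether tuning and automation changes are actually helping.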

Ethical, legal, and privacy considerations

AI systems must respect privacy and comply with regulations. Key points:

- Limit collection to data needed for detection and employ anonymization where possible.

- Maintain clear policies for model decisions that affect user access or employment.

- Be transparent with stakeholders about automated decision-making and provide appeal mechanisms where outcomes have material impact.

- Consider biases in models (e.g., flagging certain user groups more often) and audit for fairness periodically.

Ethical design fosters trust and reduces operational and legal risk.

The future: prediction-driven defense and autonomous response

The trajectory of AI in cybersecurity is toward greater prediction, context-aware defense, and safer automation. Expect:

- Improved cross-organization intelligence sharing (privacy-preserving federated learning) to spot emerging threats earlier.

- More sophisticated behavioral baselines that blend business context with security signals.

- Safer autonomous responses where low-risk containment actions are executed automatically while high-risk decisions remain human-supervised.

- Wider adoption of causal and counterfactual models to understand attacker intent and anticipate next steps rather than only react.

These advances will make defenses more proactive and resilient across complex, interconnected systems.

Conclusion

AI-driven cybersecurity models transform reactive defenses into adaptive, predictive systems that detect threats in real time and forecast attacker behavior. Success hinges on data quality, integration with analyst workflows, careful governance, and ongoing adversarial hardening. When implemented thoughtfully, AI augments human defenders — reducing detection times, prioritizing the highest-risk incidents, and enabling organizations to stay a step ahead of increasingly automated threats.