Artificial Intelligence (AI) is the capability of machines or software to perform tasks that normally require human intelligence, such as understanding language, recognising patterns, learning from experience, making decisions, and adapting to new situations. In short, it is a way of building computer systems that can handle some of these tasks as well as humans do, and occasionally better.

Why the term “Artificial Intelligence” matters

The phrase “artificial intelligence” combines two core ideas:

- Artificial — meaning created by humans, not naturally occurring.

- Intelligence — meaning the ability to learn, reason, adapt, perceive, and make decisions.

When we say a system is “AI”, we’re implying that it goes beyond simple programmed rules and can handle complexity, change, uncertainty, or data in ways that mimic some aspects of what humans do.

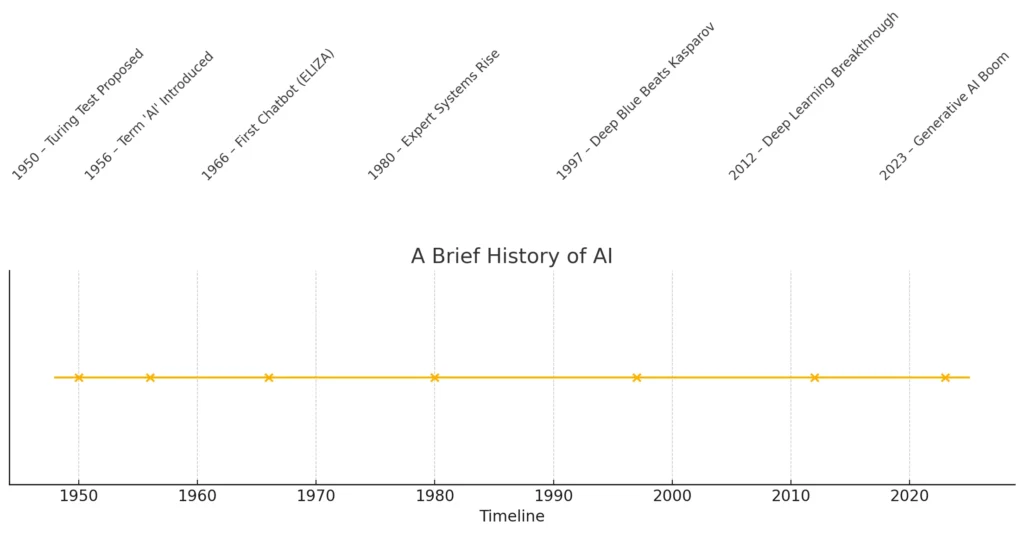

A brief history of AI

Understanding AI’s roots helps clarify what it is and what it isn’t.

- In the 1950s, researchers such as Alan Turing asked the question: Can machines think?

- Early AI focused on symbolic logic and rule-based systems: “If this, then that.”

- Through the decades the field moved through cycles of optimism, difficulty (sometimes called “AI winters”), new methods (neural networks, machine learning), and renewed hype.

- Today’s modern AI often involves “learning from data” using statistical and computational techniques.

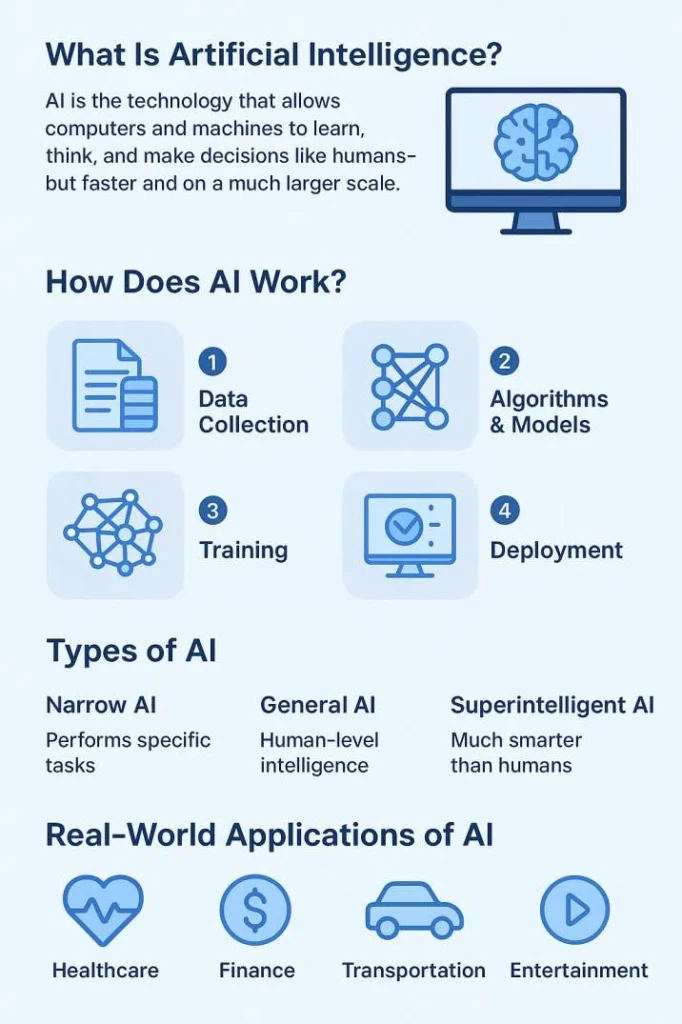

Key components of AI

To get a practical handle on AI, it helps to break it down into parts:

- Data

AI systems depend heavily on data: text, images, numbers, signals. Without data, there’s nothing to learn from.

- Algorithms / Models

These are the “recipes” that define how the system uses the data and what it tries to output, e.g., predicting a label, recognising an image, generating text.

- Learning / Adaptation

Rather than being hard-coded for every scenario, many AI systems improve their performance over time by learning from examples, errors, feedback.

- Inference / Decision-making

After learning, the AI applies its model to new inputs to make predictions or decisions, for example identifying a cat in a photo, recommending a product, or driving a car.

- Human & Ethical Context

AI does not operate in a vacuum. It interacts with human values, work, systems, environments. Thus we must consider trust, bias, safety, transparency.

Types and Categories of AI (Beginner-Friendly Guide)

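Artificial Intelligence (AI) can be classified in three major ways:

- By Capability – How much intelligence the system has

- By Functionality – How the system behaves: whether it only reacts, learns from past data, or (in theory) understands minds

- By Technology / Techniques – How the system is built and what type of model it uses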

Let’s explore both in a simple, clear way.

1. Types of AI by Capability

This classification shows how advanced the AI is compared to human intelligence.

A. Narrow AI (Weak AI)

Definition: AI designed to perform one specific task extremely well.

Examples:

- Siri / Google Assistant

- Chatbots

- Image recognition

- Spam filters

- Recommendation systems (Netflix, YouTube)

Why it matters:

This is the AI we use today. Very powerful, but limited to specific tasks.

B. General AI (Strong AI)

Definition: AI that can think, understand, and learn just like a human across any task.

It does not exist yet.

Capabilities (theoretical):

- Understands context like humans

- Learns multiple skills on its own

- Makes independent decisions

Status:

Researchers are exploring this, but it may be many years away.

C. Super AI (Artificial Superintelligence)

Definition: AI that becomes smarter than humans in every field—science, creativity, decision-making.

Status:

Purely theoretical, not yet real.

Why it’s discussed:

It raises ethical questions about control, safety, and future risk.

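2. Types of AI by Functionality

These categories describe how an AI system behaves: whether it only reacts to the current input, learns from past data, or (in theory) understands minds, including its own.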

A. Reactive Machines

Definition: The simplest AI systems that react only based on current input.

Characteristics:

- No memory

- No learning

- Only reacts to situations

Example:

Deep Blue (the chess computer that beat Kasparov)
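To make “no memory, no learning” concrete, here is a minimal sketch of a reactive system. It is a hypothetical thermostat, far simpler than Deep Blue, and the function name and temperature thresholds are illustrative assumptions. The decision depends only on the current reading, so the same input always produces the same action.

```python
def reactive_thermostat(current_temp_c: float, target_c: float = 21.0) -> str:
    """Pick an action from the current reading only: no memory, no learning."""
    if current_temp_c < target_c - 0.5:
        return "heat"
    if current_temp_c > target_c + 0.5:
        return "cool"
    return "idle"


# The reading 18.0 appears twice; nothing is remembered between calls,
# so it is handled identically both times.
for reading in [18.0, 21.2, 24.5, 18.0]:
    print(reading, "->", reactive_thermostat(reading))
```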

B. Limited Memory AI

Definition: AI that learns from past data and improves over time.

Characteristics:

- Learns patterns

- Makes predictions

- Used in modern AI models

Examples:

- Self-driving cars

- ChatGPT models

- Image recognition systems

Why it’s important:

This is the most common type of AI today.

C. Theory of Mind AI (Future concept)

AI that understands human emotions, beliefs, social interactions.

Example:

Not yet developed, but research is ongoing.

D. Self-Aware AI (Future concept)

AI that has its own consciousness.

Status:

Completely theoretical.

3. Categories of AI by Technology / Techniques

This classification explains how AI systems are built.

A. Machine Learning (ML)

AI that learns patterns from data instead of following rules written out by hand for every case.

Types of ML:

- Supervised Learning: Learns from labeled data (e.g., spam vs non-spam)

- Unsupervised Learning: Finds patterns in unlabeled data

- Reinforcement Learning: Learns by trial and error (used in gaming, robotics)

Examples:

- Email spam filters

- Stock prediction

- Fraud detection
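As an illustration of unsupervised learning, the sketch below groups unlabelled numbers into two clusters with a basic k-means loop. The purchase amounts and the `two_means` helper are made up for this example, and real projects would normally rely on a library implementation rather than hand-written code.

```python
def two_means(values, iterations=10):
    """Split 1-D values into two clusters with a basic k-means loop (k = 2)."""
    low, high = min(values), max(values)  # start the two centres at the extremes
    if low == high:
        raise ValueError("need at least two distinct values")
    for _ in range(iterations):
        # Assignment step: each value joins the nearer centre.
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        # Update step: move each centre to the mean of its group.
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return low, high


# Unlabelled purchase amounts: nobody tells the algorithm which are "small" or "large".
amounts = [5, 7, 6, 8, 95, 102, 99]
small_centre, large_centre = two_means(amounts)
print("cluster centres:", small_centre, round(large_centre, 2))
```

The algorithm is never given labels; the two groups emerge from the data alone, which is the defining feature of unsupervised learning.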

B. Deep Learning

A subset of ML that uses neural networks with many layers.

Used for:

- Image recognition

- Speech recognition

- ChatGPT-style models

- Medical image diagnosis

It powers the biggest AI breakthroughs.
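To show what “layers” means in practice, here is a minimal sketch of data flowing through a tiny two-layer network. The weights and biases are arbitrary numbers chosen for illustration (a trained network would learn them from data), and real deep learning models stack many more layers with far more neurons.

```python
def relu(x):
    """Activation function: keep positive values, clip negatives to zero."""
    return max(0.0, x)


def identity(x):
    """No-op activation used for the output layer in this sketch."""
    return x


def dense_layer(inputs, weights, biases, activation):
    """One layer: every neuron computes a weighted sum of all inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs):
    """Pass the inputs through a hidden layer (2 neurons), then an output layer (1 neuron)."""
    hidden = dense_layer(inputs, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    return dense_layer(hidden, [[1.0, 0.5]], [0.25], identity)


print(forward([1.0, 2.0]))
```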

C. Natural Language Processing (NLP)

AI that understands and generates human language.

Examples:

- ChatGPT

- Google Translate

- Voice assistants

- Text summarization
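Language systems work on numbers rather than raw text, so a common first step is to turn text into countable units. The sketch below shows one very simple representation, a “bag of words”. The helper names are illustrative assumptions, and this is far simpler than what systems like ChatGPT or Google Translate actually use.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Lower-case the text, split it into words, and count each word."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def shared_words(a, b):
    """Words that appear in both texts: a crude signal that they are related."""
    return sorted(set(bag_of_words(a)) & set(bag_of_words(b)))


counts = bag_of_words("The cat chased the mouse")
print(counts["the"], counts["cat"])
print(shared_words("The cat chased the mouse", "A mouse ran from the cat"))
```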

D. Computer Vision

AI that understands images and videos.

Examples:

- Face detection

- Medical image scanning

- Self-driving car cameras
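To a computer, an image is a grid of numbers. As a rough illustration, the sketch below finds a vertical edge in a tiny made-up grayscale image by comparing each pixel with its right-hand neighbour. The image values and the `horizontal_edges` helper are assumptions for this example; modern vision systems learn much richer filters from data.

```python
def horizontal_edges(image):
    """Absolute brightness difference between each pixel and its right-hand neighbour."""
    return [
        [abs(row[col + 1] - row[col]) for col in range(len(row) - 1)]
        for row in image
    ]


# A 3x6 image: dark on the left (0), bright on the right (9).
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Large values mark where brightness changes sharply, i.e. the edge.
for row in horizontal_edges(image):
    print(row)
```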

E. Robotics

AI + mechanical components to perform actions.

Examples:

- Industrial robots

- Delivery robots

- Humanoid robots

F. Expert Systems

AI systems that use if–then rules to make decisions.

Example:

Early medical diagnosis systems.
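A minimal sketch of the if–then idea is shown below. The rules are toy examples invented for illustration, not real medical knowledge, and the `diagnose` helper is hypothetical. The point is that every conclusion comes from a hand-written rule, and nothing is learned from data.

```python
# Each rule: IF all of these symptoms are present THEN suggest this conclusion.
RULES = [
    ({"fever", "cough"}, "possible flu"),
    ({"sneezing", "itchy eyes"}, "possible allergy"),
]


def diagnose(symptoms):
    """Return the conclusion of every rule whose conditions are all satisfied."""
    observed = set(symptoms)
    return [conclusion for required, conclusion in RULES if required <= observed]


print(diagnose(["fever", "cough", "headache"]))
print(diagnose(["cough"]))
```

Because the rules are explicit, it is easy to explain why the system reached a conclusion, but someone has to write and maintain every rule by hand.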

AI is not a single monolith; we can categorise it in a few useful ways.

| Category | Meaning | Examples |
|---|---|---|
| Narrow AI | AI for one task | Chatbots, assistants |
| General AI | Human-like intelligence | Not invented yet |
| Super AI | Smarter than humans | Theoretical |
| Reactive Machines | No memory | Deep Blue |
| Limited Memory | Learns from data | Modern AI models |
| ML / Deep Learning | Learns from data, patterns | AI apps today |
| NLP | Understands text & speech | ChatGPT |
| Computer Vision | Understands images | Face recognition |
| Robotics | AI + mechanics | Industrial robots |

Everyday examples of AI

To make this less abstract, here are some concrete uses:

- When you ask a voice assistant like Siri or Google Assistant a question, it uses AI to understand your speech and respond.

- When streaming platforms show you “Recommended for you” content, they’re using AI to analyse what you’ve watched and predict what you’ll like.

- When banks flag unusual transactions for fraud, they use AI to spot patterns that deviate from typical behaviour.

- In healthcare, AI can help identify patterns in medical images, assist diagnosis, or suggest treatments (always with human oversight).

- In manufacturing, AI can optimise supply chains, predict maintenance needs, and improve efficiency.
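The fraud example in the list above rests on one idea: measure how far a new event deviates from typical behaviour. Here is a minimal sketch of that idea using a simple statistical rule (a z-score). The transaction amounts, the threshold of 3, and the `flag_unusual` helper are assumptions for illustration; production fraud systems use much richer models and many more signals.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amount, threshold=3.0):
    """Flag a transaction that lies more than `threshold` standard deviations from the mean."""
    typical = mean(history)
    spread = stdev(history)
    if spread == 0:
        # All past amounts were identical, so any different amount is unusual.
        return new_amount != typical
    z_score = (new_amount - typical) / spread
    return abs(z_score) > threshold


past_purchases = [20.0, 25.0, 22.0, 30.0, 18.0, 27.0, 24.0]
print(flag_unusual(past_purchases, 26.0))   # close to the usual range
print(flag_unusual(past_purchases, 400.0))  # far outside the usual range
```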

Why AI is growing fast – and some numbers

AI is growing rapidly because of three major drivers: more data, more computing power, and better algorithms. Here are some statistics:

- In 2024, 78% of organisations reported using AI in at least one business function—up from 55% the year before.

- The global AI market is projected to reach about US $757.6 billion in 2025 and exceed US $1.8 trillion by 2030.

- A separate survey puts the share of companies worldwide using AI in at least one business function at 72%.

- 90% of tech workers report using AI in their jobs.

These numbers show that AI is no longer niche—it’s becoming mainstream.

Benefits of AI

Using AI offers many advantages:

- Efficiency: AI can automate repetitive tasks, freeing humans for higher-value work.

- Accuracy: In some domains (e.g., image recognition), AI can reach or exceed human accuracy.

- Scale: AI handles large volumes of data and decisions at speeds humans cannot match.

- Personalisation: AI enables experiences tailored to individuals (recommendations, targeted ads, adaptive learning).

- Innovation: AI opens new possibilities—autonomous vehicles, smart cities, new drugs, novel content forms.

Challenges and risks to know

AI is powerful, but it also brings important risks:

- Bias: If the training data is biased, AI decisions can be unfair or discriminatory.

- Transparency / Explainability: Many AI systems (especially deep learning) act as “black boxes” and it can be hard to understand why they made a decision.

- Job displacement: While AI will create jobs, it may also change or eliminate existing roles.

- Safety & control: As systems become more autonomous, ensuring they behave as intended becomes harder.

- Privacy and misuse: AI can be misused—surveillance, deepfakes, manipulation.

- Energy / Environmental cost: Training large AI models uses significant computing energy and infrastructure.

For example: A survey found that 51% of organisations using AI experienced at least one negative consequence, with nearly one-third reporting issues due to inaccuracy.
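The bias risk above can be checked with simple arithmetic. One basic test, sketched below, compares how often a system gives a favourable decision to different groups. The decisions are made-up data and `approval_rates` is a hypothetical helper; a large gap does not prove unfairness by itself, but it is a signal worth investigating.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Given (group, approved) pairs, return the share of approvals per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


# Made-up loan decisions: 8 of 10 approved in group A, 4 of 10 in group B.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
rates = approval_rates(decisions)
gap = max(rates.values()) - min(rates.values())
print(rates, "gap:", round(gap, 2))
```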

How does AI actually “learn”? (in simple terms)

Here’s a beginner-friendly breakdown of how an AI system often learns:

- Collect Data: For example, thousands of images of cats and dogs, each correctly labelled.

- Pre-process Data: The images might be resized, cleaned, converted into formats usable by the model.

- Choose or Build a Model: Pick a neural network architecture or other learning algorithm.

- Train the Model: The model sees the images, makes predictions (dog vs cat), compares to the correct label, and adjusts its internal parameters (weights) to reduce error.

- Validate / Test: Use separate data (images the model hasn’t seen) to check how well it generalises.

- Deploy / Inference: Use the trained model on new images to predict “dog” or “cat”.

- Monitor & Refine: Over time, gather more data, retrain, fix mistakes, adapt to changes.

This learning process means the AI improves with more (and better) data, and adapts to new patterns.
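The steps above can be sketched in a few lines of Python. To keep the example small, it makes several assumptions: each animal is described by two made-up measurements (weight and height) instead of an image, the scaling constants are arbitrary, and the model is a perceptron, one of the simplest learning algorithms, standing in for a neural network.

```python
# Step 1: collect labelled data, here (weight_kg, height_cm) pairs.
train = [
    ((4.0, 25.0), "cat"), ((20.0, 55.0), "dog"),
    ((5.0, 23.0), "cat"), ((30.0, 60.0), "dog"),
    ((3.5, 24.0), "cat"), ((25.0, 50.0), "dog"),
    ((4.5, 28.0), "cat"), ((15.0, 45.0), "dog"),
]
# Held-out examples the model never sees during training.
test = [
    ((4.25, 25.0), "cat"), ((4.5, 24.0), "cat"),
    ((25.0, 57.5), "dog"), ((20.0, 47.5), "dog"),
]


def preprocess(features):
    """Step 2: scale both measurements into a similar 0-1 range."""
    weight_kg, height_cm = features
    return (weight_kg / 30.0, height_cm / 60.0)


def predict(weights, bias, x):
    """Step 3: the model is a weighted sum followed by a threshold."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return "dog" if score > 0 else "cat"


def train_perceptron(examples, learning_rate=0.1, max_epochs=1000):
    """Step 4: predict, compare with the label, nudge the weights after each mistake."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in examples:
            x = preprocess(features)
            target = 1 if label == "dog" else 0
            guess = 1 if predict(weights, bias, x) == "dog" else 0
            error = target - guess  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # a full pass with no errors: stop training
            break
    return weights, bias


def accuracy(weights, bias, examples):
    """Step 5: share of examples the model labels correctly."""
    correct = sum(predict(weights, bias, preprocess(f)) == label for f, label in examples)
    return correct / len(examples)


weights, bias = train_perceptron(train)
print("train accuracy:", accuracy(weights, bias, train))
print("test accuracy:", accuracy(weights, bias, test))
# Step 6: inference on a new, unlabelled animal.
print("new animal:", predict(weights, bias, preprocess((28.0, 58.0))))
```

Step 7 (monitor and refine) would mean rerunning `train_perceptron` as new labelled examples arrive.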

How AI works under the hood (without heavy jargon)

To help you visualise what goes on behind the scenes:

- Think of a neural network as a series of layers of “neurons” (computational units) that pass information from input to output.

- At training time, each neuron adjusts how it reacts to inputs (via weights) based on feedback (errors).

- The process is loosely inspired by how the human brain learns patterns, though the two are biologically very different.

- Many different architectures exist: convolutional neural networks (for images), recurrent networks (for sequences), transformers (for language)—but all share the idea of data → model → prediction → feedback.
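The data → model → prediction → feedback loop can be shown with a single artificial neuron. In this minimal sketch, the neuron has one weight and one bias and learns the made-up relationship y = 2x + 1 by repeatedly nudging its parameters in the direction that shrinks the error (gradient descent). The data, learning rate, and number of passes are illustrative assumptions.

```python
# Data: inputs paired with the correct outputs (here, y = 2x + 1).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0  # the neuron starts out knowing nothing
learning_rate = 0.05

for epoch in range(1000):
    for x, target in data:
        prediction = weight * x + bias  # model -> prediction
        error = prediction - target     # feedback: how wrong was it?
        # Adjust each parameter in proportion to its share of the error.
        weight -= learning_rate * error * x
        bias -= learning_rate * error

print(round(weight, 3), round(bias, 3))
```

Large networks apply the same idea to millions or billions of weights at once, with the error passed backwards through the layers.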

What AI is not (clearing common myths)

- AI is not magic. It doesn’t understand in the human sense—it analyses patterns.

- AI is not always unbiased or “fair” by default—its quality depends on data and design.

- AI is not a replacement for humans in all tasks—it’s a tool, often best when humans and machines collaborate.

- AI is not a single “smart brain” that controls everything. Most systems are narrow and built for specific domains.

The impact of AI on jobs and workforce

AI will change how we work. Some insights:

- Many job roles will evolve, with tasks increasingly framed as human-machine collaboration.

- A report found that up to 30% of hours worked in the U.S. economy could be automated by 2030.

- At the same time, the demand for “AI-related roles” (data engineers, ML engineers) is growing rapidly.

- Therefore, adapting skills (data literacy, AI awareness, domain knowledge) becomes important.

AI in different industries

Here is how AI is being applied across sectors:

- Healthcare: AI helps detect disease (e.g., scanning images), predict patient outcomes, personalise treatment.

- Finance: Fraud detection, algorithmic trading, customer support chatbots.

- Retail & e-commerce: Personalised recommendation engines, inventory optimisation, customer service bots.

- Manufacturing & logistics: Predictive maintenance of machines, route optimisation, automation of repetitive tasks.

- Media & entertainment: Content generation, editing, language translation, virtual assistants.

- Transportation: Autonomous driving, route planning, traffic management.

How to start learning about AI (for beginners)

If you’re curious and want to dive in, here’s a simple path:

- Get comfortable with basic concepts: What is data, algorithm, model?

- Explore some online tutorials: Many free courses introduce machine learning and AI.

- Try small projects: Classify images, build a simple chatbot, explore datasets.

- Learn key tools: Python, libraries like TensorFlow or PyTorch, Jupyter notebooks.

- Understand ethical and responsible AI: Read about bias, fairness, transparency.

- Stay updated: AI is fast-moving. Read blogs, articles, follow reputable sources.

- Consider your domain: How does AI apply in areas you care about (business, health, education, arts)?

AI vs Machine Learning vs Deep Learning (Clear Comparison)

| Technology | What It Means | Example |
|---|---|---|
| AI | Machines showing intelligence | ChatGPT, robots |
| Machine Learning | Algorithm learns patterns | Spam email filters |
| Deep Learning | Multi-layer neural networks | Face recognition |

The future of AI – what to expect

Predicting the future is risky, but some trends are clear:

- AI will become more embedded in everyday products and services.

- Generative AI (creating images, text, music) will grow in capability and adoption.

- More focus on explainability, fairness, governance, and regulation—because as AI becomes powerful, the stakes rise.

- Value will come less from isolated AI tools than from integrating AI end-to-end: companies that redesign their workflows around AI tend to extract more value.

- AI infrastructure demands (data centres, computing power, energy) will continue to scale.

Key terms you should know

- Model: A mathematical representation that makes predictions or decisions.

- Training: The process of teaching a model using data.

- Inference: Using the trained model to make predictions on new data.

- Supervised learning: Learning from labelled data (input–output pairs).

- Unsupervised learning: Finding patterns without labelled output.

- Reinforcement learning: Learning by trial and error, receiving rewards/punishments.

- Neural network: A model structure inspired (loosely) by the human brain’s networks.

- Deep learning: Using neural networks with many layers.

- Generative AI: The branch of AI that generates new content (images, text, audio).

- Bias (in AI): Systematic error introduced by data or algorithm that leads to unfair outcomes.

- Explainability: The ability to understand how a model reached its decision.
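Of the terms above, reinforcement learning is perhaps the hardest to picture, so here is a minimal sketch of trial-and-error learning. An agent repeatedly chooses one of three actions, receives a reward of 1 or 0, and keeps a running estimate of how good each action is. The reward probabilities, the `run_bandit` helper, and the exploration rate are assumptions for illustration; this “multi-armed bandit” setup is a deliberately simplified form of reinforcement learning.

```python
import random


def run_bandit(win_probabilities, steps=10000, epsilon=0.1, seed=0):
    """Learn by trial and error which action pays off most often."""
    rng = random.Random(seed)
    estimates = [0.0] * len(win_probabilities)  # current belief about each action
    pulls = [0] * len(win_probabilities)        # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random action.
            action = rng.randrange(len(win_probabilities))
        else:
            # Exploit: otherwise pick the action that currently looks best.
            action = max(range(len(estimates)), key=lambda a: estimates[a])
        reward = 1.0 if rng.random() < win_probabilities[action] else 0.0
        pulls[action] += 1
        # Move the estimate towards the observed reward (running average).
        estimates[action] += (reward - estimates[action]) / pulls[action]
    return estimates, pulls


# The agent is never told these probabilities; it only sees the rewards.
estimates, pulls = run_bandit([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda a: estimates[a])
print("estimated reward per action:", [round(e, 2) for e in estimates])
print("best action found:", best)
```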

AI ethics and governance

AI ethics and governance is all about making sure AI behaves in a safe, fair, and responsible way as we use it in daily life. Think of it like setting rules for a powerful new technology so it doesn’t harm people or make unfair decisions. Ethics focuses on what’s “right and wrong” for AI, while governance creates the policies, checks, and accountability systems that keep AI under control.

Together, they help protect your privacy, reduce bias, make AI more transparent, and ensure humans remain in charge—especially when AI is used in important areas like healthcare, jobs, finance, or security. In simple terms, AI ethics and governance exist to make AI trustworthy, helpful, and safe for everyone.

These principles are reflected in frameworks such as the EU AI Act and UNESCO guidelines:

- AI should treat everyone fairly—no bias or discrimination

- Your data and privacy must be protected

- AI decisions should be clear, not confusing “black boxes”

- Humans should always have the final say in important decisions

- Companies must take responsibility if AI causes harm

- Rules, audits, and guidelines help keep AI under control

- Safeguards should prevent misuse such as deepfakes, scams, or unfair surveillance

- The overall aim is AI that people can trust

Final thoughts

In essence, artificial intelligence is a powerful set of tools that allow machines to do things that once were purely human territory: learning from experience, recognising patterns, making decisions. Its adoption is accelerating, and its impact is real in business, society, and our daily lives.

But it’s also a tool that must be used with care. The data it uses, the design choices made by developers, the context of its deployment—all matter deeply. For a beginner, thinking of AI simply as “smart computer programs that learn and act” is enough to start. From there, you can deepen your understanding, explore hands-on, and engage with both its promise and its responsibilities.

FAQs

1. Is AI dangerous?

AI is not dangerous on its own, but it can become risky if used irresponsibly. Problems arise when AI is trained on biased data, used to spread misinformation, or deployed without proper supervision. With clear rules, transparency, and human oversight, these risks can be managed and AI can remain beneficial.

2. Will AI replace jobs?

AI will replace some repetitive and routine jobs, but it will also create many new roles in technology, automation, design, and data handling. Instead of eliminating work, AI changes the type of skills needed. People who adapt and learn AI tools will benefit the most from this shift.

3. Do I need coding to learn AI?

No, you don’t need coding to start learning AI. Beginner-friendly tools like ChatGPT, Gemini, Midjourney, and Canva AI allow anyone to use AI without programming. Coding becomes helpful only if you want to build advanced AI models or pursue an AI engineering career.

4. Is AI always accurate?

AI is not always accurate because it depends on the quality of the data it was trained on. Errors can happen when AI lacks context, receives incomplete information, or interprets questions incorrectly. AI is a powerful assistant, but it still needs human review for important decisions.

5. Can AI think like humans?

No, AI cannot think or feel like humans. It does not have emotions, consciousness, or personal opinions. AI works by analysing patterns in data and generating the most likely response. It can mimic human behavior but does not have true understanding.