Generative Audio AI refers to artificial intelligence systems that can create human-like voices, realistic sound effects, and high-quality music automatically. These models learn from massive audio datasets, understand patterns in sound, and generate new audio that feels natural, expressive, and extremely lifelike. Whether it’s AI voice cloning, auto-composed music, or Hollywood-grade sound design, generative audio systems are reshaping how sound is created, produced, and consumed.

Why Generative Audio AI Is Changing Everything

The world is becoming increasingly audio-driven. Podcasts, video content, audiobooks, virtual assistants, and even video games depend heavily on sound. Traditionally, voice recording needed studios, human actors, musicians, and audio engineers.

Generative Audio AI changes this completely.

With the right model, you can:

- Generate studio-quality voiceovers in seconds

- Create original music without a composer

- Produce realistic sound effects for films or games

- Clone your voice for content or accessibility

- Generate multilingual voice content instantly

This revolution is powered by advanced deep learning models that understand pitch, rhythm, tone, emotion, and human speech patterns at a microscopic level.

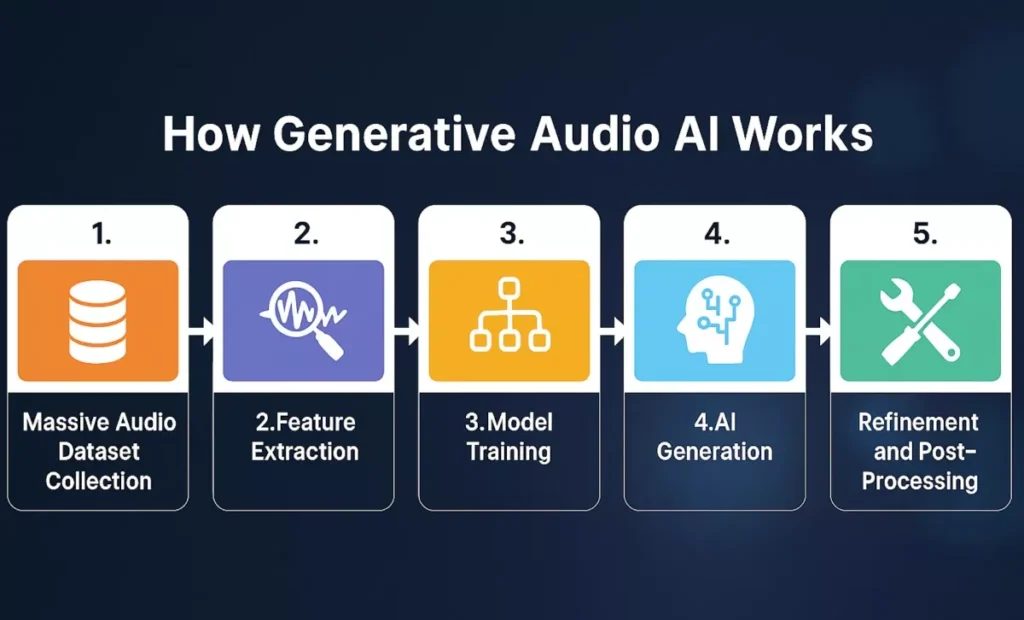

How Generative Audio AI Works

Generative Audio AI may feel magical, but behind the scenes, it follows a clear and structured process. Each step helps the AI understand sound deeply—how it moves, changes, and expresses emotion—so it can recreate audio with human-like realism. Below is a detailed breakdown of how the entire system works from start to finish.

1. Massive Audio Dataset Collection

The process begins with gathering huge libraries of audio. These datasets teach the AI what real-world sound looks and feels like.

AI models are trained on:

- Human speech recordings from different ages, accents, and emotions

- Various musical genres ranging from classical to EDM

- Individual instrument samples such as piano, guitar, drums, violin, and synths

- Environmental sound effects like rain, traffic, wind, footsteps, or crowd noise

By learning from diverse recordings, the AI forms a rich understanding of rhythm, tone, loudness, texture, pacing, and other characteristics that make audio feel authentic.

This dataset acts as the foundation of the model’s “sound knowledge.”

2. Feature Extraction

Once the audio is collected, the AI breaks each sound into small, detailed components. This is similar to zooming into audio under a microscope.

The model analyzes patterns such as:

- Frequencies – how high or low a sound is

- Waveforms – the shape of the sound over time

- Phonemes – the smallest units of speech

- Rhythm structures – timing, beats, and tempo

- Timbre – the unique quality that distinguishes one instrument or voice from another

Through this stage, the model learns audio behavior and gains the ability to recognize what makes a sound unique, expressive, or realistic.

3. Model Training

In this phase, deep learning models—often transformers or neural networks—study the patterns extracted in the previous step.

During training, the AI:

- Predicts what sound should come next in a sequence

- Learns emotional cues like excitement, sadness, calmness, or urgency

- Understands long-term structure in music or speech

- Refines its ability to generate smooth transitions

- Learns the difference between natural and artificial sound

This training process is similar to how language models learn to predict the next word—except here, the AI predicts audio patterns, tones, notes, and textures.

4. AI Generation

After the model finishes training, it gains the ability to generate original audio from scratch.

It can:

- Create brand-new voices that sound natural, human-like, and expressive

- Compose fresh melodies across different moods, genres, or tempos

- Produce cinematic ambience such as rainstorms, forests, or futuristic sci-fi soundscapes

- Mimic speech with emotion, replicating human-like pauses, breathing, and tone shifts

This is where the AI becomes truly creative, producing audio that can range from simple sound effects to fully-produced music tracks.

5. Refinement and Post-Processing

Raw AI-generated audio often needs polishing. Additional neural layers or special algorithms refine the sound to make it production-ready.

This phase includes:

- Noise reduction to remove unwanted background artifacts

- Pitch correction to ensure accuracy, especially for singing AI models

- Dynamic balancing so loudness stays consistent

- Quality enhancement to make the audio richer and clearer

By the end of this step, the output sounds smooth, natural, and ready for use in films, games, music, voiceovers, or applications.

Core Technologies Powering Generative Audio AI

This expanded overview dives into the foundational technologies that enable modern AI to create, transform, and understand sound. Each section explains how a class of models works, what it does best, and where it’s commonly applied in real-world audio systems.

A. Deep Neural Networks

Deep neural networks form the structural backbone of most generative audio systems. By training on vast, labeled and unlabeled audio collections, DNNs learn to detect extremely fine-grained patterns in frequency, timbre, timing, and dynamics.

- What they enable: accurate reproduction of voice tone and pitch; modeling of long-term musical form such as verse and chorus; synthesis of convincing acoustic textures like room reverberation and instrument body.

- Why they matter: DNNs provide the core representational power that downstream modules build on, and they appear in nearly every contemporary audio model, from speech enhancers to music synthesizers.

B. Generative Adversarial Networks

Generative adversarial networks excel at producing high-fidelity, realistic sound effects and short audio events. A GAN pairs a generator that proposes audio samples with a discriminator that judges realism, and the two networks iteratively improve until generated sounds are hard to distinguish from recordings.

- Typical outputs: footsteps, engine and mechanical noises, wind and water ambience, animal vocalizations.

- Strengths: exceptional realism for short, texture-rich sounds and fine-grained timbral detail that benefit games, film, and immersive audio design.

C. Transformers

Transformers brought a step change to audio modeling by enabling efficient learning of long-range dependencies and expressive timing. Their attention mechanisms let models consider distant context when producing each audio frame, which is crucial for natural-sounding speech and coherent musical phrases.

- Key capabilities: modeling emotional nuance and prosody, producing smooth transitions between phonemes and notes, preserving long-term structure across sentences or musical sections.

- Notable uses: state-of-the-art speech models and music generators rely on transformer architectures to deliver fluent, context-aware audio.

D. Text-to-Speech Engines with Neural Rendering

Neural TTS systems have moved far beyond monotone, robotic voices to produce expressive, human-quality speech. These engines combine linguistic analysis with neural waveform rendering to reproduce subtle human vocal traits.

- What neural TTS captures: realistic breath and micro-pauses, dynamic emphasis on words, a range of emotional tones, and the ability to infer pronunciation across multiple languages.

- Common applications: audiobooks, conversational agents and customer support bots, narrated video content, and accessibility tools that read text aloud with natural cadence.

E. Diffusion Models for Music and Voice

Diffusion models generate audio by progressively transforming noise into structured sound through a learned denoising process. This gradual refinement produces highly detailed outputs and gives designers fine control over the generation trajectory.

- What they produce best: lifelike singing voices, layered and evolving soundscapes, and high-resolution musical tracks with rich harmonic content.

- Research trend: diffusion-based approaches are rapidly gaining traction for music and voice synthesis because they balance fidelity with controllability.

F. Voice Cloning and Few-Shot Learning

Recent advances let models reproduce a speaker’s voice from very small samples of audio, enabling fast personalization and realistic voice transfer. Few-shot techniques can approximate a voice from just a few seconds, while larger datasets yield studio-grade clones.

- Typical data needs: a few seconds for a rough match, tens of seconds for higher fidelity, and full datasets for professional-level cloning.

- Applications: personalized virtual assistants, AI-driven influencers and content creators, automated dubbing for film and TV, and assistive technologies that restore a person’s voice.

G. Multimodal Audio Models

Multimodal models fuse audio with other signals such as text, images, MIDI, and motion data to generate context-aware sound. By combining modalities, these systems understand intent and environment, producing audio that aligns with visual or symbolic cues.

- How they work in practice: a video of rain can trigger generation of matching ambient sound; a written script can be rendered into emotionally appropriate dialogue; a short melody can be expanded into a full instrumental arrangement.

- Impact: multimodal integration enables more coherent, immersive, and semantically relevant audio for film, games, virtual production, and interactive media.

Applications of Generative Audio AI Across Industries

Generative Audio AI is transforming nearly every sector that relies on voice, sound, or music. From blockbuster films to personalized learning tools, its influence is expanding rapidly. Below is an in-depth look at how various industries are adopting and benefiting from this technology.

i. Media & Entertainment

Generative Audio AI is becoming a game-changer for film studios, streaming companies, and animation houses. Tasks that once took hours—or even entire teams—can now be automated with precision.

AI enables:

- Automated movie dubbing with multilingual, emotion-aware voices

- Realistic video game sound effects generated on the fly

- AI singers and music generation for films and ads

- Professional podcast voiceovers created instantly

These innovations not only lower production costs but also speed up timelines. Studios can create more content, experiment with more ideas, and deliver higher-quality audio without stretching their budgets.

ii. Content Creation

Independent creators are among the biggest beneficiaries of audio AI. With even simple tools, anyone can now produce high-quality audio content without advanced equipment or expensive talent.

Creators use AI to generate:

- Narrations for tutorials, reviews, and storytelling

- Explainer videos with clear, engaging voiceovers

- YouTube scripts read aloud with natural-sounding AI voices

This means creators can publish faster, maintain consistent audio quality, and scale their production—all without hiring voice actors or renting recording studios.

iii. Marketing & Advertising

Modern marketing requires speed, personalization, and global reach—and AI-generated audio helps brands achieve all three.

AI voices can:

- Match brand personality, from friendly to authoritative

- Speak multiple languages, supporting worldwide campaigns

- Maintain consistent tone across ads, platforms, and regions

This allows brands to produce ads, social media content, customer onboarding videos, or product announcements quickly, while keeping the same recognizable voice. The result? Faster turnaround and higher engagement.

iv. Accessibility & Assistive Technologies

Generative Audio AI is making digital experiences more inclusive and empowering for people with disabilities.

AI tools now provide:

- Custom AI-generated voices for individuals who have lost their voice

- Improved screen-reader voices that sound more natural and less robotic

- Real-time speech assistance for communication support

These advancements give users greater independence, more expressive communication, and a better connection with the digital world.

v. Gaming

The gaming industry relies heavily on immersive sound—and AI is elevating it to new heights.

Games now use generative audio for:

- Procedural soundscapes that evolve based on player actions

- AI-generated NPC dialogue that is dynamic and responsive

- Music that adapts to gameplay intensity or environment

This transforms the gaming experience into something more atmospheric, interactive, and deeply engaging for players.

vi. Music Production

Musicians, composers, and producers are using generative audio as a creative partner rather than a replacement.

AI assists artists by helping them:

- Generate chord progressions for new compositions

- Create unique melody ideas in any style or mood

- Produce full tracks with harmonies, beats, and layered arrangements

- Simulate instruments they don’t physically have access to

By removing creative blocks and offering infinite inspiration, AI becomes a powerful collaborator—expanding what artists can achieve, not limiting it.

Advantages of Generative Audio AI

- Speed and Productivity: Generative audio tools compress workflows: what once took hours in recording and editing can be produced in minutes, accelerating iteration and delivery.

- Lower Production Costs: By reducing reliance on studio time, specialized engineers, and multiple recording sessions, AI cuts overhead and makes high-quality audio affordable for smaller teams.

- Boundless Creative Exploration: AI can invent novel timbres, textures, and sonic combinations, enabling sound designers and musicians to explore ideas that would be difficult or impossible to create manually.

- Instant Multilingual Output: Voiceovers and spoken content can be generated across languages quickly, simplifying localization and expanding reach without lengthy re-recording processes.

- Reliable Consistency: AI maintains uniform tone, pacing, and style across takes and projects, ensuring brand voice and narration remain steady over time.

- Improved Accessibility: These tools make content more inclusive by providing natural-sounding narration, personalized voices, and assistive audio options for people with speech or hearing challenges.

Challenges and Ethical Considerations

Generative audio AI unlocks powerful creative and practical possibilities, but it also raises complex ethical, legal, and social questions. Below is a reorganized, detailed view of the main challenges, why each matters, and practical steps or considerations for mitigation.

Deepfake Voices

- Overview: AI can reproduce a person’s voice with startling fidelity, enabling realistic impersonations.

- Why it matters: Fraud and misinformation become easier when attackers can fabricate audio of public figures, private individuals, or trusted spokespeople. This undermines trust in media and can cause financial, reputational, or personal harm.

- Mitigations: Authentication tools (watermarks, provenance metadata), legal frameworks that criminalize malicious impersonation, and public awareness campaigns to teach people how to verify audio sources.

Copyright and Licensing Issues

- Overview: Models trained on existing music and recordings can produce outputs that closely resemble copyrighted works or reuse protected elements.

- Why it matters: Creators, labels, and rights holders may face loss of control and revenue, while users risk legal exposure for distributing or monetizing derivative audio.

- Mitigations: Clear licensing standards for training data, transparent model documentation about sources, automated similarity detection, and new licensing models that compensate original artists.

Job Displacement and Economic Impact

- Overview: Automation of voiceover, sound design, and some music production tasks can reduce demand for certain human roles.

- Why it matters: Professionals such as voice actors, session musicians, and audio engineers may see reduced opportunities or downward pressure on rates, shifting the structure of creative labor.

- Mitigations: Policies and business practices that support reskilling, fair compensation for human creators, hybrid workflows that combine human artistry with AI efficiency, and industry standards that credit human contributors.

Bias in Training Data

- Overview: If training datasets lack demographic, linguistic, or cultural diversity, generated audio will reflect those gaps.

- Why it matters: Outputs may misrepresent accents, dialects, gendered speech patterns, or musical traditions, reinforcing stereotypes and excluding underrepresented voices.

- Mitigations: Curate diverse, representative datasets; audit models for biased outputs; involve community stakeholders in dataset design; and provide controls that let users specify cultural or stylistic parameters.

Authenticity and Emotional Nuance

- Overview: AI can mimic surface-level prosody and timbre but still struggles with the deep, context-sensitive emotional subtleties of human performance.

- Why it matters: Audiences can detect inauthenticity in storytelling, acting, or intimate communication, which can reduce emotional impact and trust. Misapplied synthetic voices may also cause ethical discomfort when used without consent.

- Mitigations: Use synthetic voices transparently and ethically, reserve AI for appropriate contexts, combine human performers with AI tools for final output, and develop evaluation metrics that measure perceived authenticity.

Future of Generative Audio AI (2025–2032)

The next decade is set to redefine how humans interact with sound. As models grow more intelligent and datasets become richer, Generative Audio AI will evolve from a creative tool into a powerful companion capable of understanding emotion, context, and real-time intent. Below are the transformative changes we can expect between now and 2032.

A. Real-Time AI Voice and Sound Generation

Generative Audio AI will soon produce fully realistic voices instantly—even during live conversations or broadcasts.

This means:

- Real-time voice cloning in meetings or webinars

- Instant translation with natural emotional expression

- On-the-fly sound effects that match real-world events

Live entertainment, streaming, and communication will all shift toward AI-enhanced immediacy.

B. Fully AI-Composed Feature Film Soundtracks

By 2032, entire movie soundtracks and background scores may be composed by AI trained on decades of musical styles. These systems will:

- Mimic well-known composers

- Adapt music to the emotional beats of scenes

- Generate variations faster than traditional methods

Filmmakers will gain infinite flexibility when crafting cinematic experiences.

C. Emotionally Aware AI Voices

Future AI voices will not just speak; they will feel and respond.

Expect systems that:

- Adjust tone based on the listener’s mood

- Detect confusion or excitement and adapt their delivery

- Create personalized emotional dialogue for storytelling or support apps

This evolution will make AI interactions more human-like, empathetic, and engaging.

D. AI-Powered Sound Engines in VR/AR

Immersive technologies like virtual and augmented reality will rely heavily on generative audio to create believable worlds.

AI will enable:

- Hyper-realistic soundscapes that shift with movement

- AI-driven footsteps, echoes, and environmental effects

- Audio that responds dynamically to user behavior

This will make digital environments feel more alive and responsive than ever before.

E. AI as a Personal Music Composer

Generative models will act as personal music creators who understand your preferences better than any streaming platform. AI will:

- Create playlists synced to your heart rate, mood, or daily routine

- Produce custom soundtracks for meditation, workouts, or focus sessions

- Compose songs tailored to your voice or emotional state

Music becomes more personal, adaptive, and experiential.

F. Regulation and Authentication

As AI audio evolves, so will the need for transparency and safety. Future systems will include:

- Voiceprints that verify whether audio is human or AI

- Watermarking embedded in generated sound

- Strict ethical frameworks governing voice ownership and cloning

These safeguards will protect creators, users, and organizations from misuse.

Conclusion

Generative Audio AI is redefining how sound, voice, and music are created. By combining deep neural networks, transformers, GANs, diffusion models, and multimodal learning, AI can now produce audio that rivals human performance in quality, emotion, and realism. From entertainment and marketing to accessibility and education, its impact is reshaping every industry that depends on sound.

As technology advances, AI-driven audio will become more natural, more expressive, and more accessible to everyone—ushering in a new era of creativity powered by intelligent sound generation.

FAQs

1. What is Generative Audio AI?

Generative Audio AI is a type of artificial intelligence that creates new audio—such as human-like voices, realistic sound effects, and original music—by learning patterns from large audio datasets. It can mimic natural speech, compose melodies, and produce soundscapes automatically.

2. How does Generative Audio AI create realistic voices and music?

It works by analyzing massive amounts of recorded audio, breaking it into patterns (like pitch, rhythm, and phonemes), and using deep learning models such as transformers, GANs, and diffusion models. These models then generate new sound based on what they’ve learned, resulting in natural and high-quality audio.

3. What are the main technologies behind Generative Audio AI?

Key technologies include neural networks, transformer architectures, GANs (Generative Adversarial Networks), diffusion models, text-to-speech (TTS) systems, and few-shot voice cloning frameworks. These systems work together to produce lifelike voices, immersive sound effects, and AI-generated music.

4. What can Generative Audio AI be used for?

It is used in filmmaking, gaming, podcasting, content creation, advertising, accessibility tools, audiobooks, virtual assistants, and music production. Industries use it to automate voiceovers, create sound effects, generate background tracks, and personalize audio experiences.

5. Is Generative Audio AI safe and ethical to use?

Yes, but it requires responsible use. While the technology is powerful, concerns include deepfake voices, misuse of cloned voices, copyright issues, and data privacy. Many companies are developing tools like voice watermarks, consent systems, and authenticity checks to ensure ethical and safe usage.