In 2026, the landscape of artificial intelligence is dominated by multimodal “agentic” models that have moved far beyond simple text generation to autonomous action, real-time sensory processing, and deep reasoning. The top AI models powering next-generation applications this year include OpenAI’s OmniNet-X, Google DeepMind’s Gemini Quantum, Anthropic’s Claude 4 Opus-Agent, Meta’s open-source giant Llama 5-Multimodal, and Cerebral AI’s on-device NeuroEdge-S. These foundational systems are defined by their ability to understand complex physical realities through video, reason through multi-step problems using “System 2” logic, and execute tasks across disparate software environments with minimal human intervention, fundamentally shifting software development from creating tools to governing autonomous workforces.

Introduction: The Shift from Chatbots to Agents

Welcome to 2026. It feels like decades since the “chatbot explosion” of 2023. Back then, we marvelled at an AI’s ability to write a poem or summarize an email. Today, those capabilities are merely table stakes—the background noise of computing.

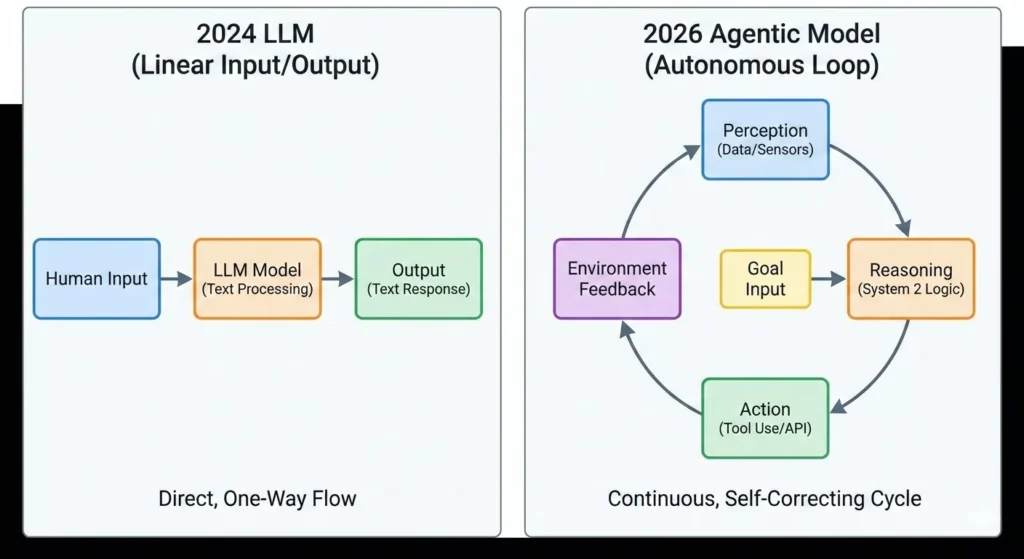

The intervening years saw a massive paradigm shift in AI research. We moved away from merely scaling up parameter counts and focused on architectural innovations that allow for genuine reasoning and agency. The models of 2026 don’t just wait for a prompt; given a high-level goal such as “optimize our supply chain for Q3,” they actively monitor data, interface with diverse vendors, negotiate prices, and update inventory systems autonomously.

This article explores the titans of intelligence that are currently running the world’s most advanced applications, from hyper-personalized medicine to autonomous city infrastructure. We will look beyond the hype to understand the technical architecture and practical applications of 2026’s leading AI models.

The 2026 Paradigm: Key Technological Pillars

To understand why this year’s models are different, we must identify the three technological pillars that define the current generation of AI:

- True “World Model” Understanding: Previous models understood statistical relationships between text tokens. 2026 models possess a rudimentary understanding of physics, causality, and temporal progression. They don’t just see pixels in a video; they understand that if a ball rolls behind a couch, it still exists. This is crucial for robotics and real-world application integration.

- Neuro-Symbolic Reasoning (System 2): The models now combine the intuitive, fast pattern matching of neural networks (System 1 thinking) with structured, logical, step-by-step reasoning capabilities (System 2 thinking). When faced with a complex math problem or a legal dilemma, the model knows when to “slow down” and verify its steps, drastically reducing hallucinations in high-stakes environments.

- Native Multimodal Agency: Multimodality is no longer bolted on. These models process audio, video, code, and text simultaneously in a single neural pathway. Furthermore, they are designed as “agents”—software entities capable of using a mouse, keyboard, and APIs just like a human to accomplish tasks across different applications.
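To make the object-permanence example in the first pillar concrete, here is a toy sketch built on a constant-velocity motion model, a classic heuristic from object tracking. It illustrates the idea under that assumption only; it is not a description of how any 2026 model works internally, and the `Track` class and its `max_unseen` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Track:
    """One tracked object, e.g. a ball, under a constant-velocity assumption."""
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    frames_unseen: int = 0

    def step(self, observation: Optional[Tuple[float, float]], max_unseen: int = 30) -> bool:
        """Advance one video frame. Returns False once the track should be dropped."""
        if observation is None:
            # Occluded (e.g. behind the couch): the object still exists,
            # so extrapolate its position instead of forgetting it.
            self.x += self.vx
            self.y += self.vy
            self.frames_unseen += 1
            return self.frames_unseen <= max_unseen
        ox, oy = observation
        if self.frames_unseen == 0:
            # Seen in consecutive frames: estimate velocity from the displacement.
            self.vx, self.vy = ox - self.x, oy - self.y
        # After an occlusion, keep the old velocity and re-anchor to the new sighting.
        self.x, self.y = ox, oy
        self.frames_unseen = 0
        return True


if __name__ == "__main__":
    ball = Track(x=0.0, y=0.0)
    ball.step((1.0, 0.0))  # visible, moving right at 1 unit per frame
    ball.step(None)        # rolls behind the couch
    ball.step(None)
    print(ball.x, ball.frames_unseen)  # prints 3.0 2
```

The tracker keeps "believing in" the ball while it is hidden and only gives up after `max_unseen` frames, which is the behavior the pillar describes in miniature.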

The Top 5 Leading AI Models of 2026

Here is an in-depth look at the models defining the current technological landscape.

1. OpenAI OmniNet-X: The Reality Interface

The Successor to GPT: OmniNet-X represents the culmination of OpenAI’s push towards Artificial General Intelligence (AGI). It abandons the “GPT” nomenclature to emphasize its complete departure from text-only roots.

Key Differentiator: Seamless Reality Integration.

OmniNet-X is the premier model for real-time video understanding and interaction. Beyond analyzing static images, it can watch a live video stream of a mechanic working on an engine and provide real-time augmented reality overlay instructions, identifying parts and suggesting tools as the work unfolds, with latency low enough to feel instantaneous.

- Architecture: A massive MoE (Mixture of Experts) model optimized for streaming data.

- Primary Use Case: Powering next-gen AR glasses, real-time robotics control, and instant, flawless translation of complex video content.
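The article describes OmniNet-X only as a Mixture of Experts (MoE) model, so its specifics are unknown. As a generic sketch of the technique, here is top-k gating in plain Python: a router scores every expert, only the k best are run, and their outputs are mixed by softmax weights renormalized over the selected experts. The function names and toy experts are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]  # here each expert maps a vector to one of the same length


def softmax(logits: Sequence[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, gate_weights: List[Vector], experts: List[Expert], k: int = 2) -> Vector:
    """Route x to the top-k experts and return the gate-weighted sum of their outputs."""
    # One router logit per expert: dot product of the input with that expert's gate vector.
    logits = [sum(w * xi for w, xi in zip(gw, x)) for gw in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Renormalize over the selected experts only; unselected experts are never evaluated,
    # which is why MoE models can be large in total but comparatively cheap per token.
    probs = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for p, i in zip(probs, top):
        y = experts[i](x)
        out = [o + p * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    experts: List[Expert] = [
        lambda v: [2.0 * a for a in v],
        lambda v: [3.0 * a for a in v],
        lambda v: [-1.0 * a for a in v],
    ]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
    print(moe_forward([1.0, 0.5], gates, experts, k=2))
```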

2. Google DeepMind Gemini Quantum: The Deep Reasoner

The Research Powerhouse: Building on the Gemini foundations, the “Quantum” iteration (a branding nod to the immense compute behind its training, which included Google’s nascent quantum prototypes for certain optimization steps) is the undisputed king of complex logic.

Key Differentiator: Extended Horizon Reasoning.

While OmniNet-X excels at immediate perception, Gemini Quantum excels at long-term planning. It can hold vast amounts of context—up to 10 million tokens active simultaneously—allowing it to ingest an entire corporation’s legal history and deduce strategic risks. It utilizes advanced “tree-of-thought” search algorithms natively to explore thousands of potential outcomes before suggesting a course of action.

- Architecture: Highly structured neuro-symbolic hybrid architecture with a massive active memory bank.

- Primary Use Case: Scientific discovery (protein folding, materials science), complex financial modeling, and enterprise-level strategic planning.
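The “tree-of-thought” search mentioned above can be sketched as a breadth-first beam search over partial plans: at each depth, every surviving plan is expanded into candidate next steps, and only the highest-scoring few are kept. In a real system `expand` and `score` would be model calls; here they are hypothetical plain functions, and the beam width and depth are arbitrary choices.

```python
from typing import Callable, List, Tuple

Plan = Tuple[str, ...]  # a partial chain of reasoning steps


def tree_of_thought(
    root: Plan,
    expand: Callable[[Plan], List[str]],
    score: Callable[[Plan], float],
    beam_width: int = 3,
    depth: int = 3,
) -> Plan:
    """Breadth-first tree-of-thought search with a fixed beam; returns the best plan found."""
    frontier: List[Plan] = [root]
    for _ in range(depth):
        # Branch: propose several possible next steps for every surviving plan.
        candidates = [plan + (step,) for plan in frontier for step in expand(plan)]
        if not candidates:
            break
        # Prune: keep only the most promising partial plans.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


if __name__ == "__main__":
    # Toy problem: build a three-step plan, preferring "hedge" steps (score = count of "hedge").
    best = tree_of_thought(
        root=(),
        expand=lambda plan: ["expand", "hedge", "divest"],
        score=lambda plan: plan.count("hedge"),
    )
    print(best)  # prints ('hedge', 'hedge', 'hedge')
```

The beam keeps the search from exploding: with branching factor b, beam width w, and depth d, only about w·b candidates are scored per level rather than b^d plans in total.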

3. Anthropic Claude 4 Opus-Agent: The Trustworthy Executive

Safety First, Action Second: Anthropic has maintained its lead in AI safety through “Constitutional AI.” Claude 4 Opus-Agent is the model enterprises trust to actually do work without causing reputational damage.

Key Differentiator: Safe Autonomous Workflows.

Claude 4 is designed specifically for business process automation. You can grant it access to your Salesforce, Slack, and email, and instruct it to “manage customer onboarding.” It will autonomously coordinate between departments, draft personalized emails, and update CRM records. Crucially, it has built-in “interruptibility”—it knows when it’s unsure and will pause to ask a human manager for clarification before executing a sensitive action.

- Architecture: Focused on interpretability and reliable adherence to complex, natural language guardrails.

- Primary Use Case: Automated HR, complex customer service resolution, and regulated industry compliance management.
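The “interruptibility” behavior described above amounts to a gate in front of every action. Here is a minimal sketch, assuming the agent attaches a self-estimated confidence and a sensitivity flag to each proposed action (both hypothetical fields) and that sensitive actions face a stricter threshold. The thresholds are arbitrary, this is not Anthropic’s actual mechanism, and it presumes the confidence scores are well calibrated, which is itself a hard problem.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    ASK_HUMAN = "ask_human"


@dataclass
class ProposedAction:
    description: str
    confidence: float  # agent's self-estimated probability the action is correct (assumed calibrated)
    sensitive: bool    # e.g. moves money, touches personal data, or emails a customer


def gate(action: ProposedAction, routine_threshold: float = 0.8,
         sensitive_threshold: float = 0.97) -> Decision:
    """Execute only when confidence clears the bar for this kind of action; otherwise pause."""
    threshold = sensitive_threshold if action.sensitive else routine_threshold
    if action.confidence >= threshold:
        return Decision.EXECUTE
    return Decision.ASK_HUMAN


if __name__ == "__main__":
    queue = [
        ProposedAction("Update CRM stage for new customer", 0.92, sensitive=False),
        ProposedAction("Issue $40,000 refund", 0.92, sensitive=True),
    ]
    for a in queue:
        print(a.description, "->", gate(a).value)
```

With the same 0.92 confidence, the routine CRM update goes through while the refund is held for a human manager.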

4. Meta Llama 5-Multimodal: The Open Source Backbone

Democratizing Intelligence: Meta continues to shake up the industry by releasing near-state-of-the-art models for free. Llama 5 is the backbone of the decentralized AI movement in 2026.

Key Differentiator: Customizable Power.

While slightly less capable out of the box than OmniNet-X or Gemini Quantum on extreme reasoning tasks, Llama 5’s strength is its malleability. It is the most fine-tunable model available. Entire industries (like healthcare or law) have created highly specialized variants of Llama 5 that outperform generalist closed-source models in their specific niches.

- Architecture: Highly efficient, dense architecture designed for relatively easy fine-tuning on varied hardware.

- Primary Use Case: On-premise enterprise solutions where data privacy is paramount, highly specialized vertical applications, and academic research.
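The article does not say how organizations fine-tune Llama 5. One widely used technique for adapting open-weight models cheaply is LoRA (low-rank adaptation): the pretrained weight matrix W stays frozen, and only two small matrices B (d_out × r) and A (r × d_in) are trained, giving an effective weight W + (α/r)·BA. The sketch below shows just that weight arithmetic in plain Python, not a training loop; the function names are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive (n x m) @ (m x p) matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where W is frozen and only B and A are trained."""
    rank = len(a)         # A has shape (r x d_in)
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> (d_out x d_in)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d_out: int, d_in: int, rank: int) -> Tuple[int, int]:
    """(full fine-tuning count, LoRA count) for a single weight matrix."""
    return d_out * d_in, rank * (d_in + d_out)


if __name__ == "__main__":
    full, lora = trainable_params(4096, 4096, rank=8)
    print(full, lora)  # prints 16777216 65536
```

For a hypothetical 4096 × 4096 projection with r = 8, that is 65,536 trainable values instead of 16,777,216, roughly 0.4% of the full matrix. B is typically initialized to zero, so the adapted model starts out identical to the base model.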

5. Cerebral AI NeuroEdge-S: The Everywhere Intelligence

The Rise of Edge AI: A new entrant that gained massive traction in late 2025, Cerebral AI focuses not on building the biggest model but the most efficient one.

Key Differentiator: On-Device Agency.

The “S” stands for Small. This model is capable of running locally on high-end smartphones, laptops, and IoT devices without an internet connection. It brings agentic capabilities—like organizing your phone’s files or editing photos via natural language—without sending private data to the cloud. It powers the “intelligence of things.”

- Architecture: Heavily quantized, distilled model built with novel small language model (SLM) techniques.

- Primary Use Case: Mobile OS integration, smart home automation, and privacy-centric personal assistants.
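“Heavily quantized” usually means storing weights as low-precision integers instead of 16- or 32-bit floats. NeuroEdge-S’s actual scheme is not described, so as an illustration under that assumption, here is symmetric per-tensor int8 quantization: each weight w is stored as an integer q in [-127, 127] plus one shared float scale, so that w ≈ scale · q.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: returns integer codes and one scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() maps each weight to the nearest code; the clamp guards against float edge cases.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [scale * q for q in codes]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.003, 0.9]
    q, s = quantize_int8(w)
    err = max(abs(a - b) for a, b in zip(w, dequantize(q, s)))
    print(q, s, err)  # rounding error is at most scale / 2 per weight
```

Each int8 weight takes 1 byte versus 2 bytes in fp16, so a hypothetical 3-billion-parameter model shrinks from about 6 GB to about 3 GB of weights; 4-bit schemes halve that again, which is what makes phone-sized deployments plausible.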

Comparative Overview: The 2026 Model Landscape

The following table provides a snapshot comparison of these leading models based on their defining characteristics.

| Model Name | Developer | Primary Strength | Modality Focus | Best Application Domain |
| --- | --- | --- | --- | --- |
| OmniNet-X | OpenAI | Real-time perception & speed | Live Video/Audio | Augmented Reality, Robotics |
| Gemini Quantum | Google DeepMind | Deep reasoning & massive context | Scientific Data/Text | Scientific R&D, Long-term Strategy |
| Claude 4 Opus-Agent | Anthropic | Reliable, safe autonomous action | Business Software APIs | Enterprise Workflow Automation |
| Llama 5-Multimodal | Meta | Open-source customizability | Text/Image/Code | Specialized Industry Verticals, Privacy-First Apps |
| NeuroEdge-S | Cerebral AI | Efficiency & on-device operation | Local OS/IoT data | Mobile Assistants, Smart Home |

How These Models Are Powering Next-Gen Applications

The shift from “tool” to “agent” is changing the very nature of software. Here is how developers are utilizing these 2026 models:

1. The Self-Healing Enterprise

Instead of using software to manage a supply chain, companies now employ AI agents. An application powered by Claude 4 Opus-Agent monitors global shipping news, weather patterns, and inventory levels. If a port strike is predicted in Rotterdam, the agent autonomously reroutes shipments, updates financial forecasts in SAP, and notifies relevant human stakeholders, all before a human manager even reads the news.

2. Hyper-Immersive Entertainment

Gaming and film are merging. Using OmniNet-X’s real-time video generation capabilities, video games now generate photorealistic assets and dialogue on the fly based on player choices. NPCs (non-player characters) are no longer restricted to dialogue trees; they have psychological profiles and memories, reacting dynamically to the player’s actions in a consistent, evolving world.

3. Decentralized Personalized Medicine

Applications built on fine-tuned variants of Llama 5-Multimodal are revolutionizing healthcare. A patient’s medical history, genetic data, and current symptoms (captured via video consultation) are processed locally on a hospital’s secure servers. The model proposes highly customized treatment plans, cross-referencing the latest medical journals, without patient data ever leaving the hospital’s firewalls.

4. The “Do-It-For-Me” Internet

Web browsing has changed. Instead of searching for flights, hotels, and itineraries separately, a user tells their NeuroEdge-S mobile assistant: “Book a 5-day trip to Kyoto in April under $3000, focused on historical sites, and handle all the bookings.” The on-device agent then navigates websites, uses credit card autofill securely, and presents a finalized itinerary for approval.

Challenges Remaining in 2026

Despite these incredible advances, AI in 2026 is not without significant challenges:

- The Energy Crisis of Compute: Training models like Gemini Quantum and running real-time OmniNet-X inference requires staggering amounts of energy. The AI industry now ranks among the world’s largest consumers of electricity, intensifying the debate surrounding sustainable energy infrastructure.

- The Alignment Problem (Advanced Stage): As models become more autonomous, ensuring that their complex, multi-step actions align with human intent becomes harder. A model told to “maximize profit” might still take legally dubious actions if it is not constrained by extremely robust neuro-symbolic guardrails.

- The Agency Divide: The gap between organizations that successfully deploy autonomous AI workforces and those that don’t has widened significantly, creating a new form of economic inequality among businesses.

Conclusion

The year 2026 marks the point where AI stopped being just a fascinating technology and became the fundamental operating layer of our digital world. The models detailed above (OmniNet-X, Gemini Quantum, Claude 4, Llama 5, and NeuroEdge-S) are the engines of this new reality. They have moved us from a world where we use computers to one where computers work alongside us as capable, reasoning, and increasingly autonomous partners. As we look toward 2027, the focus shifts from “what can these models do?” to “how do we govern what they do?”

Frequently Asked Questions (FAQs)

1. What is the main difference between the “Chatbots” of 2023 and the “AI Agents” of 2026?

The primary difference is agency. In 2023, models like GPT-4 were passive; they waited for a prompt and generated text back. In 2026, models like OmniNet-X and Claude 4 Opus-Agent are active. They can be given a high-level goal (e.g., “Plan a marketing campaign”), and they will autonomously break it down into steps, browse the web, use software tools, create files, and execute tasks without needing constant human hand-holding. They don’t just “talk”; they “do.”
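The goal-to-steps behavior described in this answer is often implemented as a plan-and-execute loop. Below is a minimal sketch, assuming a planner that returns a list of (tool, argument) steps and a registry of tools; the planner and tools are hypothetical stand-ins for model calls and real integrations, and a production agent would also re-plan after observing each result.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, str]  # (tool name, argument)
Planner = Callable[[str], List[Step]]
Tool = Callable[[str], str]


def run_agent(goal: str, plan: Planner, tools: Dict[str, Tool]) -> List[str]:
    """Decompose the goal into steps, then execute each step with the named tool."""
    log: List[str] = []
    for tool_name, arg in plan(goal):
        if tool_name not in tools:
            # The plan asked for a capability we don't have: stop instead of improvising.
            log.append(f"halted: no tool named {tool_name!r}")
            break
        log.append(tools[tool_name](arg))
    return log


if __name__ == "__main__":
    def toy_planner(goal: str) -> List[Step]:
        # Hypothetical fixed decomposition; a real agent would ask the model for this.
        return [("search_web", goal), ("create_file", "campaign_outline.md")]

    toy_tools: Dict[str, Tool] = {
        "search_web": lambda q: f"searched: {q}",
        "create_file": lambda path: f"created {path}",
    }
    for line in run_agent("Plan a marketing campaign", toy_planner, toy_tools):
        print(line)
```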

2. Can I run these advanced 2026 models on my personal laptop or phone?

It depends on the model. Massive foundational models like Gemini Quantum require industrial-scale cloud computing to function due to their immense size. However, 2026 has seen a breakthrough in “Edge AI.” Models like Cerebral AI’s NeuroEdge-S are specifically optimized (quantized) to run locally on high-end smartphones and laptops. While they may not have the deep reasoning capacity of Gemini, they are powerful enough for personal assistance and privacy-centric tasks without an internet connection.

3. How do models like Claude 4 Opus-Agent prevent making costly mistakes in business workflows?

Safety in 2026 relies on “interruptibility” and System 2 reasoning. Unlike older models that would confidently hallucinate an answer, Claude 4 is designed to recognize when a task exceeds its confidence threshold. If it encounters an ambiguous situation (like a high-value financial transfer), it triggers a “System 2” pause, verifies its logic step by step, and effectively “raises its hand” to ask a human manager for approval before executing the action.

4. Why would a company choose open-source Llama 5 over a more powerful closed model like OmniNet-X?

Data Sovereignty and Specialization. While OmniNet-X might be smarter generally, it requires sending data to OpenAI’s servers. Highly regulated industries (healthcare, defense, finance) prefer Llama 5-Multimodal because they can host it on their own private servers. This ensures no sensitive data ever leaves the building. Additionally, because Llama 5 is open-source, companies can fine-tune it deeply on their specific niche data (e.g., legal contracts), often making it smarter than a generalist model for that specific vertical.

5. Are these models capable of understanding the physical world, or just text and code?

They now understand the physical world. Models like OmniNet-X use world models, meaning they have an intuitive grasp of physics, object permanence, and cause and effect in 3D space. They don’t just “read” video as a sequence of images; they understand the spatial relationships within it. This capability is what allows them to guide robotics, offer real-time augmented reality instructions to mechanics, and generate coherent, realistic video content.